As artificial intelligence (AI) and machine learning (ML) technologies become more user-friendly, extremists and conspiracy theorists are using deepfakes, AI-generated audio and other forms of synthetic media to spread harmful, hateful and misleading content online.

Background & Definitions

Synthetic media is media content that has been fully or partially generated by technology, typically through artificial intelligence or machine learning processes. It includes any combination of video, photo or audio formats, and may or may not be created with malicious intent. Generative Artificial Intelligence (GAI) technology has made the creation of synthetic media more widely accessible, and bad actors have already leveraged such content to spread mis- and disinformation by way of deepfakes.

The definition of deepfakes has evolved over time to describe all forms of intentionally misleading synthetic media that are created using artificial intelligence or machine learning technology. Originally, deepfakes were defined as videos, sounds or images that replace the likeness of an existing individual with that of another. The term was coined in 2017 from the eponymous screenname of a Reddit user who shared pornographic content showing actress Gal Gadot’s face superimposed on the actual woman in the video – one of the first widespread deepfakes. “Cheap fakes” or “shallow fakes” are used to describe media that has been edited using far less sophisticated tools than those used for deepfakes, without the use of AI or ML technology. Tactics include reversing, deceptively editing or changing the speed of existing audio/visual media. Some synthetic media content may be created using both deepfake and “cheap fake” tactics.

In the context of synthetic media, lip syncing is a technique where voice recordings are mapped – or synced – to a video of someone, making it appear that the individual has said something which they did not. This is sometimes achieved with audio-based deepfake techniques like synthetic speech, which uses artificial intelligence to mimic real voices that have been “cloned” from audio samples of authentic speech from celebrities, politicians or others. Users can make these generated voices “say” whatever they wish, much to the chagrin of celebrities and politicians targeted. This step helps in the generation of more convincing deepfakes.

Generative Adversarial Networks, commonly known as GANs, pair two machine learning networks that are trained against each other: a generator that produces images and a discriminator that tries to tell them apart from real ones. The result is computer-generated synthetic media content. This technology lies behind many popular AI-image generation tools and is often used to create images of realistic-looking humans who don’t exist in the offline world – allowing users to generate eerily convincing profile pictures for social media, misleading “news” sites and more. Though GAN-generated images today can often be detected by spotting inconsistencies in fingers, teeth, background details and more, the technology is rapidly improving.

Synthetic Media for Hate Speech

In January 2023, Vice reported that an AI startup specializing in synthetic speech saw a worrying uptick in bad-faith individuals abusing its tool to create audio of celebrities and other public figures voicing hateful or violent rhetoric. Hyperlinks to those synthetic speech audio files remain accessible on message board archives, so the files can still be downloaded and weaponized. Many of these are highly disturbing.

Above: 4chan archive with synthetic speech audio files

In one example, a voice says, “Trans rights are human rights,” followed by choking or strangling noises. In another, the character Master Chief from the video game Halo shares graphic instructions for murdering Jewish people and Black people, such as “toss kikes into active volcanoes” and “grind n***** fetuses in the garbage disposal.”

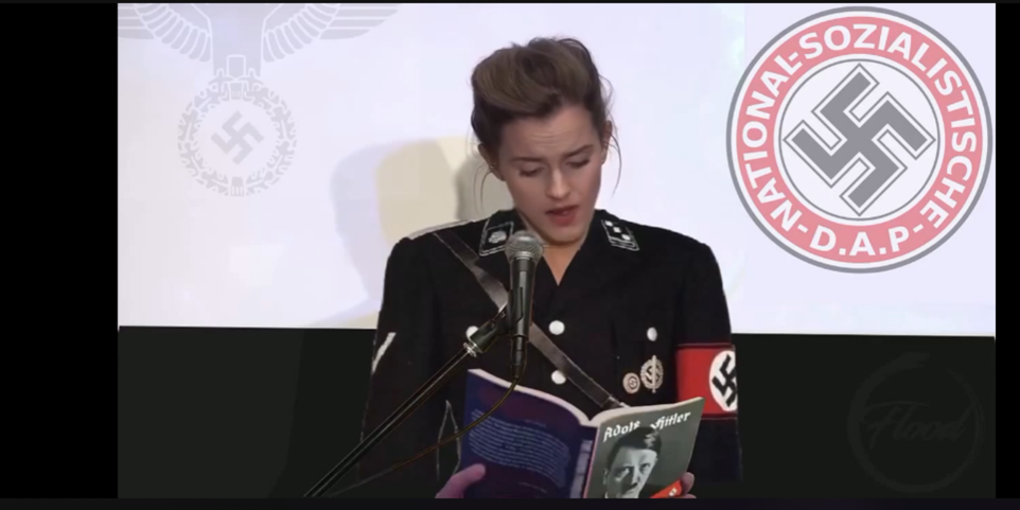

Synthetic speech files like these can be mapped onto videos of the speaker whose voice is being cloned. For example, extremists created a synthetic speech file of actress Emma Watson reciting Adolf Hitler’s manifesto, "Mein Kampf.” This audio was used to generate a deepfake video of Watson reading the book aloud at a podium while dressed in a Nazi uniform.

Above: Still from a deepfake video of Emma Watson “reading” Mein Kampf. Source: Odysee

The final product is not convincing; Watson moves in a non-human, robotic way, and the words don’t quite line up with the movement of her mouth. Still, as this technology evolves, the end results will likely become more realistic.

Deepfakes containing hateful or radicalizing rhetoric have been shared on extremist Telegram channels, including a chat for the white supremacist and antisemitic hate network, Goyim Defense League (GDL). Several of these videos, including the aforementioned Emma Watson deepfake, seem to have been created by the same user and were shared across a number of alternative platforms used by GDL including Odysee, Telegram and GDL’s own streaming network.

Another video which appears in the GDL chat, prefaced with the message, “Disinformation Czar spreads disinformation,” shows a deepfake of Nina Jankowicz, researcher and former director of the now-defunct Disinformation Governance Board. Using synthetic speech, the video falsely depicts Jankowicz making a series of disturbing claims, including that “the word disinformation was made up by Jews to define any information that Jews don’t like” and describing Jews as a “scourge of evil.”

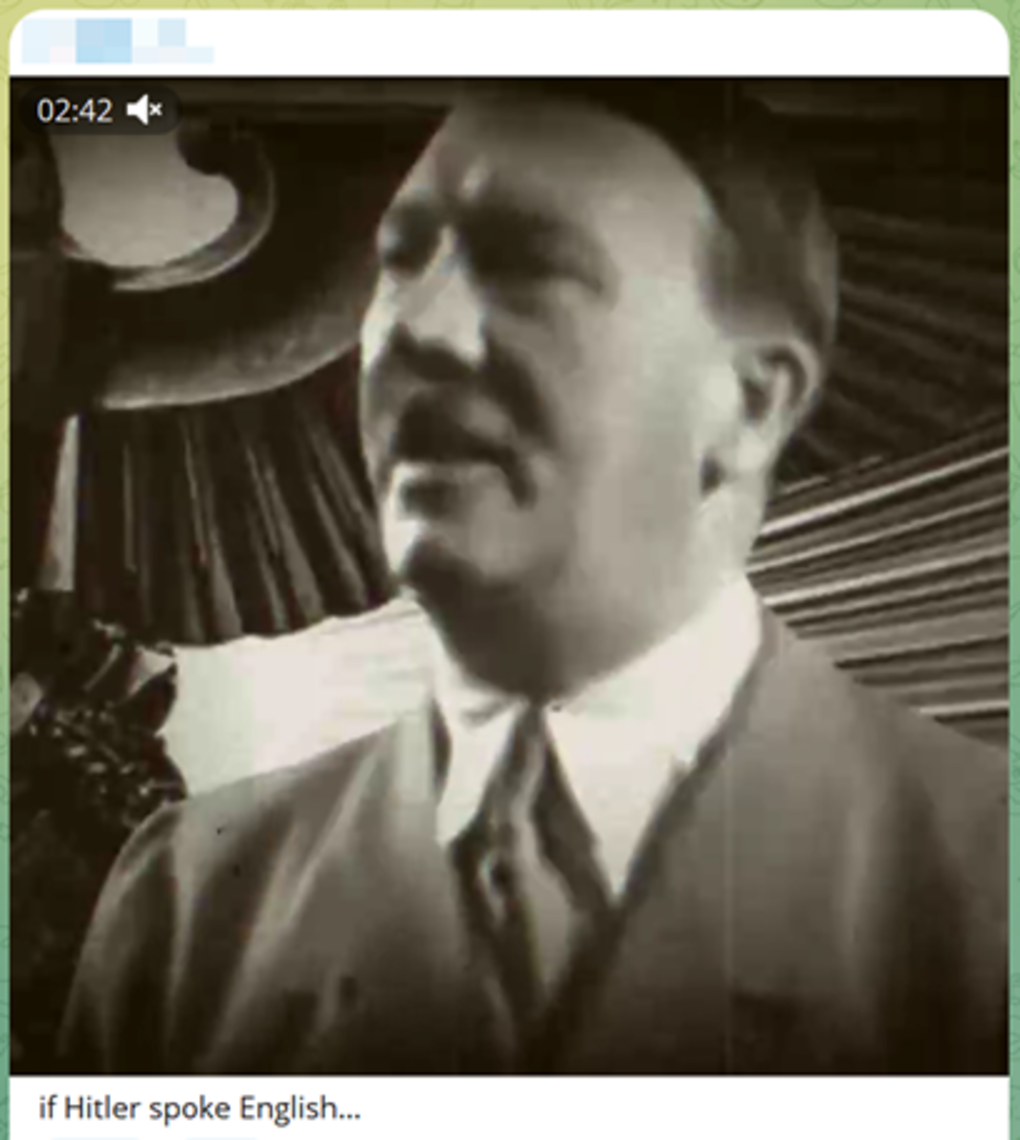

Deepfakes of Adolf Hitler, which use AI to depict him delivering old speeches that have been translated into English, were shared in both a GDL chat and another white supremacist channel on Telegram in April 2023. One user requested feedback from the group, asking: “What do you guys think? Is it good enough yet to release to the masses?”

Above: Still from an AI-generated video of Adolf Hitler speaking English. Source: Telegram

Another racist and antisemitic content creator who focuses on so-called “Black crimes” also regularly creates and disseminates synthetic media content on their Telegram channel, Gab account and more. One of their videos from early April 2023 shows a deepfake of a BBC newscaster, who appears to be covering a recent story about racist dolls which were confiscated by law enforcement in England. Synthetic speech is mapped onto the reporter, making her “say” antisemitic statements about “Jewish tyranny” in the media, as well as how the police officers in question were only appeasing “their Jewish masters.”

Using a combination of synthetic speech and non-AI/ML editing techniques, this user also created a fake Planned Parenthood advertisement which demonizes what they refer to as “race mixing” and urges white women to abort biracial fetuses.

Above: Still from a fake Planned Parenthood advertisement showing offensive depictions of biracial couples. Source: Gab

The voiceover claims that white women have been “fooled by Jewish race-mixing propaganda” into ruining “ethnic continuity.” The end of the video includes apparently distorted images of biracial couples and their children, all of which were likely synthesized or manipulated using editing software or AI tools.

Synthetic Media for Misinformation and Disinformation

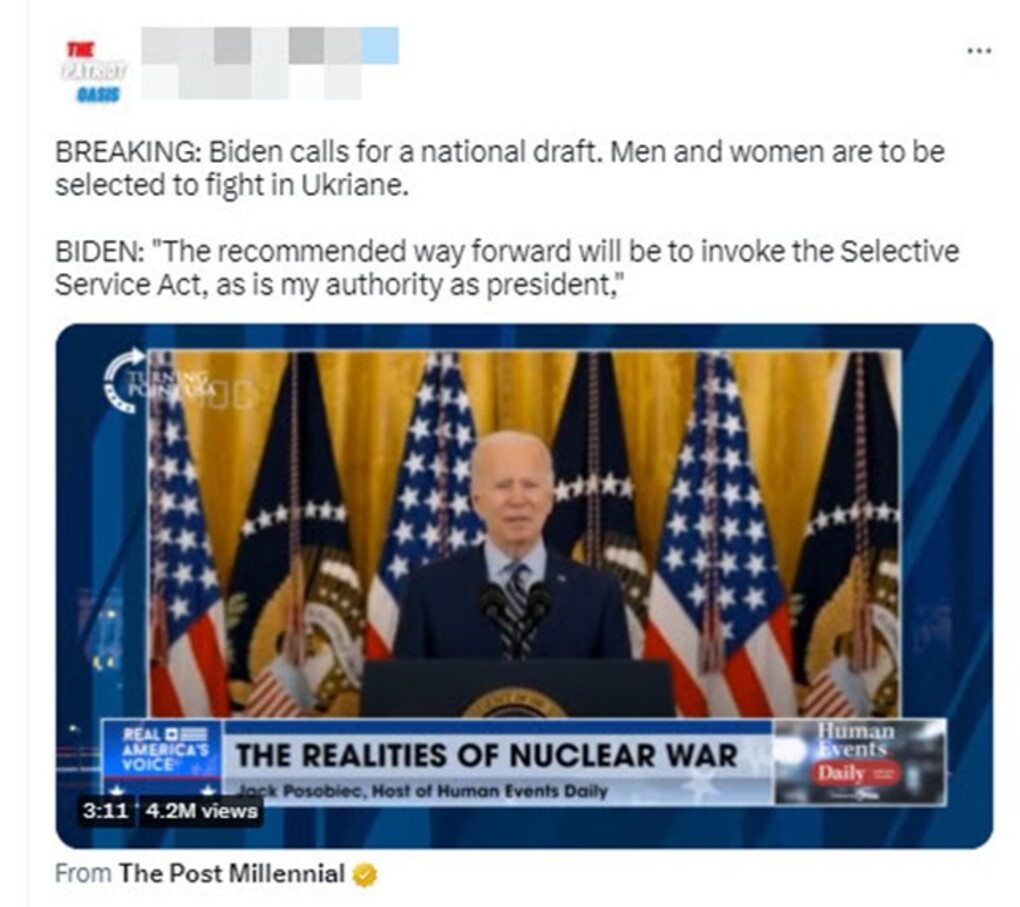

On February 27, 2023, a video surfaced on social media that appeared to show President Biden publicly invoking the Selective Service Act, announcing a draft of U.S. citizens for the war in Ukraine. A viral tweet that included the video read, “BREAKING: Biden calls for national draft. Men and women are to be selected to fight in Ukraine.”

Above: Deepfake of President Biden “invoking” the Selective Service Act. Source: Twitter

It’s not until about 45 seconds into the video that far-right activist and conspiracy theorist Jack Posobiec appears, telling viewers that the video was a deepfake created by his producers to show his predicted future for America and “nuclear war.” While the video was ostensibly created to prove a point, it was misleading enough to warrant a debunk from Snopes.

In early March 2023, a video with the caption “Bill Gates caught in corner” began circulating on TikTok. The modified clip appears to show Gates getting angry and defensive during an Australian news show interview. The interviewer seems to ask leading questions about the safety of the COVID vaccine, after which Gates becomes flustered and abruptly ends the interview.

Above: Still from misleading Bill Gates interview, edited using synthetic media technology. Source: TikTok

The actual footage shows no such thing, and fact-checking organizations were quick to debunk the video as a deepfake combining real and synthetic audio. The clip, cleverly edited to make it look as though Gates was confronted with evidence of a nefarious conspiracy, adds to the ongoing demonization of Gates and of COVID-related mandates and vaccines.

Synthetic Media for Misogynistic Harassment

Many of the current legal and ethical concerns regarding synthetic media center on the issue of “deepfake porn,” or synthetically created nonconsensual distribution of intimate imagery (NDII), which typically involves an individual’s face being mapped onto pornographic content without that individual’s knowledge or consent.

On January 30, 2023, Twitch streamer Atrioc issued a filmed apology after he was caught on stream with an open tab on his browser, which showed a website that hosts non-consensual pornographic content of female streamers generated by deepfake technology.

Above: Moment where Atrioc’s tabs were displayed on stream; highlighted/censored section shows female streamers in deepfake porn. Source: YouTube

QTCinderella, one of the streamers targeted as a deepfake on the website, responded to the incident on Twitter, writing: “Being seen ‘naked’ against your will should NOT BE A PART OF THIS JOB.”

Legal Consequences of Synthetic Media

There are currently no federal statutes in the United States that specifically address deepfakes and synthetic media. In 2021, Rep. Yvette Clarke (D-NY) introduced the “DEEP FAKES Accountability Act,” which was designed to “combat the spread of disinformation through restrictions on deep-fake video alteration technology.” However, the bill never advanced to a vote. As of May 2023, only four states – California, Georgia, New York and Virginia – have deepfake laws that go beyond election-related issues.

In the absence of such laws, the responsibility for mitigating synthetic media’s potential harms falls to technology companies, like social media platforms. For example:

Twitter’s synthetic and manipulated media policy prohibits the sharing of “synthetic, manipulated, or out-of-context media that may deceive or confuse people and lead to harm.” Content that meets these criteria may be labeled or removed, and Twitter may lock repeat offenders’ accounts.

Meta’s manipulated media policy falls under its broader community standards. While the policy applies to audio, images and video, Meta emphasizes the potentially harmful effects of “videos that have been edited or synthesized…in ways that are not apparent to an average person and would likely mislead an average person to believe” they are authentic. These types of videos are subject to removal.

YouTube’s misinformation policies include a prohibition on manipulated media, specifically “content that has been technically manipulated or doctored in a way that misleads users (beyond clips taken out of context) and may pose a serious risk of egregious harm.” YouTube says that it will remove this content, and that it will issue a strike against the channel that uploaded it if it is their second time violating community guidelines.

Snap’s community guidelines prohibit “manipulating content for false or misleading purposes,” but it is unclear how synthetic media specifically are evaluated in this context.

TikTok requires users to disclose “synthetic or manipulated media that shows realistic scenes” through captions or stickers. Additionally, synthetic media of young people under the age of 18, private adults or adult public figures when used for commercial or political endorsement are prohibited.

Reddit does not have any policies directly targeting synthetic media, though its communities, i.e., individual subreddits, may have their own standards. Many of synthetic media’s harmful use cases, such as harassment, impersonation and content manipulation, could fall under Reddit’s sitewide content policy.

Looking Ahead

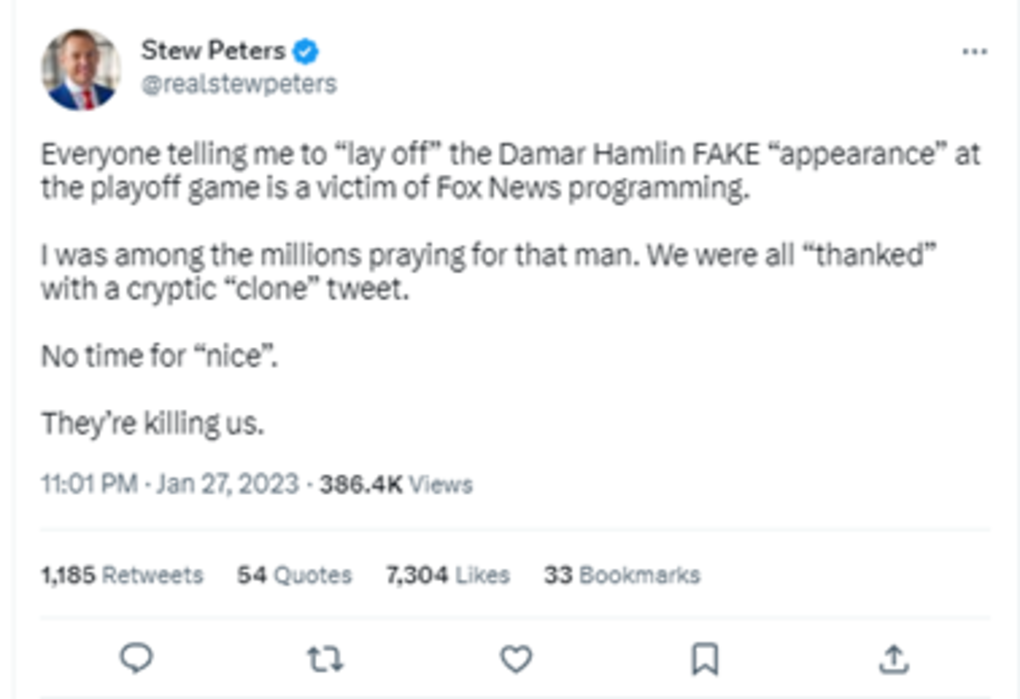

Beyond the concern that synthetic media will be used nefariously for disinformation campaigns, deepfakes have also made it easier for extremists and conspiracy theorists to publicly dismiss legitimate media content. For example, football player Damar Hamlin shared videos of himself following a televised on-field medical emergency to thank his fans and to assure them that he was improving. Anti-vaccine conspiracy theorists like Stew Peters, however, claimed the videos were fake.

Above: Anti-vaccine conspiracy theorist Stew Peters tweeting about allegedly “fake” Damar Hamlin appearance.

In March of 2023, an unmanned American surveillance drone was reportedly struck down over the Black Sea by a Russian fighter jet. Two days later, the Pentagon released footage of the encounter taken from the drone. Yet many claimed without evidence that the video was digitally generated, alleging that the Biden administration fabricated the footage to encourage anti-Russia sentiments.

Above: Twitter user claiming that U.S. drone attack video was generated via CGI. Source: Twitter

The ability of the masses to create convincing synthetic media provides fertile ground for a large-scale dismissal of facts and reality, which only benefits conspiratorial thinkers and disinformation agents. Worryingly, the use of synthetic media also helps bring violent extremist fantasies to life, raising the chilling possibility that deepfakes will only stimulate extremists’ appetite for the “real thing.”

With this in mind, technology companies and legislators can implement proactive mitigation strategies to help curb the growing threats posed by synthetic media. ADL’s Center for Technology and Society has written about pressing questions that must be considered given the recent prevalence of Generative AI tools. Below are some specific recommendations for government and industry:

For Industry:

Prohibit harmful manipulated content: In addition to their regular content policies – which may already ban some forms of synthetic media that are hateful, harassing or offensive – platforms should have explicit policies banning nonconsensual deepfake pornography and synthetic media that is deceptive, misleading and likely to cause harm. Platforms should also ensure that users are able to easily report violations of this policy.

Prioritize transparency: Platforms should maintain records/audit trails of both the instances that they detect synthetic media and the subsequent steps they take upon discovery that a piece of media is synthetic. They should also be transparent with users and the public about their synthetic media policy and enforcement mechanisms.

Consider disclosure requirements for users: Because platforms can play a proactive role in mitigating the often-irreparable harms of deepfakes and other harmful synthetic media, they should consider whether to implement requirements for users to disclose the use of such content. Disclosure may include a combination of labeling requirements, provenance information or watermarks to visibly demonstrate that a post involved the creation or use of synthetic media.

Utilize synthetic media detection tools: Platforms should implement automated mechanisms to detect indicators of synthetic media at scale.

For Government:

Outlaw nonconsensual distribution of deepfake intimate images: As noted above, four states have passed legislation outlawing deepfake nonconsensual distribution of intimate imagery (NDII). These laws increase protections for victims and targets of NDII, while upholding free speech protections and principles. Lawmakers in other states and at the federal level should work to pass similar legislation. This would ensure that perpetrators of deepfake NDII are held responsible across U.S. jurisdictions and could serve as an important deterrent for those considering using deepfakes to target individuals.

Include synthetic media in platform transparency legislative proposals: Through ADL’s Stop Hiding Hate campaign, we have worked with state lawmakers to pass legislation focused on getting insight into how platforms manage their own content policies and enforcement mechanisms. Ultimately, federal legislation is needed, as it would be the most effective means to ensure transparency from platforms. Importantly, reporting should include how platforms moderate violative forms of synthetic media.

Promote media literacy: Encouraging users to be vigilant when consuming online information and media, and creating or sharing educational media resources with them, may better inoculate the public against the effects of false or misleading content.
