Tamir Hayman, David Siman-Tov, Amos Hervitz

Social media, which enhance interpersonal connections and champion freedom of speech, also embody negative elements that can heighten alienation and polarization in society, and even threaten national security and national resilience. Professional bots and trolls deepen existing social rifts and weaken the resilience of Israeli society. A timely example is the online cognitive campaign being waged around the war in Ukraine. In Israel, however, insufficient attention is paid to this phenomenon. The question therefore arises: why have previous attempts not translated into effective action to tackle the problem? This paper analyzes a recent effort by an advisory committee to the previous Minister of Communications to formulate a plan for the restriction and regulation of social media. Building on the committee’s recommendations, the incoming government should take the requisite actions and regulate activity in this realm.

In recent years, a number of committees have been involved in efforts to limit unchecked discourse on social media in Israel: the Beinisch Committee (2017), which examined contemporary election propaganda; the Nahon Committee (2019), which examined ethics and regulation in the context of artificial intelligence; and the Arbel Committee (2021), which looked at means of protection against harmful publications on the internet. Some of the recommendations were implemented, such as the establishment of the Child Online Protection Bureau (the 105 national call center), but most were never implemented, due both to the global difficulty of imposing regulation on social media companies and to constraints originating in Israel's political and governmental system.

Recently, the Advisory Committee to the Ministry of Communications, led by the director general of the ministry and with the participation of public figures and academics, issued a report on the regulation of digital content platforms. The Committee was established based on an understanding that social media are often misused and that solutions must be devised at the state level, as has occurred in other countries. In tandem, over the past year an inter-ministerial committee led by the director general of the Ministry of Justice (the Davidi Committee) has been working to adapt Israeli law to the challenges of accelerating innovation and technology, as reflected on social media.

Recommendations of the Committee on the Regulation of Digital Content Platforms

The most important principle is to make the digital platforms responsible for a variety of content – from illegal content, such as incitement to violence, to harmful content, which to date has not received attention, such as the dissemination of fake news.

Legislative Recommendations

Impose legal liability on social media platforms, obliging them to set up representative offices in Israel to serve as the address for Israeli court orders, and requiring them to act proactively to prevent harmful content that encourages violence and violates the law against incitement. This is a critical amendment, and it is surprising that it has not yet been introduced. How is it possible for an organization providing a service to Israeli citizens to have no local address and therefore be free of responsibility?

Increase the transparency of social platforms, in view of the existing gap between the information held by the state and the public and the information in the possession of the platforms. Social media companies hold all the information, as well as the technological ability to control it, monitor inauthentic information, and rapidly remove harmful and false content. However, the platforms have no interest, even if they claim otherwise, in dealing with this problem: the more internet traffic grows, the greater their ability to sell their users to advertisers. Harnessing the platforms to action by making the information they hold transparent should be very effective, since it would restrain their ability to spread inappropriate content.

Classify content that must be removed because of its negative effects. This recommendation expands the range of content that the platforms are required to deal with beyond illegal content, to include harmful content that has a negative impact on public discourse. It is the most difficult recommendation to implement, since it makes the platforms responsible for content. Technologically, implementation is possible and could even become simpler with the widespread use of advanced AI tools. However, more work is needed on defining the rules of classification. This involves a complex array of considerations regarding the extent to which freedom of speech may be limited in order to protect individual and national security. There should be open discussion of this issue, with the participation of experts in law, ethics, and communications.

Organizational Recommendations

The Committee recommends setting up a regulatory system to establish a formal relationship between media companies and the state. The roles of the proposed system would include oversight and enforcement, mapping the risks emanating from the platforms’ activities in Israel, and acting as a research and knowledge body in the field of regulating online space. The system would formulate recommendations for legislation, serve as an address for public inquiries, and establish a mechanism for appeals. The Committee also suggested that the regulatory system include a public council that would formulate policy and hold decision-making powers in matters of supervision and enforcement.

The Committee recommends direct communication between the social platforms and specifically authorized bodies, called “credible reporters,” for the effective and credible removal of harmful content and incitement. The “credible reporters” would be NGOs, research bodies, and public authorities engaged in monitoring content in the digital space, and would be required to meet basic criteria for the role. This recommendation calls on the public to protect itself and to display mutual responsibility.

The State-Society-Digital Platforms Triangle

Limiting and monitoring social media requires strengthening the relationship between the state, the digital content companies, and the public. The entry of global companies that are not subject to local laws, and the development of innovative technologies for which local legislation is not prepared, have naturally created a deep divide. This divide can be addressed at the legislative and regulatory levels, as well as by NGO initiatives to impose restraints and promote a digital culture that is aware of the threats and equipped to handle them.

The current insufficient regulation of social media has implications for national security. Among other things, it allows hostile foreign elements to interfere in democratic processes (the West knows of Russian attempts to interfere in democratic elections; Israel knows of Iranian attempts). These elements exploit the absence of restrictive legislation, freedom of speech, and the ability to penetrate internal political discourse for subversive objectives. This risk was demonstrated in a simulation conducted by the Institute for National Security Studies (INSS) just before the recent Knesset elections.

However, foreign interference is only part of the problem of contaminated public discourse. No less problematic are domestic elements whose activities on the internet, using bots and artificial intelligence, contribute to polarization. The first victims are the younger generation, who are growing up in a toxic climate of disrespect for others. A divided and argumentative society, one that tolerates breaches of the right to privacy and damage to personal reputations, is a society whose national resilience is undermined and weakened.

Some of the media companies claim to be cooperating with the government initiative to increase regulation and have even announced that they take responsibility for their online content. They talk of their initiatives to invest in protecting privacy, respond to user appeals, and remove inauthentic or harmful content, as well as of their preparations for election campaigns. However, a discussion between company representatives and government representatives in the framework of the Davidi Committee showed that such activity is conditional on the willingness and interests of the companies and is based on “community rules,” which are inconsistent and constitute a black box of sorts for the public and the government.

Conclusion

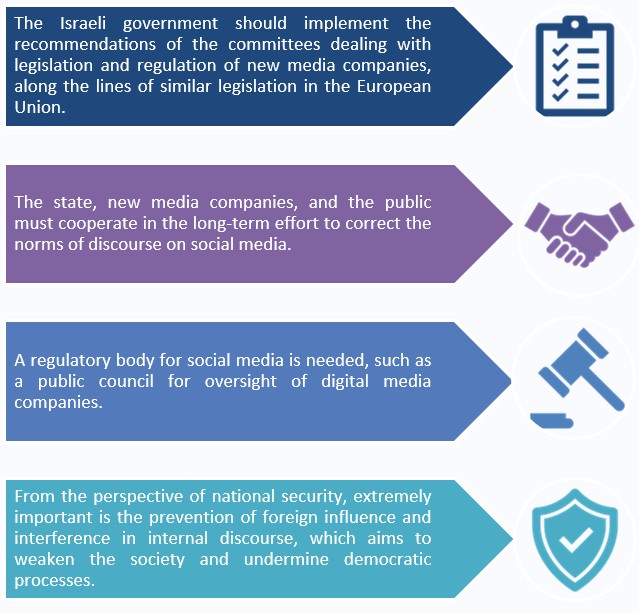

The new Israeli government should work to implement the recommendations of current and former committees dealing with legislation and regulation of new media companies, while aligning with similar legislation in the European Union (such as the General Data Protection Regulation (GDPR) on the protection of privacy).

Many of the recommendations are not the sole responsibility of the Ministry of Communications, and a dedicated regulatory body for social media is needed. A public council for the oversight of digital media companies could be the solution, facilitating the involvement of NGOs in the work of removing harmful content and increasing awareness of the risks inherent in the digital world.

Amending the norms of conversation on social media is a long-term process, requiring combined action from the state, new media companies, and the public. First, there is a need to increase awareness of the risks posed by wild and uninhibited online discourse. Second, legal and public steps must be defined with the purpose of limiting the damage to society from unrestrained discourse.

From the perspective of national security, the prevention of foreign influence and interference in internal discourse is extremely important, particularly since the absence of legislation and oversight allows hostile foreign elements to penetrate the internal debate and thus try to influence society to the detriment of democratic processes.
