Megan McBride

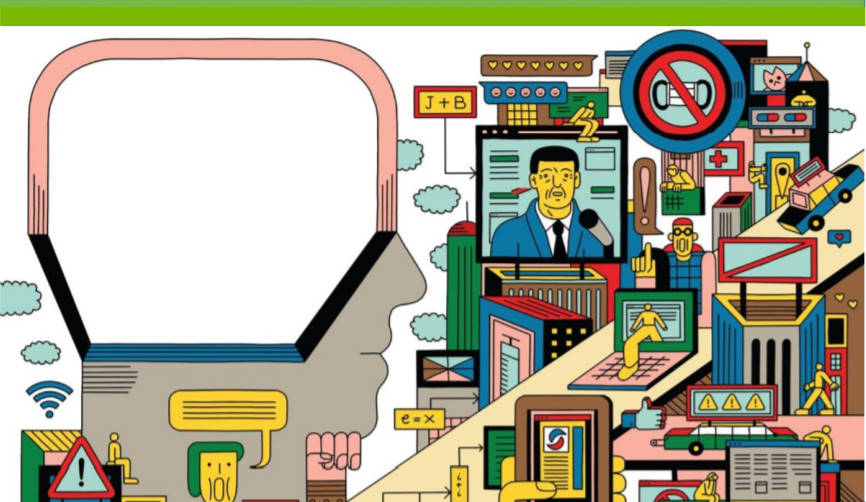

As humans evolved, we developed certain psychological mechanisms to deal with the information surrounding us. But in the 21st century media environment, where we are exposed to an exponentially growing quantity of messages and information, some of these time-tested tools make us dangerously vulnerable to disinformation.

Today, messages of persuasion appear not just on billboards and in commercials, but in a host of nontraditional places, such as the memes, images and content that friends and family share online. When viewing an Oreo commercial, we can feel relatively confident that it wants to persuade us of the cookie’s excellence and that its creator is likely Nabisco. The goals of today’s disinformation campaigns are more difficult to discern, and their creators are harder to identify. Few viewers of a shared meme about COVID-19 vaccines will have any idea of its goal or the identity of its creator. And because this content appears in less traditional locations, we are less alert to its persuasive elements.

In a recent CNA study, we examined how disinformation campaigns can exploit normal information-processing and social psychological mechanisms in this disorienting information environment. Our report, The Psychology of (Dis)Information: A Primer on Key Psychological Mechanisms, identifies four key psychological mechanisms that make people vulnerable to persuasion.

Initial information processing: Our mental processing capacity is limited; we simply cannot deeply attend to all the new information we encounter. To manage this problem, our brains take mental shortcuts when incorporating new information. For example, an Iranian-orchestrated disinformation campaign known as Endless Mayfly exploited one such shortcut, the tendency to trust familiar sources, by creating a series of websites designed to impersonate legitimate and familiar news organizations like The Guardian and Bloomberg News. These look-alike sites received less scrutiny from users, who saw the familiar logo and assumed that the content was reliable and accurate.

Cognitive dissonance: We feel uncomfortable when confronted with two competing ideas, experiencing what psychologists call cognitive dissonance. We are motivated to reduce the dissonance by changing our attitude, ignoring or discounting the contradictory information, or increasing the importance of compatible information. Disinformation spread by the Chinese government following the 2019 protests in Hong Kong took advantage of the human desire to avoid cognitive dissonance by offering citizens a clear and consistent narrative casting the Chinese government in a positive light and depicting Hong Kong’s protestors as terrorists. This narrative, shared via official and unofficial media, protected viewers from feeling the dissonance that might result from trying to reconcile the tensions between the Chinese government’s position and that of the Hong Kong protestors.

Influence of group membership, beliefs, and novelty (the GBN model): Not all information is equally valuable to individuals. We are more likely to share information from and with people we consider members of our group, when we believe that it is true, and when the information is novel or urgent. For example, the #CoronaJihad hashtag campaign leveraged the emergence of a brand-new disease, one that caused global fear and apprehension, to circulate disinformation blaming Indian Muslims for its origins and spread.

Emotion and arousal: Not all information affects us the same way. Research demonstrates that we pay more attention to information that creates intense emotions or arouses us to act. That means we are more likely to share information if we feel awe, amusement or anxiety than if we feel less-arousing emotions like sadness or contentment. Operation Secondary Infektion, a Russian-coordinated campaign, tried to sow discord in Russia’s adversaries, such as the U.K., by planting fake news, forged documents and divisive content on topics likely to provoke intense emotional responses, such as terrorist threats and inflammatory political issues.

Despite their impact on the spread of disinformation, these mechanisms can be generally healthy and useful to us in our daily lives. They allow us to filter through the onslaught of information and images we encounter on a regular basis. They’re also the same mechanisms that advertisers have been using for years to get us to buy their cookie, cereal or newspaper. The current information environment, however, is far more complex than it was even 10 years ago, and the number of malicious actors who seek to exploit it has grown. These normal thought patterns now represent a vulnerability we must address to protect our communities and our nation.

The U.S. government is already working on technological means of thwarting state and nonstate actors spreading disinformation in and about the United States. And an increasingly robust conversation about legislative action might force more aggressive removal of disinformation from social media platforms. Our analysis suggests another path that merits additional attention: empowering individual citizens to reject the disinformation that they will inevitably encounter. Our work outlines two promising categories of techniques in this vein. One is preventive inoculation, such as warning people about the effects of disinformation and teaching them how to spot it. The other is encouraging deeper, analytical thinking. Both can be woven into training and awareness campaigns that would not necessarily require the cooperation of social media platforms, and such campaigns could be simple, low-cost and scalable. A comprehensive approach to breaking the cycle of disinformation will address not only where disinformation messages are sent, but also where and how they are received.
