Katerina Sedova, Christine McNeill, Aurora Johnson and Aditi Joshi

The age of information has brought with it the age of disinformation. Powered by the speed and data volume of the internet, disinformation has emerged as an insidious instrument of geopolitical power competition and domestic political warfare. It is used by both state and non-state actors to shape global public opinion, sow chaos, and chip away at trust. Artificial intelligence (AI), specifically machine learning (ML), is poised to amplify disinformation campaigns—influence operations that involve covert efforts to intentionally spread false or misleading information.

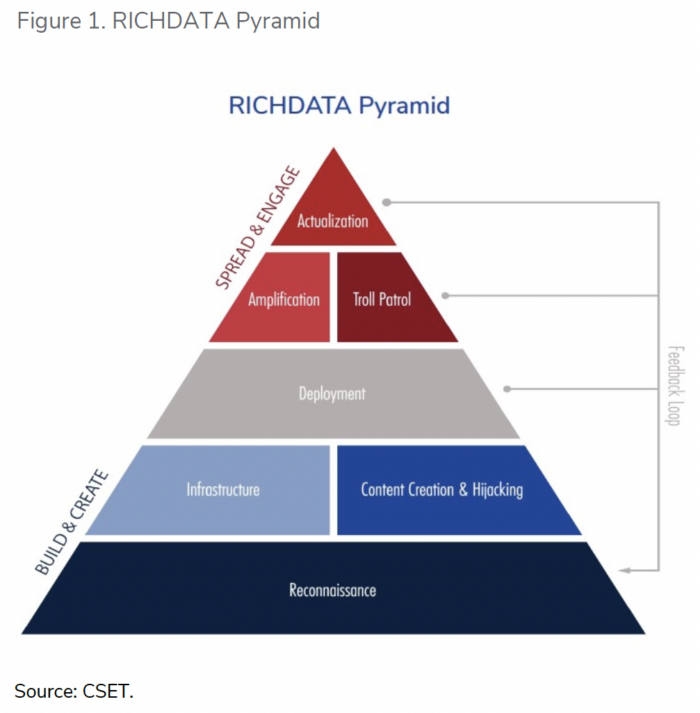

In this series, we examine how these technologies could be used to spread disinformation. Part 1 considers disinformation campaigns and the set of stages or building blocks used by human operators. In many ways, such a campaign resembles a digital marketing campaign, but one with malicious intent to disrupt and deceive. We offer a framework, RICHDATA, to describe the stages of disinformation campaigns and commonly used techniques. Part 2 of the series examines how AI/ML technologies may shape future disinformation campaigns.

We break disinformation campaigns into multiple stages. Through reconnaissance, operators surveil the environment and come to understand the audience that they are trying to manipulate. They require infrastructure (messengers, believable personas, social media accounts, and groups) to carry their narratives. A ceaseless flow of content, from posts and long-reads to photos, memes, and videos, is a must to ensure their messages seed, root, and grow. Once deployed into the stream of the internet, these units of disinformation are amplified by bots, platform algorithms, and social-engineering techniques to spread the campaign’s narratives. But blasting disinformation is not always enough: broad impact comes from sustained engagement with unwitting users through trolling, the disinformation equivalent of hand-to-hand combat. In its final stage, a disinformation operation is actualized by changing the minds of unwitting targets or even mobilizing them to action to sow chaos. Regardless of origin, disinformation campaigns that grow an organic following can become endemic to a society and indistinguishable from its authentic discourse. They can undermine a society’s ability to discern fact from fiction, creating a lasting trust deficit.

This report provides case studies that illustrate these techniques and touches upon the systemic challenges that exacerbate several trends: the blurring lines between foreign and domestic disinformation operations; the outsourcing of these operations to private companies that provide influence as a service; the dual-use nature of platform features and applications built on them; and conflict over where to draw the line between harmful disinformation and protected speech. In our second report in the series, we address these trends, discuss how AI/ML technologies may exacerbate them, and offer recommendations for how to mitigate them.
