Julian Droogan and Catharine Lumby

Summary

This Element presents original research into how young people interact with violent extremist material, including terrorist propaganda, when online. It explores a series of emotional and behavioural responses that challenge assumptions that terror or trauma are the primary emotional responses to these online environments. It situates young people's emotional responses within a social framework, revealing them to have a relatively sophisticated relationship with violent extremism on social media that challenges simplistic concerns about processes of radicalisation. The Element draws on four years of research, including quantitative surveys and qualitative focus groups with young people, and presents a unique perspective drawn from young people's experiences.

1 Introduction

One autumn Friday in 2019, shortly after lunchtime, an Australian man, Brenton Tarrant, strapped a camera to his helmet, linked the feed to Facebook Live, and went on to carry out New Zealand’s worst-ever terrorist attack. Inspired by far-right Islamophobia and white supremacism, the livestreamed attack eventually claimed the lives of fifty-one adults and children attending two mosques in the city of Christchurch, while leaving a further forty injured. Friday, March 15 became, in the words of Prime Minister Jacinda Ardern, “one of New Zealand’s darkest days” and the perpetrator became New Zealand’s first convicted terrorist. That day made history in another sense. The Christchurch attack, as it came to be known, was not the first terrorist attack to be livestreamed across social media to a global audience, but it was the first to go viral.

All terrorist attacks are to some degree performative, but the twenty-eight-year-old gunman went to extraordinary lengths to appeal to and engage an online global audience to spread his message of hatred and violence. Minutes before the Facebook livestream commenced, copies of his self-penned manifesto were linked to posts he made on social media platforms Twitter and 8chan. A URL to the livestream and words of encouragement to online followers were included so that others would access, share, and spread the attack in real time. The helmet camera resulted in nearly seventeen minutes of high-definition, point-of-view violence that purposefully replicated a first-person video game. A “backing track” made up of anti-Islamic songs, popular among the denizens of online far-right chat forums such as 4chan and 8chan, was played through a speaker strapped to a weapon. This was interspersed with instructional commentary as the attacker discussed the effectiveness of his weapons and attempted to glorify his violence through direct appeals to the audience. The weapons themselves were graffitied with symbols and phrases, such as “kebab killer,” referring to popular online racist memes. Perhaps most revealingly, just before the attack the terrorist made a direct comment to the camera saying, “Remember lads, subscribe to PewDiePie,” referencing Swedish YouTuber Felix Kjellberg. Kjellberg, who had been accused of using far-right material in his clips, was at the time the world’s top-subscribed YouTuber and in a race with Bollywood music channel T-Series to be the first to reach 100 million subscribers (Dickson 2019).

As the attack took place, only a limited number of online followers encouraged and cheered on the attacker in real time (Lowe 2019). However, copies of the footage soon began to spread across digital media. In the first twenty-four hours after the attack, Facebook moderators removed 1.5 million uploads. At over two billion users, Facebook represents by far the biggest audience in history. During the same period, YouTube removed tens of thousands of versions of the clip (it has not released exact numbers), many of them altered by users in an attempt to evade automated censor software. At one point, the number of new clips of the attack being uploaded to YouTube reached one per second, while hundreds of new accounts were created solely to share versions of the livestream. Some mainstream media also posted clips of the attack online, with six minutes of raw video footage being posted by Australian news.com.au showing the gunman driving on his way to the attack (Murrell 2019). Days after the attack, Facebook’s former chief information security officer Alex Stamos posted on Twitter that searches surged as “millions of people are being told online and on TV that there is a video and a document that are too dangerous for them to see” (Bogle 2019).

In the wake of the Christchurch attack, governments and social media companies scrambled to address the danger that terrorism and violent extremism on the Internet supposedly pose to vulnerable audiences online. Internet service providers in New Zealand blocked access to lesser-regulated platforms such as 4chan, 8chan, and LiveLeak (Ilascu 2019), while Reddit banned the subreddits WatchPeopleDie and Gore due to their glorifying of the attacks. Australia introduced legislation fining platforms and potentially imprisoning their executives if they did not remove terrorist content; its Prime Minister stated that social media companies must “take every possible action to ensure their technology products are not exploited by murderous terrorists” (Fingas 2019). On the international stage, New Zealand and France led the Christchurch Call, a global effort to hold social media companies to account for promoting terrorism by eliminating terrorist and violent extremist content online. This was because “such content online has adverse impacts on the human rights of the victims, on our collective security and on people all over the world” (Christchurch Call n.d.). The New Zealand Classification Office (n.d.), in banning the livestream, was blunt about the dangers it believed were posed by online violent extremist material, stating that those “who are susceptible to radicalisation may well be encouraged or emboldened.”

One year after the Christchurch attack, we, as academics at Macquarie University in New South Wales, the Australian state from which the attacker hailed, conducted focus groups with young people about their emotions and experiences when accessing terrorism and violent extremism online. Almost without exception these young people had accessed the Christchurch attack, either the full livestream or partial clips. What we saw was surprising. Instead of evidence for radicalisation, the young people we talked to revealed complex emotional responses and behaviours. For one participant, the attack was an affront to their online culture. They were shocked that their humour had been appropriated by a terrorist, but this did not result in them abandoning their online culture:

Yeah, that [references the Christchurch attack] – and it ruined the humour behind the subscribe to PewDiePie. After that moment, I was just – or that humour about his subscribe to PewDiePie, let’s take over the world. Then I – it just – those jokes just weren’t really funny at all. I remember seeing that and that just completely really broke something for me and I was just like oh, this guy is gross. This guy has the same sort of humour as me. He has a similar culture, meme culture and stuff – humour, as me and he’s doing all those terrible things that moved me away from that meme culture, I guess. But obviously, I’m still into it, so, yeah, I was like woah.

This Element is about young people, online terrorism, and emotion. In it, we explore the issues of how young people consume violent extremist material in the digital era: how it makes them feel, what they do with this content and these feelings afterwards, and how they talk about it with friends and family. If the Christchurch attack was “engineered for maximum virality” (Warzel 2019), a design principle that has since been emulated by far-right extremists in El Paso (Zekulin 2019) and Singapore (Walden 2021), then this Element is about the generation who have been targeted as the online audience. Yet despite almost universal concern from a number of quarters, including parents, the media, government, and tech companies, there have been surprisingly few attempts to initiate conversations with young people themselves about their emotions and the effects on them of exposure to online terrorism and violent extremism. This is surprising given that so few of these young people actually become terrorists. There is a lot of concern in this space, but not a lot of conversation.

Emotions, Terrorism and the Audience

The incorporation of the word “terror” in terrorism, defined here as “the state of being terrified or extremely frightened; an instance or feeling of this” (Oxford English Dictionary, n.d.), creates an inescapable relationship between the phenomenon of terrorism and emotion. Yet any study of emotions within the discipline of terrorism studies has been largely absent. In part this reflects a perspective of emotions as unconscious and beyond the control of an individual, and therefore problematising the presentation of terrorism as the product of rational decision-making (Crawford 2000, 124). Notable exceptions include studies by Neta Crawford and by David Wright-Neville and Debra Smith (Crawford 2000; Wright-Neville and Smith 2009). The distinction between positive and negative emotions has been shown by scholars within the history of emotions to be a function of history, with simple dichotomies restricting more complex analysis (Solomon 2008). However, the binary demarcation of positive and negative emotions remains in use among scholars examining emotions in the context of violent extremism. This is reflected in the tendency of terrorism scholarship to focus on negative emotions both among victims (terror, hurt, fear) and among perpetrators (humiliation, anger, hate). The focus on these types of emotions, as will be discussed here, is certainly valid. However, there remains the possibility that other emotions arise, including positive emotions such as love, happiness, relief, and compassion (Cottee and Hayward 2011, 975). Examining the intersection between emotion, social structures, political processes, and individual perceptions and/or behaviours can provide insights into the complex dynamics involved in processes of radicalisation to violent extremism (Wright-Neville and Smith 2009).

Although writing prior to the global dissemination of digital media, Neta Crawford’s comments remain acutely relevant:

Just as emotions are labile, emotional relationships may be altered. So, the categorization of a group’s emotional relationship to another group, and therefore the behaviours a group deems normatively obliged to enact, may change if empathy or antipathy are elicited through contact.

Her statement reflects the critical requirement for systematic examination of the relationships between emotions, violent extremism, and digital media to understand how, why, and when online content may (or may not) contribute to processes of radicalisation to violence. By marginalising the role emotions play in violent extremism, we risk returning to a one-dimensional model of radicalisation that pitches the all-powerful violent extremist against the vulnerable and passive individual.

Examining the spectrum of emotions also provides insight into the emergence of the moral panic that has arisen in response to the presence of violent extremist content on digital media and the framing of youth audiences as particularly vulnerable. It helps to problematise the notion that “technical things have political qualities … and the claim that the machines … can embody specific forms of power and authority” (Winner 1996; Nahon 2015, 19). Prioritising emotions in this way demands a reconceptualisation of the complexity of this “vulnerable” audience while identifying opportunities to strengthen and develop resilience to violent extremism at an individual, group, and societal level.

Understanding the audience is key to any appreciation of how or why terrorists engage in violence the way that they do, and why, since the advent of digital media, terrorists themselves have been so dedicated to posting violence online. According to most definitions, terrorism can usefully be thought of as an audience-focused performance of violence. The violent act is intended to create strong negative emotions (i.e., terror and fear) among those who are impacted by the violence, or who hear about it or view it through media reporting. Strategically, terrorism is a remarkably successful form of political violence due to this ability to cultivate widespread fear among an audience, particularly when this popular fear is then translated into demands for political elites to respond to the provocation in some way (Freedman 1983). Responses can include accommodating the terrorist’s demands, that is, for political concessions such as the autonomy or emancipation of a group, or forms of unintended state overreaction that put further pressure on the society that is under attack (Altheide 2006). In this way, it is no exaggeration to say that terrorism is in essence a strategy of political violence that relies on the manipulation of negative emotions, particularly anxiety, fear, and terror, among an audience usually made up of the general public.

It is surprising therefore that, given the central role audience emotion plays in the success of terrorist strategy, there has been relatively little attention placed by terrorism researchers on the range of emotional responses felt by audiences who view media reporting of terrorist acts. As we will see, those studies that have been conducted have mostly been in relation to traditional media, particularly newspapers and television, and not the new media landscape characterised by the Internet, digital platforms, and social media (Aly 2017). The majority of academic considerations of the audiences of terrorist attacks have focused their analysis on various classifications of the audiences into types. These include the uncommitted versus the sympathetic (Wright 1991), immediate victims versus neutral groups (Schmid 2005), or government versus media (Matusitz 2012). Although useful for thinking about how terrorists perform their violence and frame their media so as to impact various groups, this research does not reveal the variety of emotional responses, perceptions, and responses held by audiences themselves to the terrorist content they consume.

What we do know is that emotions such as fear, anxiety, and even trauma are not uncommon audience reactions to terrorist violence, even when that exposure is purely through media reporting (Sinclair and Antonius 2012; Kiper and Sosis 2015). For years after the September 11, 2001, attacks by al-Qaeda, for example, polls showed terrorism remained the highest-ranking fear among American youth (Lyons 2005). Research on human subjects has demonstrated that media exposure to terrorist events can create audience fear and sympathy (Iyer and Oldmeadow 2006), depression, and anxiety (Norris, Kern, and Just 2003), as well as lingering posttraumatic stress (in this case among children) (Pfefferbaum et al. 2003). This is despite the fact that the high levels of fear engendered by terrorism are usually disproportionate to the actual risks terrorism poses in Western countries, certainly when compared with other less sensationalised dangers such as homicides, domestic violence, or traffic accidents (Matusitz 2012).

Indeed, research on audiences who have been exposed to terrorist violence through traditional media shows that media saturation following a terrorist attack can result in “mean world” syndrome whereby audiences overestimate the risk of becoming the victim of terrorism and demonstrate an irrational desire for overprotection (Matusitz 2012). This overreaction to negative emotions has been shown to manifest as a form of catastrophising in which audience members feel either increased aggression towards out-groups, particularly when they share the same religion or ethnicity as the terrorists (Kiper and Sosis 2015), or an opposite fear that encourages capitulation to terrorist demands (Iyer et al. 2015). This ability of media exposure to terrorism to create fear, anxiety, anger, aggression, and prejudice towards an out-group has been shown to be more pronounced than in media about other forms of crime (Shoshani and Slone 2008; Nellis and Savage 2012).

The type of media through which terrorism is experienced also plays a role in how audiences respond. Sensationalised and tabloid news coverage has been shown, for example, to lead to the adoption of more negative emotions and hawkish foreign policy positions among American subjects (Gadarian 2010). These effects have also been found to increase when exposure is through a visual medium such as television, and especially when graphic and evocative imagery is used (Gadarian 2014). Vergani (2018) found that terrorism is perceived as more threatening by audiences living in countries dominated by market-oriented commercial and tabloid media. He suggests this is due to commercial media’s focus on entertainment and on arousing the emotions and passions of viewers, in part through sensationalising terrorist events. This contrasts with public-oriented media that emphasises factual non-emotive reporting, which correspondingly results in an audience that feels less terrified and threatened by anxieties about terrorism.

The research that we refer to above has tended to focus on traditional, rather than digital, media. It assumes viewers that are relatively passive and view news through traditional mediums such as print and broadcast media. However, in the digital environment there is a fundamental shift in the nature of audience responses and in how people expect to and do interact with media on different platforms and in differing contexts. Using these platforms, it is well-established that audiences become both consumers and producers of content. They are, in other words, part of the process of interacting with and disseminating content (variously termed “prosumers” or “produsers”), as a form of entertainment and social engagement (Ritzer and Jurgenson 2010; Bruns 2007). Indeed, research on how audiences receive terrorism content online, on how it makes them feel and act, is conspicuously missing (Aly 2017). Hence, most commentary about how online audiences experience terrorist content, or even become radicalised to violence, only speculates on the nature and extent of their impressions and how they are influenced. As noted by Aly (2017) this sort of commentary is “often based on an assumption that the violent extremist narrative works like a magic bullet to radicalise audiences already vulnerable and predisposed to becoming violent.”

In part, this assumption about the dangers of exposure to online terrorist content and the vulnerability of audiences reflects the application of a media-effects theory framework in understanding how exposure to digital media may lead to radicalisation to violence. A foundational theorist of the media-effects school of thought was Albert Bandura, a Canadian-American psychologist who used social science experiments to demonstrate that, by observing behaviours, people – and particularly children – learn to model behaviours and emotions. This model was then cross-applied to the theory that, by watching and absorbing media of various forms, vulnerable groups would be stimulated to mimic the behaviour and would be effectively desensitized. The best-known critic of this framework for understanding how people, and particularly young people, respond to media stimuli, including violent video games, is David Gauntlett, a British sociologist and media studies theorist. In his influential essay, Ten Things Wrong with the Media “Effects” Model, he argues that reputable criminologists consistently rank media engagement as one of the least influential factors among the causes of real-world violence (Gauntlett 1998). He notes that media effects studies are consistently conducted in artificial laboratory-type settings which ignore the multiple factors that influence how and why media consumers view material and the role that their pre-existing values and cultural and socio-economic backgrounds and experiences play in those interactions. Indeed, in their systematic review of protective and risk factors for radicalisation, Wolfowicz et al. (2020) noted the limited effect of direct and passive exposure to violent media in generating risks associated with radicalisation.

Overall, despite the shocking nature of terrorist violence going viral online, and the very clear strategy used by groups and individuals such as the so-called Islamic State and the Christchurch attacker in hijacking the Internet and social media to spread their propaganda, we still know very little about how this material affects young people. Assumptions taken from the field of terrorism research and traditional media-effects theory suggest that the primary emotional response to this material must be terror, or something like it, and that the result must be trauma or even radicalisation to violence. However, we do not know if these assumptions are valid, particularly in the context of the blended online environments through which young people increasingly inhabit and mediate their social relationships. This Element, and the research that informs it, explore this new environment through the voices and experiences of young people themselves.

The Research Project

The genesis of this research came about in early 2015. That year saw a rapid rise in concern among the government, the media, and the general public about the dangers the Internet posed to young people who were being increasingly exposed to violent extremism online, particularly through digital media. The succeeding twenty-four months became something of a watershed for fears about the confluence of terrorism, the Internet, and “vulnerable” youth. The so-called Islamic State (IS) had commenced a global online media campaign that was tech-savvy, aimed at youth, and beginning to result in large numbers of people – often young people – leaving their homes to join IS’s self-styled caliphate (Bergin et al. 2015). Others, once connected to the extensive and well-funded online IS networks, were remaining home and supporting the group in other ways: as financiers, recruiters, propagandists, or even as violent actors. Around this core of terrorists and their supporters a larger, grayer area was coalescing; this was made up of IS “fanboys” and “fangirls” using the Internet and social media to create and spread violent and extreme pro-Islamic State memes, songs and video games (Winter 2015). At the same time, schools began to report an emergent phenomenon of “Jihadi cool” among students, a transgressive subculture adopted by rebellious youth, sometimes as young as primary school age (Cottee 2015). It is not surprising that increasingly frantic questions began to be asked by concerned parents, teachers, politicians, and national security practitioners about the vulnerability of youth on the Internet. Was a whole generation being radicalised overnight via their mobile phones and social media accounts? In this climate of uncertainty and fear, there was no shortage of terrorism commentators and instant experts warning that online violent extremism presented a new and sinister threat that adults and established security agencies were completely unequipped to counter (Burke 2015).

As academic researchers working in the fields of terrorism studies and media studies, we began to ask questions about this phenomenon. Just what is the role of the Internet in creating terrorists? In particular, how exactly was online violent extremist content received, interpreted, and processed by young people themselves? Why, given the volume and frequency of engagement with this type of content, were so many young people not becoming radicalised to violent extremism? It was clear that there were major gaps in our understanding of terrorism and the Internet regarding the role and influence of online violent extremist messaging on the phenomenon of radicalisation. While literature in the field acknowledged that the Internet played some part in radicalisation processes for some people and in some circumstances, there was little actual evidence to support assumptions of causality between young people accessing online violent extremist content and becoming radicalised to violent extremism (Von Behr et al. 2013).

As concern about online youth radicalisation grew, and as Islamic State’s propaganda was joined – and then superseded – by online far-right violence and extremism, one thing became increasingly apparent: for all the attention given by the media and government to this problem, nobody was asking young people (Frissen 2021). Their experiences navigating these difficult online spaces, and their own ideas about what constituted “violent” and “extreme” content, were not being recorded or considered. Nor were their emotional responses and strategies of coping. Here we present our research with young people reflecting and talking about how they navigate violent extremist material online, how it makes them feel, what they do with these emotions afterwards, how they talk about them with friends and adults, and their experiences of the contested process of radicalisation. Through this, we hope to reinsert the voices and experiences of young people into a debate that has not gone away but that has only intensified over the succeeding years.

The young people who generously gave us their time and trust to discuss their experiences, during what became an increasingly anxious time as Covid-19 made its mark on Australian campuses, are referred to as “participants” throughout. Quotations from participants are in general verbatim, although some minor modifications were made at times and when necessary for purposes of anonymity. Numeric references are used to indicate a discussion between different participants within a single focus group, while all other quotes reflect comments from a single participant.

Definitions

The arguments presented below rely on two key terms – “violent extremism” and “digital media.” Our definitions for these complex and contested phenomena are set out here.

Violent Extremism

There is little consensus in the literature as to the definition of violent extremism. Its popularity in academic and policy circles arose in part to address the intrinsic relationship between terrorism and the politics of power. The term has found itself intertwined with political narratives of power, exclusion, and control, and as such is drowning in definitional complexity (Elzain 2008, 10). We use the definition of extremism by J. M. Berger who in turn draws on social psychological theories of social identity (Berger 2018). Reflecting the findings of the work presented here, this definition is centred on the primacy of social relationships and the tendency of these to generate distinctions between in-groups and out-groups based on perceived social connections. These differences are not necessarily problematic and, as Berger notes, are often celebrated within pluralistic societies. Violent extremism occurs when an out-group is systematically demonised and positioned as an acute crisis for the survival of the in-group, necessitating decisive and hostile action against the out-group (Berger 2018, 121–122).

The work of Manus Midlarsky (2011) provides a theoretical model for why an out-group may be framed as a threat to the survival of an in-group, and how this can lead to the creation of extremist social movements willing to perpetrate violence and murder. Midlarsky argues that a loss of political and social authority by an in-group can lead to deeply felt perceptions of injustice and mass emotions of anger, shame, and humiliation. This shared perception by the in-group opens a cognitive window allowing dehumanisation, violence, and even the extermination of those considered to be the problem. Although Midlarsky’s framework relies on a consideration of the shared emotions of masses, rather than the emotions of individuals and their contribution to collective social movements, it remains useful in keeping us alert to the foundational emotional drivers of violent extremism and terrorism.

Online violent extremist content may be expressed in multiple ways, drawing on humour, satire, glorification, and deniability to attract and speak to different audiences. However, underpinning all these variations is a commitment to a polarised way of viewing the world that is intolerant to dissent. It is a view of the world whereby the survival of the in-group requires the destruction of the out-group.

Digital Media

In the focus groups we conducted, the environment under examination was usually referred to as “social media” with the unspoken assumption this covered social media platforms such as Facebook, Instagram, and TikTok as well as tools such as Google and Wikipedia. However, it became increasingly clear that participants engaged with traditional legacy media sources such as newspapers and television through online mediums. These mediums in turn were far more than just channels for transferring information but represented what Simon Lindgren describes as “environments for social interaction” (Lindgren 2017, 4). This complexity has led to a shift in the discipline towards the adoption of the term “digital media” to capture the blurring of both traditional and new media with online and offline environments. While this term usefully captures the combination of co-existing forms of information and technologies, we remain cognisant of the risk that removing “social” from the phrase will muffle the fundamentally important relationship between human emotion, behaviour, and technology. While technology is by no means a passive phenomenon, it is essentially humans that make and use technology. Digital media should therefore be read as an enabling environment, within which interactions occur, that facilitates dynamic social relationships between people, information, and technology.

2 Youth and Online Violent Extremism

The surveys and discussions with young people about their experiences of terrorism and violent extremism online that are presented here come from research conducted between 2015 and 2020. This research project, supported by an Australian Research Council Discovery Project grant, focused on examining how youth audiences interact with online violent extremist content, during and after exposure. Considering that terrorists undertake attacks and spread propaganda primarily to instil the emotion of terror in an intended audience, and to then leverage this terror to their own advantage, we were particularly interested in finding out more about the feelings, perceptions, and emotions that young people experienced when interacting with online violent extremism. Through recording how online violent extremist messages are received, interpreted, discussed, and reflected upon by youth audiences, we hoped to develop a baseline of evidence about the strategies of resistance and resilience employed by those inhabiting these online spaces. Both research and experience attest that the overwhelming majority of people, of whatever age, who experience violent and extreme content online do not become radicalised to violent extremism, let alone join terrorist organisations (Conway 2017). This being the case, the interaction between young people and online violent extremist content, particularly the emotions they feel when exposed, must be more complex, nuanced, and subtle than dominant discourses suggest.

Our research involved a mixed-method investigation into young people’s experiences online, divided into two main phases. The first phase was broadly quantitative and exploratory. It drew on the results of online quantitative polling of approximately 1,000 Australian young people between twelve and twenty-four years of age, and asked about aspects of their experiences with online violent extremism. As will be discussed below, this survey data was part of a broader online polling commissioned by the Advocate for Children and Young People (ACYP), who used a certified social market research company to conduct the research in line with industry standards and regulations. The second phase was broadly qualitative, building a set of more in-depth questions based on the findings from the earlier survey. It consisted of a series of seven in-depth focus group discussions with young people between the ages of eighteen and twenty-four. These focus groups were structured around open and semi-structured discussions among young people and facilitated by the three-person research team. The focus groups and use of the survey data were conducted in compliance with Macquarie University Human Research Ethics (No: 52019347312409). This required that all participants sign a consent form that detailed the difficult nature of the subject matter, specified that we would not be providing new or explicit content during the focus groups, and explained how information and activities of interest to legal authorities were not to be discussed. To mitigate the possibility of distress to participants, we provided the details of relevant support services including those of the helpline StepTogether that specialises in countering violent extremism and of broader counselling providers. The information was reiterated at the beginning and end of each focus group.

Both the survey and the focus groups were carried out in the Australian state of New South Wales (NSW) between mid-2018 and the beginning of 2020. The two phases were designed to capture the experiences and voices of the generation in which social media and smartphone use has become almost ubiquitous. Many of the young people we surveyed and talked with had experienced the rapid expansion of Islamic State and far-right violence and extremism online, had been part of friendship circles in which online violent extremism and terrorism were discussed and had become key social touchstones, and had seemingly found ways to navigate these difficult spaces successfully. It should be noted that the young people we surveyed were also part of a generation that had been impacted by heightened parental, school, and government concern about the dangers of terrorism on the Internet and the possibilities of youth radicalisation (Bergin et al. 2015).

This mixed-method design incorporating both quantitative and qualitative data was employed in order to build empirically on what is known about young people’s experiences of online violent extremism, rather than on what commentators assume (or fear) about it. In addition, rather than repeating the numerous studies attempting research into the elusive process of online radicalisation (Reference Meleagrou-Hitchens, Alexander and KaderbhaiMeleagrou-Hitchens, Alexander, and Kaderbhai 2017), we decided to explore the wider issue of how young people interact, both emotionally and behaviourally, with online violent extremism. Instead of looking for the “one in ten thousand” who become radicalised to terrorism, we were more interested in how the full cohort and their friends navigated this space, the problems they faced, and the solutions they devised. It is our contention that it is impossible to understand radicalisation to violence online, and the risks that it poses to youth, without first gaining a clearer picture of the diverse ways young people have themselves devised to engage emotionally and behaviourally with these difficult online spaces.

Phase One: The Survey

The survey was carried out in collaboration with the NSW Office of the Advocate for Children and Young People (ACYP). The Advocate has existed since 2014 as an independent statutory office reporting to the New South Wales Parliament in Australia. Its aim is to advocate for the well-being of children and young people through promoting youth agency in decisions that affect their lives, to make recommendations on policy, and to conduct research into issues that affect children and young people. We partnered with the Advocate because of our shared concern for young people and interest in better understanding the context within which online violent extremism is accessed, experienced, and reacted to.

We included five questions related to young people’s experiences of online violent extremism in a broader attitudinal survey the Advocate commissioned in June 2018. The survey was distributed online to a representative sample of young people between the ages of twelve and twenty-four from across New South Wales, attracting approximately 1,005 respondents. All questions were presented with simple multiple-choice or drop-down-style answers. The questions were:

Have you experienced extremism online?

Where was the place that this online extremism was experienced?

What is the reason why you considered the experience to be extreme?

How did the experience of extreme content make you feel?

What action did you take after experiencing this extreme content?

One limitation of this phase of the research was our inability to ask specifically about “violent” extremism online. The reluctance to question young people about experiences of violence through a large government-sponsored online survey stemmed from duty of care responsibilities should any responses indicate engagement with violent extremism or terrorist activities. The absence of references to violence from the questions, however, did not prevent young people from reflecting on and reporting violent extremism in their answers. This is because experiences of specifically violent extremist content would presumably still be considered to have been “extreme,” and hence reported and recorded in the survey.

Phase Two: The Focus Groups

The focus groups were carried out in partnership with Macquarie University, located in Sydney, Australia. Seven focus group sessions were held in early 2020 on the university campus. These were ended suddenly in early 2020 due to the interruption caused by the Covid-19 pandemic and by the abrupt cessation of all face-to-face research due to university and government social distancing policies. Each focus group lasted for one hour, and consisted of members of the research team and a group of young people aged between eighteen and twenty-four years. Participants were drawn from the undergraduate cohort at Macquarie University; approximately half were domestic Australian and half were international students, mostly from the Asia Pacific. There was an attempt to balance between genders in recruiting focus group participants. The focus groups were advertised through campus-wide paper flyers and direct emails to student cohorts within the Faculty of Arts. Advertisements specifically asked for volunteers who had experienced violent or extreme material online and who were willing to talk about these experiences in a safe and non-judgmental environment. Participants were reimbursed with a $20 (AU) coffee voucher. Focus groups were purposely kept small and intimate to allow an in-depth and qualitatively rich discussion about their experiences. Each session had a minimum of three and a maximum of seven participants. In total, twenty-five young people participated in the discussions; each had previously reported having had significant experiences of online violent extremism.

Focus group sessions were recorded and later transcribed by an outside company. These transcripts were then analysed by the project team through NVivo software using a grounded theory approach. Any identifying characteristics of participants, such as name, age, nationality, and so on were removed through the transcription process. In this way, participants were anonymised in a way that ensured their privacy and safety, and that encouraged open and frank discussion about their online experiences. Participants were notified that while the research team were not collecting information about any illegal behaviour, any disclosures of such activity would be reported to authorities in line with legislative requirements.

Focus group discussions were kept open-ended to allow the young people to raise and reflect on the issues that have affected them, rather than on those that terrorism experts assume to be most important. At the same time, each focus group was structured around the same series of key questions and themes posed by the research team. These questions were designed to explore how young people defined violent extremism, how it made them feel emotionally, what they subsequently did with the material, why they thought this material was produced, and whether they discussed these experiences with friends or adults. The research team made a conscious decision to neither define violent extremism at the outset, nor to mention the terms “terrorism,” “terrorist,” “radicalisation,” or notable types of terrorism such as “al-Qaeda,” “Islamic State,” or “the far right.” This was done in order to not seed the participants with expectations about the types of violent extremism that researchers might be most interested in, familiar with, or consider to be of most concern. Nor did we want young people to be prompted into thinking we were most concerned with issues or mechanisms of radicalisation to terrorism. Instead, young people told us what most concerned them online, what they found to be violent and extreme, and how it made them feel and act. Even with these conscious absences, incidents of terrorism (particularly Islamic State and the far-right Christchurch massacre livestream) were raised during each focus group and were animatedly discussed by all the participants. Participants recalled incidents of terrorism and violent extremism online but had minimal knowledge of the specifics of group names, movements, and political contexts. 
Our strategy of initiating a truly open discussion about violent extremism online resulted in a wider and more diverse understanding than is usually found in the terrorism literature about the nature of this difficult environment and how it is navigated by youth.

Young People and Online Extremism

The following section details the results of the 2018 survey of the general youth population about their experiences online. It presents results pertaining to fundamental questions that remain unanswered or debated in this space. These are: whether young people experience extremism online; where they experience it; how they define “extreme”; how it made them feel emotionally; and what they did with this content afterward. These questions provide the foundational context for a more detailed discussion in later sections about the emotional experiences and subsequent behaviours of young people.

The questions about where this material was accessed online and, most importantly, about what they consider to be violent and extreme are supplemented by research taken from the in-depth focus groups. This was done to give the reader a fuller comprehension of the nature of these foundational issues and questions before moving on to the focus group discussions in Sections 3, 4, and 5.

Have You Experienced Extremism Online?

The survey revealed that, as shown in Figure 1, just over one in ten young people (12 per cent) reported experiencing extremism online. A much larger number, two-thirds (67 per cent), said that they had not, and 22 per cent didn’t know. The large percentage who marked “don’t know” could reflect confusion about the meaning of “extremism,” a term that is not necessarily self-evident despite high levels of public and media debate and concern over recent years. A similar finding was noted in the 2018 Australian survey by the eSafety Commissioner (State of Play 2018), where only 25 per cent of young people reported “negative experiences online,” indicating that extremism makes up only one aspect of the perceived negative aspects of youths’ online experiences. Emerging research from beyond Australia suggests that these figures should not be taken as representing universal experiences.

Figure 1 Have you ever experienced extremism online?

The numbers represented in Figure 1 contain a significant level of diversity. Older respondents (nineteen to twenty-four years old) were slightly more likely than younger ones (twelve to eighteen years old) to have experienced extremism, as were those who did not speak English at home. Males were more likely, at 14 per cent, to have experienced extremism online than females (9 per cent), mirroring research that consistently shows that while violent extremist material affects both males and females, it affects males at a higher rate (Reference Möller-LeimkühlerMöller-Leimkühler 2018). Those with a disability were much more likely, at 26 per cent, to have reported experiencing extremism online. One way of explaining this disparity is that it may reflect an increased amount of time spent online by those who identify as disabled, or alternatively it may reflect higher levels of discrimination faced. This may be particularly significant in light of the subsequent Covid-19 pandemic and elevated time spent online generally.

The places online where children and young people reported that they had experienced extreme content were, in descending order, Facebook (34 per cent), Instagram (16 per cent), and YouTube (16 per cent), followed by “social media generally” (15 per cent) (Figure 2). This broadly reflects the popularity of the main social media platforms among youth at this time, and prior to the growth of later popular sites such as TikTok or Parler. The absence of peer-to-peer encrypted sites such as Telegram or WhatsApp, as well as niche sites such as Gab, is notable. However, it is possible that some experiences on these platforms may have been captured in the large percentage (10 per cent) listed as “other.”

Alarmingly, a full 10 per cent of respondents claimed that extremism was “everywhere and anywhere” online, while 4 per cent saw it in the news and mainstream media. These figures reinforce an understanding of the blurred distinction between social media and legacy mass media, and even between the offline and online domains. The inclusion of mass media does suggest that isolating and elevating social media as an exceptional or separate realm does not adequately reflect the realities of young people’s experiences. This is backed by responses from the later focus groups held in 2020 and reiterates the need to conceptualise digital media as a spectrum of media types: old, new, online, offline, and other.

There was a persistent willingness during the focus groups for participants to list non-social media platforms as notable online spaces where they have come across violent or extreme content. Netflix was mentioned a number of times, in particular the US documentary Don’t F**k with Cats: Hunting an Internet Killer, which follows the online manhunt for an animal abuser who later murdered an international student. The Trials of Gabriel Fernandez, a US true crime serial depicting the abuse and murder of an eight-year-old boy, was also noted, as was the 2019 film Joker. Although produced for popular consumption and accessed through online streaming platforms, these TV shows, documentaries, and films were talked about in a way that showed that they had shocked some viewers. These fictionalised or documentary depictions of violent extremism also provided frames of reference through which the depictions of real-world online violent extremism were later interpreted and understood. According to one participant:

I got Facebook when I was quite older, so when I was 17, 18, so I didn’t really have that much access to all these online platforms at a young age. I would say the most violent extremism that I can remember was actually through TV. There was a documentary about a shooting in a high school in America. I remember all the details. I remember thinking that can happen, this happens. Every time a shooting does happen, I always think back to that and think back to – because they showed footage from what the kids had and screaming and fear and all. So every time something like that comes up, I always think back to when I watched that.

A smaller number of participants described news videos from mainstream media sites depicting organised crime or state violence by the police or armed forces as online violent extremism. These were discussed in relation to the Philippines and Chile specifically, and the clips were generally seen on Facebook. Surprisingly, no participants described online gaming platforms as the location where they experienced violent or extreme content.

In terms of the digital media platforms discussed, participants reported accessing violent extremist materials on a range of sites that closely align with the broader survey data described above, and that mirror the relative popularity of sites used by young people at the time. Facebook was by far the most discussed platform, making up more than half of all references in our sample. This is notable as it contradicts the popular assertions and some research (Anderson and Jiang 2018) that suggest Facebook has lost currency among young people, and that they are departing the platform for more niche sites. Our experience suggests that Facebook has been the primary platform through which young people experience violent and extreme material, at least in recent years. Following Facebook, the platforms mentioned during the focus groups were, in order of relative popularity: Instagram and YouTube in equal second place, and then Twitter and Reddit in equal third, followed by Snapchat. Less-visited sites that were mentioned fewer than five times included 4chan, WhatsApp, and Tumblr. Sites that have recently been associated with far-right extremism and violent content, such as 8chan/8kun and Gab, were not mentioned at all.

One site that was mentioned on three separate occasions was LiveLeak. This is an extreme and voyeuristic file-sharing and streaming service designed to make available extreme, taboo, and violent material. As expected, although this site was only mentioned on a handful of occasions, it was associated with ultra-violent material such as Islamic State beheading videos and footage of the Christchurch attack. According to one participant:

They have all these uncensored, really violent videos and I’d watch the ISIS execution videos. I’d watch it for fun, but I felt like that desensitized me towards a lot of things. I somehow managed to get hold of the Christchurch shooting footage as well last year. I think that was the first time in my life where I actually got a bit concerned about it, about my health.

What Do You Consider to be Violent or Extreme?

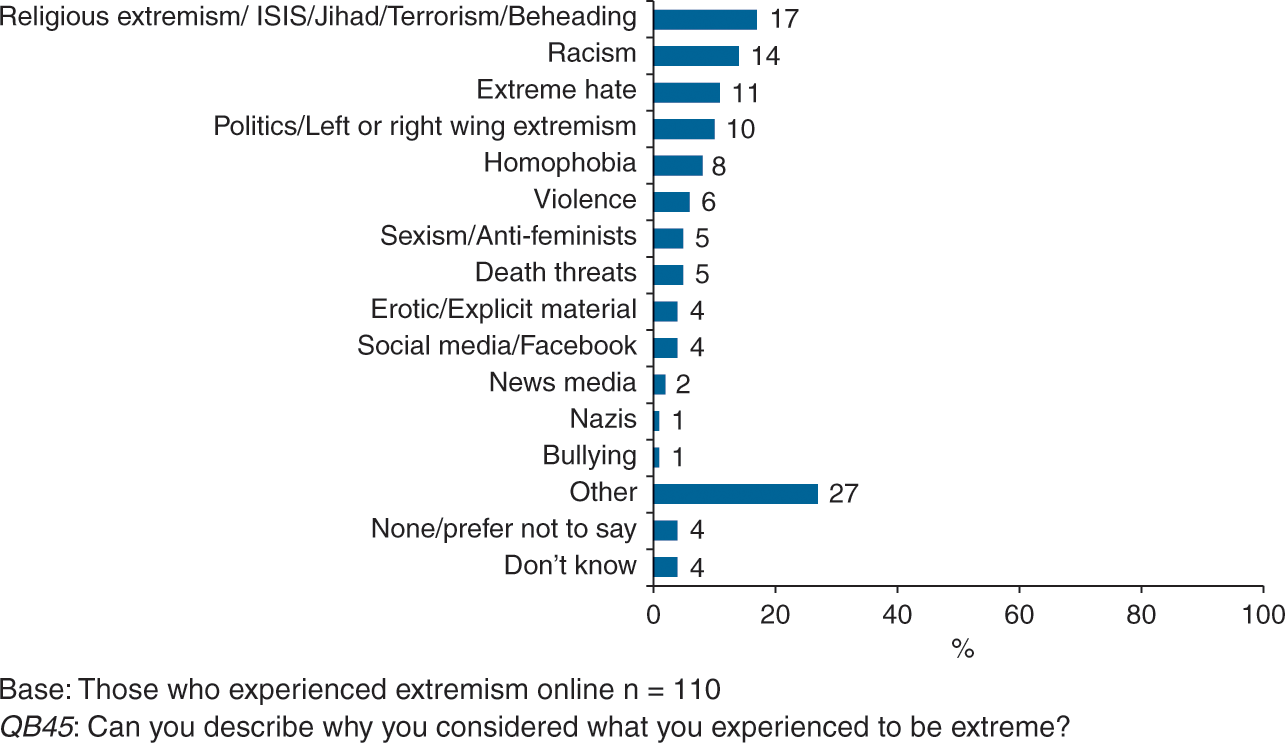

One of the most contentious questions facing digital media platforms, users, and governments is also seemingly the simplest – what exactly is violent extremist content? This was one of the first questions asked during the 2018 survey, the results of which can be seen in Figure 3. It is perhaps unsurprising that 17 per cent of respondents classified religious extremism, ISIS, jihad, terrorism, and/or beheading as violent extremist content. This type of terrorist content, including the livestream footage of the Christchurch attack, was also frequently referenced by the focus group participants:

There was a video where it was all young – twelve–thirteen – teenagers, and they were all just kneeling down and then they were saying something in their language. With guns and I think they started shooting … that’s the first thing that I think of.

Results of both the survey and the focus groups highlight the complexity underpinning classifications of what should be labelled online violent extremism. As demonstrated in Figure 3, survey respondents categorised homophobic content, sexist or anti-feminist content, and death threats as representative of extreme content. Interestingly, the highest frequency category selected by survey participants was “other” (27 per cent). The use of this catch-all term may indicate a lack of understanding among respondents as to the meaning of the question. This uncertainty was also reflected in the focus groups. For example, it was not uncommon for participants to discuss whether something counted as violent extremism, saying things like: “I don’t know – does it count? – someone on my Facebook went through a phase of posting pictures of foetuses.”

It is also possible that for some respondents the pre-given survey categories (based on usual interpretations of violent extremist content found in the terrorism studies literature and in media reporting) did not accurately capture their perceptions of extreme online content. This breadth in definitions of violent extremist content is increasingly paralleled in mainstream discussions of the blurred line between hate speech, incitement of violence, and expressions of negative emotions such as disgust, anger, dislike, and so on. Indeed, it has increasingly become “inherently difficult to define objective parameters for something as value-laden as highly political ideological discourse,” of which violent extremism is perhaps the greatest example (Reference HolbrookHolbrook 2015, 64).

This diversity of content labelled as “violent extremism” was reiterated in the focus groups. Again, Netflix documentaries such as Don’t F**k with Cats: Hunting an Internet Killer, The Trials of Gabriel Fernandez, and Surviving R. Kelly were all raised and discussed by participants as examples of online violent extremism. The inclusion of these types of content highlights the melding of traditional and new forms of media (Reference PascoePascoe 2011). Participants’ discussions around these documentaries, as well as real-world events such as the Columbine school shooting, point to the challenges that arise from the inherent dynamism associated with online content production. While the initial event or film was classified by young people as violent extremism, they also discussed the emergence of linked memes, music videos, and commentary that they classified as humorous. For example, memes of R. Kelly and the song Pumped Up Kicks made about the Columbine shooting were discussed more in terms of humour and/or celebrity status. As one participant noted with respect to the transformation of violent extremist content: “You just kind of make fun of what we think – like what’s usually evil or what’s usually violent, as a joke.”

The diversity of violence captured in the focus group discussions also reflected the presence of voyeuristic platforms that host video content depicting a range of violent acts such as people dying or being involved in car crashes. Here participants were unclear as to the intentionality of the act noting how it “could be purposeful or it could be accidental.” This is an interesting observation particularly given the importance granted to the attribution of purpose within traditional definitions of violent extremism. This diversity of violence is also reflected in the inclusion of gang-related violence as falling within violent extremist classifications. Participants described their experiences of viewing violence perpetrated by Mexican cartels and Brazilian gang-related shootings. Sexual violence was also cited by participants as an example of violent extremist content. Participants described encountering videos that depicted acts of graphic violence against women; for example, one user described a video where “it’s a dead body of a woman, and her – this part is cut open and someone … this guy was penetrating her. It was really graphic.” They also classified mainstream media reporting of sexual assaults against women in conflict zones, such as the stoning of Yazidi women, as examples of violent extremist content.

One of the atypical categories that emerged from the focus groups was animal-rights content. Discussions often pointed to the presence of videos and images that documented the abuse of animals, including slaughter and torture. There was a degree of nuance in the applicability of the term “violent extremism” for some of the content that was deemed as depicting natural events, even when the content had been moderated by a given platform. One participant, for example, identified a difference between a moderated video depicting “lions eating something” as opposed to “some vegan person has shared a video about an animal being tortured.” However, even here, as will be discussed below, the reactions to these violent and extreme examples were varied, with one participant explaining how “personally I do watch the dogs abused in YouTube.”

How Does Violent Extremist Material Make You Feel? What Does It Make You Do?

The 2018 survey captured young people’s emotional and behavioural responses to online extreme content. The results of these two questions (Figures 4 and 5) drew attention to the gap between the expected or anticipated emotions and behaviours and those that were actually experienced by young people. This was of great assistance in helping us articulate the framework for the later in-depth focus groups.

The initial survey data captured a complexity of ways in which young people expressed their emotional responses to online violent extremist content. The list of possible emotions presented in the online survey arguably skewed responses towards negative emotions, particularly those that are frequently discussed in literature focused on the aims and objectives of the producers of violent extremist content. The most common emotions selected from the list were sad, depressed, upset, and distressed (24 per cent). Despite this potential bias, the existence of a far broader and more nuanced spectrum of emotions also emerged from the results. Young people reported that extreme content elicited anger and annoyance (9 per cent), an emotion that despite its negative associations has also been correlated with mobilisation and positive feelings (Waldek, Ballsun-Stanton, and Droogan 2020). Around one-fifth (21 per cent) of young people indicated that the emotions listed in the survey did not appropriately express their feelings, resulting in their selection of “other.” This highlights the presence of a far more diverse spectrum of emotional responses to this content than was initially expected from a review of the terrorism literature.

When young people were asked about what they did with extreme content, the survey results again depicted a discrepancy between the behaviours anticipated by the survey creators and those actually performed by young people. The most common response from young people was that, after viewing the content, they would delete it (23 per cent) or report it to a teacher, parent, or adult or to the police or the eSafety Commissioner (20 per cent). Some of the respondents liked the content (13 per cent), shared it with friends and/or family (11 per cent), or shared it more broadly across social media platforms (5 per cent). However, once again the list of behaviours provided in the survey failed to include all the activities in which young people were actually engaging, with many respondents selecting “other” (27 per cent). The focus groups, as documented further in later sections, drew attention to the importance of placing the experiences of young people themselves at the centre of any understanding of online violent extremist content. The groups also articulated the presence of a myriad of emotional and behavioural responses that may provide opportunities for increasing the resilience of young people to engage in an environment that is unlikely to ever be completely free from violent and extreme content.

3 The Diversity of Reactions to Online Violent Extremism

This section explores the emotional responses young people described in response to engaging with violent extremism, in particular on digital media. In it, we present a series of emotional responses to violent extremist content that often differ from the terror, fear, or shock that audiences are assumed to experience. Indeed, in addition to negative emotions, young people reported a darkly humorous side of this material, its utility in rituals of bragging and rebellious performance, as well as a fundamental sense of curiosity. Many young people wrestled with fears about their exposure and had concerns about a poorly defined concept of desensitisation. However, overall, it was the social context of this material, how they and their friends discussed and responded to it collectively and in relation to one another, that served to frame much of their discussions.

Concerns with sociality, with their friends, and to a lesser degree with family, infused the lives of the focus group participants. They couched their descriptions of online violent extremist content, as well as the minutiae of their lives, within the bounds of social relationships among peers, family members, educators, and the broader adult populations. As will be explored in more detail below, the emotions young people discussed in relation to online violent extremism often emerged within the context of a feedback loop of social validation. These emotional responses were affected by norms that young people perceived to operate within their peer groups and throughout wider society.

Cultivating an awareness of the social context of experiences of online violent extremist material highlights a disjuncture that has arisen from the moral panic associated with online violent extremism. Social concerns often focus on the media and technology as both the source of the problem and the location of the solution. However, as will be highlighted in this section, when we return our awareness to the critical social context of the media, the problem takes on a far more diverse and complex dimension. In turn, existing and new opportunities arise to build up resilience among young people and their social environments.

Sociality

Sociality is one of the most important qualities defining the complex relationships that shape and are shaped by the online environment. Digital media and their associated platforms, including social media (Reference boyd and Ellisonboyd and Ellison 2007), are predicated on human relationships. Digital media are proactively designed to enhance sociality through their ability to host, facilitate, and enhance social interactions (Reference Marres and GerlitzMarres and Gerlitz 2018; Reference NahonNahon 2015).

The technological affordances of the multitude of social networking sites are not alone in crafting human emotional interactions with content and technology. As previously described in our definition of it, digital media’s sociality is melded by humans whose behaviours and emotional responses are shaped by complex motivations, including identity construction and social relationships (Reference Zhang and LeungZhang and Leung 2015). Studies highlight the complex effects social media has on social connectedness and have shown both increases and decreases in social connectivity (Reference Ryan, Allen, Gray and McInerneyRyan et al. 2017; Reference Ahn and ShinAhn and Shin 2013; Reference Grieve, Indian, Kate Witteveen, Tolan and MarringtonGrieve et al. 2013). It is unsurprising that violent, extreme content has an equally varied impact on young people.

Sociality and connectivity occur within an environment where, as has been well documented, the lived experience of an individual bridges the online and offline worlds. One participant in the focus group highlighted the fluidity between these environments when they stated: “Yeah. It’s kind of like a conversation starter as well, which is weird, but it’s – you definitely do talk about it offline moreover than online, I believe.” It is this fusing of realities, often described in mainstream media and academia as distinct, that provides insight into the sense of penetration that digital media has had for young people. As another participant noted, digital media “is so woven into everybody’s everyday lives.”

This inherently social behaviour is paralleled in violent extremism. As the French anthropologist Scott Atran notes, “[P]eople don’t simply kill and die for a cause. They kill and die for each other” (Atran 2011a, xi). That digital media has become a conduit for these social behaviours is hardly surprising. What is more complicated and far less understood, however, is what this shared mutual sociality means for individuals engaged in the production and consumption of its associated content, at both an emotional and a behavioural level. How are young people themselves navigating this sociality and its associations with identity formation as well as with the construction and sustenance of social relationships?

Research drawing on survey data examining young people’s viewing of violent content (defined more broadly than extremism) documents the critical role played by social relationships, particularly those found within peer groups (Third et al. 2017). In parallel, terrorism scholarship has increasingly documented the key role social relationships play in processes of radicalisation to violent extremism (Nesser, Stenersen, and Oftedal 2016; Sageman 2008). Friendship groups generate pressures to engage in certain behaviours despite little real understanding of or deep resonance with the underpinning beliefs, values, and objectives, as was the case for several Somali American young men who travelled together overseas to connect to the terrorist organisation Al Shabaab (Weine et al. 2009). The connection between sociality and violent extremism contributes to the associated fear that in this highly social online environment young people are particularly vulnerable.

While it was clear that peer groups significantly impacted upon the experiences young people had with violent extremist content, any correlation with an associated vulnerability to radicalisation to violent extremism was far less clear. For example:

You kind of feel obligated to watch it as well. After watching these series, or videos, or pictures, you tell people straight away. Ooh my gosh, did you see that image or did you see that?

At the same time, the importance of understanding engagement with violent extremist content within a framework of sociality is highlighted by the role peer groups play in navigating and demarcating appropriate responses. These responses help to construct the boundaries of the in-group. Here the peer group represents a behavioural shibboleth that helps to determine the legitimacy of an individual’s membership in a community (Muniesa 2018), demarcating who is in and who is out. The following discussion, for example, describes how the participants’ emotional responses were influenced by their peers:

Participant 1: Yeah, but it depends, because if you’re in a big group, and the person who first reacts – the person who reacts to it first, is usually how everyone reacts to it as well … .

Participant 2: Yeah, that’s true.

In another encounter, a participant reiterated the active role peer groups play in creating the emotional boundaries around an individual’s engagement with violent extremist content:

It would depend on the context. If my friends were sending me a violent video or something either as a meme or as something to be horrified by, then I would probably adapt my response to their response. Otherwise you wouldn’t really have much overreaction at all.

The conversations with participants consistently highlighted how their engagement with violent extremist content was framed within networks of sociality. These social relationships helped to moderate and mediate not only their behaviours, such as sharing content, but also their emotional engagement and responses. Young people documented a high degree of sensitivity to peer pressures. However, this pressure does not always result in vulnerabilities that increase the effectiveness of violent extremist content in “infecting” young minds. In contrast, the peer group also operated as a means of reinforcing the negative moral and normative framing of the content, something that may offer young people opportunities to strengthen their resilience to violent extremism.

Shock, Disbelief, Fear

The etymology of terrorism leaves no doubt that the emotion of terror is central to understanding how audiences are thought to respond to terrorist attacks. As Schmid has pointed out: “Terrorism is linked to terror which is a state of mind, created by a level of fear that so agitates body and mind that those struck by it are not capable of making an objective assessment of risks anymore” (2005, 137). This purposeful construction of fear and the idea that fear can be appropriately and effectively manipulated in various targeted audiences is a core feature of understanding terrorism as a strategy (Crawford 2013, 121).

The pivotal role played by fear in violent extremism makes it unsurprising that this emotion was frequently cited by the participants in conversations around their initial engagement with content. For example, when describing the viewing of a beheading video, one participant stated: “I remember seeing that and I was – at first, I just looked away and I couldn’t watch it. I couldn’t watch the guy getting his head sawed off. I was – I felt really – I felt a bit sick.” Another participant describes how when seeing violent content relating to animal rights: “Sometimes you do get a little bit sad and tired I guess, but I guess mainly just shock and outrage.” This expression of high negative emotions such as disgust, horror, and shock fits with wider research (Cantor 1998). In this quote a participant describes the emotions felt during the viewing of the Netflix documentary Don’t F**k with Cats:

So when he killed someone, it’s – I mean, he already killed someone on the subway but he killed someone in his own house and that was the very – that really got to me. It kind of gave me nightmares. I felt shocked, surprised. At first, you will feel empathy for him because he was being bullied but at that time you say he’s going overboard. All the things he does is – for – he’s the only priority now … so when I saw that, I was shocked and I – it was – I was speechless while watching that.

At times the fear expressed by participants was related to the perceived proximity or lack of proximity of the violent content. The nearer the violence was to their everyday experiences and communities the more fearful they felt in response to viewing the content online. For example:

I think the closer to home, like where the video comes from, like the Christchurch shooting or the Lindt Café, I think the closer to home it is the more fear it puts in you. Because when you see something happening over in America, well that’s all the way over in America, where these ones were closer to home. So I think they inflicted the most fear.

These expressions of negative emotions centred around the shock and fear generated by the viewing of violent online content align with research that, while focused on a slightly younger cohort (a survey of 25,142 nine-to-sixteen-year-olds), noted that the most commonly expressed emotional response to violent (although not necessarily extremist) content was fear or disgust (Livingstone et al. 2014). Given the association of these types of negative emotions with some of the psychological drivers towards engagement with violent extremism, it is unsurprising that the strong presence of negative feelings among young people also drives equally negative and fearful emotions in protective audiences such as parents, schools, and governments. Arguably, it is not only the presence of fear and related emotions that helps to shape the deeply concerned and even panicked responses. These responses are also driven by a pervading sense of a lack of control these same audiences have (or perceive themselves to have) over the online environment. This may be because a lack of control is central to fear (Nabi 2010).

This relationship between violence and terror has led to an institutionalization of the centrality of negative emotions such as fear, anger, and anxiety in terrorism studies predating even the attacks of September 11 (Crawford 2000). Yet, treating the relationship between violent extremist content and emotions as a series of biologically determined reactions misses the way in which an individual’s emotions are generated through a far broader and more dynamic process (Wright-Neville and Smith 2009). It situates the encounter solely within the domain of feelings as opposed to the more complex role emotions play as producers of effects and behaviours, both negative and positive. This complexity was expressed by respondents who frequently caveated the initial expression of fear and other negative emotions with references to other types of feelings.

The Christchurch attack case study outlined in the introduction is an example of the complexity of emotional responses to online violent extremism. The participant quoted in our introduction initially expressed shock and horror at the appropriation of what he identified as “his” online culture by the Christchurch attacker, noting how “it ruined the humour behind the subscribe to PewDiePie. After that moment … those jokes just weren’t really funny at all.” Yet he later noted that despite the generation of negative emotions he was still engaged in the culture, stating “but obviously, I’m still into it, so, yeah, I was like woah.”

Here, the shock generates the type of evaluation that Sara Ahmed describes in her work on the affective nature of emotions, whereby the initial reaction causes a type of self-reflection that can be evaluative in nature (Ahmed 2014). In this case, the initial horror of the appropriation of their online culture for purposes of real-world and livestreamed violent extremism caused the participant’s ongoing enjoyment of the culture to include a parallel sense of unease and disquiet.

While the shock, disbelief, and other negative emotions experienced by young people should clearly remain an integral part of any investigation of online violent extremism, the above examples point to a much-needed problematisation of any simple conclusion that starts and ends with just the emotion of terror. It is more useful to perceive these types of emotions as the starting point of an examination of the dynamic, nuanced, and often ambiguous lived experience of young people who, in their own words, are frequently exposed to and engaged with such content, online and offline.

Curiosity

The sensation of curiosity is familiar in daily life, yet it was surprising to many of the participants that they experienced curiosity in response to online violent extremist content. The following exchanges are good examples of how curiosity was often initially discussed:

I get a sense of curiosity because it’s not something I see every day. So it’s shocking and it’s like you get curious at what this is and – because it’s not common to see.

Given this forbidden yet ubiquitous content, it is hardly surprising that almost all participants expressed curiosity, an emotion centred on the desire for information (Hsee and Ruan 2016). Empirical research has demonstrated how morbid curiosity generates behaviours that lead humans to deliberately view and engage with negative content, including death, violence, and gore (Niehoff and Oosterwijk 2020; Scrivner 2020). This morbid curiosity was described by a participant who stated that:

I think there’s like a, kind of like a morbid curiosity about it. Like you kind of want to see what’s on the other side but it might not exactly be something that you want to see. It’s just like you’re kind of compelled to see it.

Curiosity can be experienced both positively and negatively. So, while for some participants it is likely that the taboo nature of the content triggered curiosity, others described a more positive process of knowledge acquisition. Here the participants seem to experience curiosity in relation to a desire to learn more about the context, background, or nature of the thing represented by the violent extremist content. In a study of adolescents in high school (Jovanovic and Brdaric 2012), higher levels of the trait of curiosity were linked with higher levels of life satisfaction and positive well-being, raising questions around the possible value of this emotional trait for the resilience of young people with regard to their experience and consumption of violent extremist content. As one participant expressed: “Sometimes curiosity is the driving force for you to see things because you want to know what’s happening.” Curiosity highlights the disjuncture between the moral panic, the aims of moderation or restriction, and the experiences of young people around the use of such content as a means of fulfilling inquisitive and entertainment needs. As another participant noted: “[Us] young people want to know more about these violent acts, like what’s going in their head. What’s making them do this, was it their childhood? That’s what makes us curious about these videos.”

The attractive power of curiosity raises a concern that social media regulators, in making it more difficult to find and acquire moderated content, may contribute to the movement of young people into more niche platforms where, ironically, exposure to violent extremist content is not only more likely but the social space of exposure is also potentially more extreme.