By PAUL TULLIS

If you were the type of geek, growing up, who enjoyed taking apart mechanical things and putting them back together again, who had your own corner of the garage or the basement filled with electronics and parts of electronics that you endlessly reconfigured, who learned to solder before you could ride a bike, your dream job would be at the Intelligent Systems Center of the Applied Physics Laboratory at Johns Hopkins University. Housed in an indistinct, cream-colored building in a part of Maryland where you can still keep a horse in your back yard, the ISC so elevates geekdom that the first thing you see past the receptionist’s desk is a paradise for the kind of person who isn’t just thrilled by gadgets, but who is compelled to understand how they work.

The reception area of the Intelligent Systems Center at the Johns Hopkins Applied Physics Laboratory. (jhuapl.edu)

It’s called the Innovation Lab, and it features at least six levels of shelving that holds small unmanned aerial vehicles in various stages of assembly. Six more levels of shelves are devoted to clear plastic boxes of the kind you’d buy at The Container Store, full of nuts and bolts and screws and hinges of unusual configurations. A robot arm is attached to a table, like a lamp with a clamp. The bottom half of a four-wheeled robot the size of a golf cart has rolled up next to someone’s desk. Lying about, should the need arise, are power tools, power supplies, oscilloscopes, gyroscopes, and multimeters. Behind a glass wall you can find laser cutters and 3D printers. The Innovation Lab has its own moveable, heavy-duty gantry crane. You would have sufficient autonomy, here at the Intelligent Systems Center of the Applied Physics Laboratory at Johns Hopkins University, not only to decide that your robot ought to have fingernails, but to drive over to CVS and spend some taxpayer dollars on a kit of Lee Press-On Nails, because, dammit, this is America. All this with the knowledge that in an office around the corner, one of your colleagues is right now literally flying a spacecraft over Pluto.

The Applied Physics Laboratory is the nation’s largest university-affiliated research center. Founded in 1942 as part of the war effort, it now houses 7,200 staff working with a budget of $1.52 billion on some 450 acres in 700 labs that provide 3 million square feet of geeking space. When the US government—in particular, the Pentagon—has an engineering challenge, this lab is one of the places it turns to. The Navy’s surface-to-air missile, the Tomahawk land-attack missile, and a satellite-based navigation system that preceded GPS all originated here.

The Applied Physics Laboratory campus at Johns Hopkins University. (APL/LinkedIn)

In 2006, the Defense Advanced Research Projects Agency (DARPA), an arm of the Pentagon with a $3.4 billion budget and an instrumental role in developing military technology, wanted to rethink what was possible in the field of prosthetic limbs. Wars in Iraq and Afghanistan were sending home many soldiers with missing arms and legs, partly because better body armor and improved medical care in the field were keeping more of the severely wounded alive. But prosthetics had not advanced much, in terms of capabilities, from what protruded from the forearm of Captain Hook. Some artificial arms and legs took signals from muscles and converted them into motion. Still, Brock Wester, a biomedical engineer who is a project manager in the Intelligent Systems Group at APL, said, “it was pretty limited in what these could do. Maybe just open and close a couple fingers, maybe do a rotation. But it wasn’t able to reproduce all the loss of function from the injury.”

The Applied Physics Laboratory brought together experts in micro-electronics, software, neural systems, and neural processing, and over 13 years, in a project known as Revolutionizing Prosthetics, they narrowed the gaps and tightened the connections between the next generation of prosthetic fingers and the brain. An early effort involved something called targeted muscle reinnervation. A surgical procedure rerouted nerves that once controlled muscle in the lost limb, so they connected to muscle remaining in a residual segment of the limb or to a nearby location on the body, allowing the user to perceive motion of the prosthetic as naturally as he or she had perceived the movement of his or her living limb.

Another side of the Revolutionizing Prosthetics effort was even more ambitious. People who are quadriplegic have had the connection between their brains and the nerves that activate their muscles severed. Similarly, those with the neuromuscular disorder amyotrophic lateral sclerosis cannot send signals from their brains to the extremities and so have difficulty making motions as they would like, particularly refined motions. What if technology could connect the brain to a prosthetic device or other assistive technology? If the lab could devise a way to acquire signals from the brain, filter out the irrelevant chaff, amplify the useful signal, and convert it into a digital format so it could be analyzed using an advanced form of artificial intelligence known as machine learning—if all that were accomplished, a paralyzed person could control a robotic arm by thought.
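The chain described in that paragraph (acquire the signal, filter out the chaff, amplify, digitize, then decode with machine learning) can be sketched as a toy Python pipeline. Everything here is an illustrative assumption rather than APL's method: the input is a short list of made-up voltages, "filtering" is just baseline (mean) removal, the 1,000x gain and 10-bit converter are arbitrary, and a nearest-centroid classifier stands in for the far more sophisticated decoders used in real brain-computer interfaces. All function names are hypothetical.

```python
from statistics import mean

def remove_baseline(samples):
    """Crude "filter": subtract the window's average so slow drift is discarded."""
    baseline = mean(samples)
    return [s - baseline for s in samples]

def amplify(samples, gain=1000.0):
    """Scale small signals up toward the converter's input range."""
    return [s * gain for s in samples]

def digitize(samples, bits=10, full_scale=1.0):
    """Quantize values in [-full_scale, full_scale] to integer codes 0 .. 2**bits - 1."""
    step = 2 * full_scale / (2 ** bits - 1)
    codes = []
    for s in samples:
        clipped = max(-full_scale, min(full_scale, s))  # out-of-range values saturate
        codes.append(round((clipped + full_scale) / step))
    return codes

def preprocess(raw_volts):
    return digitize(amplify(remove_baseline(raw_volts)))

def features(codes):
    """Summarize a window as (mean code, peak-to-peak range)."""
    return (mean(codes), max(codes) - min(codes))

def train(labeled_windows):
    """Average the features of each label's example windows into one centroid."""
    centroids = {}
    for label, windows in labeled_windows.items():
        feats = [features(preprocess(w)) for w in windows]
        centroids[label] = tuple(mean(f[i] for f in feats) for i in range(2))
    return centroids

def decode(raw_volts, centroids):
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    f = features(preprocess(raw_volts))

    def distance(label):
        return sum((a - b) ** 2 for a, b in zip(f, centroids[label]))

    return min(centroids, key=distance)

centroids = train({
    "rest":  [[0.0001, -0.0001, 0.0001, -0.0001]],   # small fluctuations (volts)
    "grasp": [[0.0005, -0.0005, 0.0005, -0.0005]],   # larger bursts (volts)
})
print(decode([0.00045, -0.00045, 0.0005, -0.0004], centroids))  # prints "grasp"
```

Training on one labeled window per intention and then decoding a new window simply picks whichever intention's summary features it most resembles. Real systems typically work with many channels, many training windows, and often continuous outputs (such as how fast to move an arm) rather than two discrete labels.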

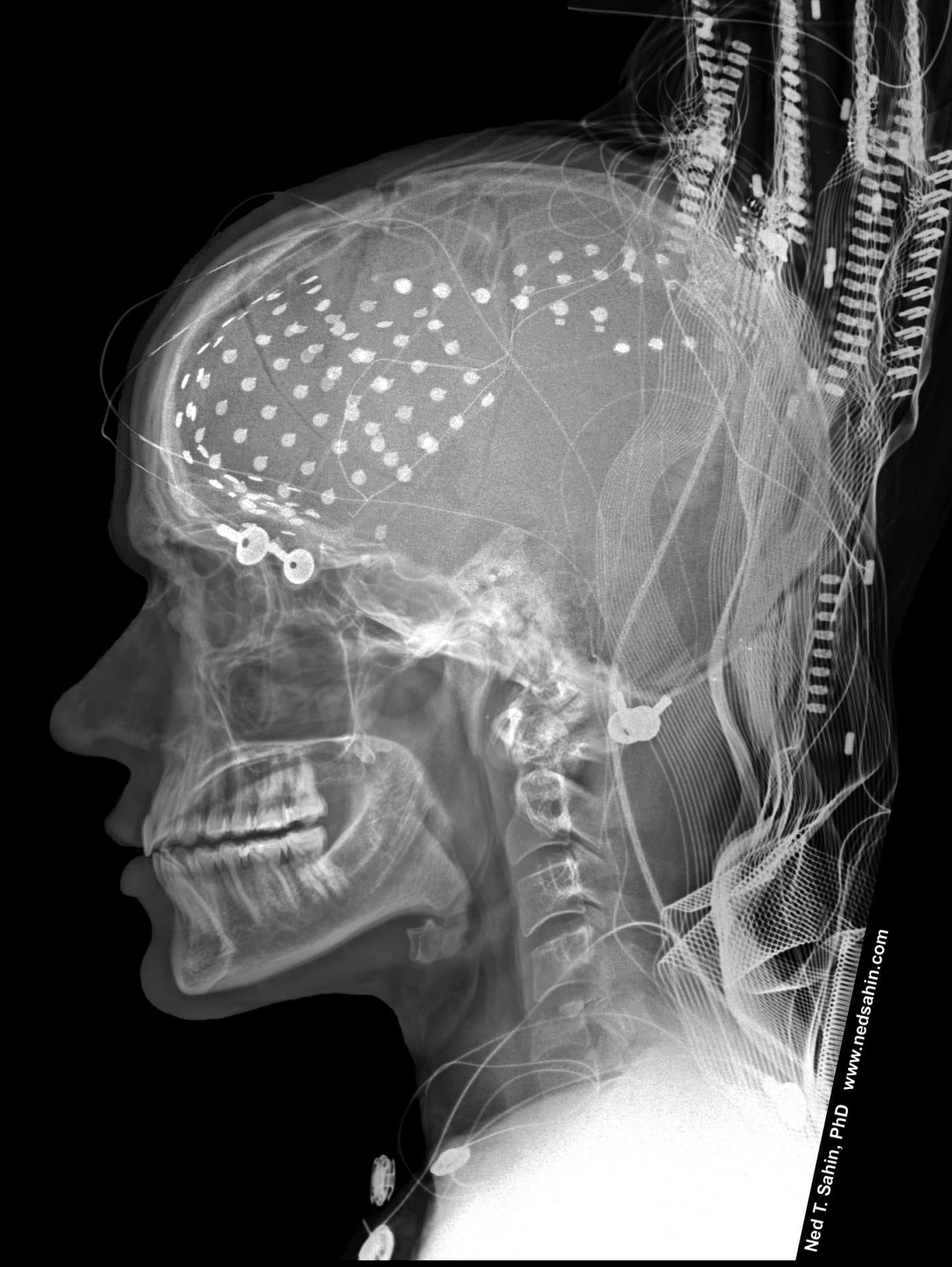

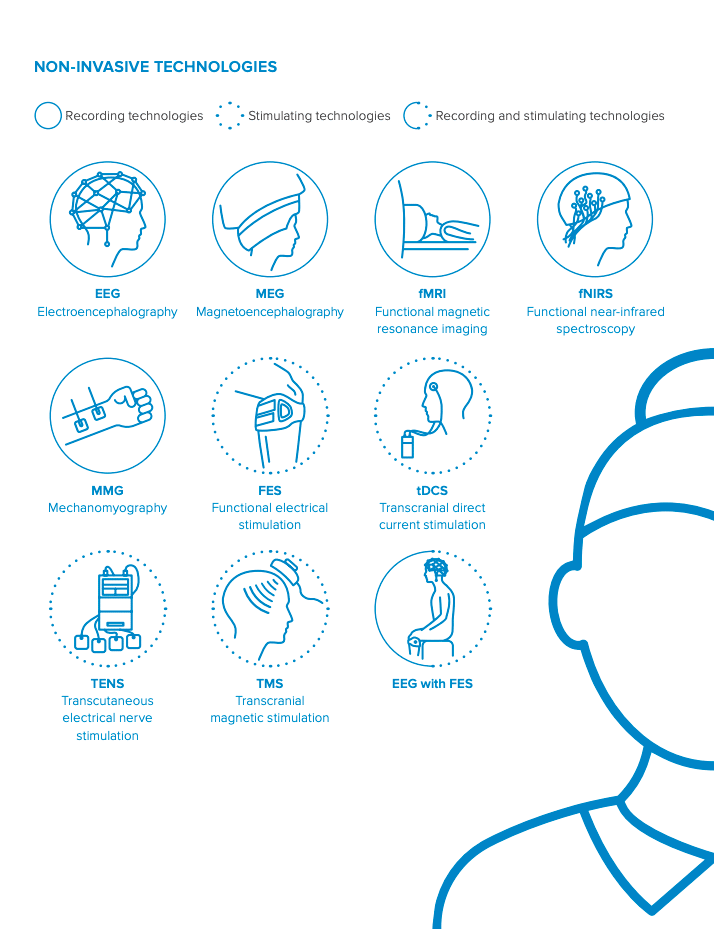

Researchers have tested a variety of so-called “brain-computer interfaces” in the effort to make the thinking connection from human to machine. One method involved electrocorticography (ECoG), a sort of upgrade from the electroencephalography (EEG) used to measure brain electrical activity during attempts to diagnose tumors, seizures, or stroke. But EEG works through sensors arrayed outside the skull; for ECoG, a flat, flexible sheet is placed onto the surface of the brain to collect activity from a large area immediately beneath the sensor sites.

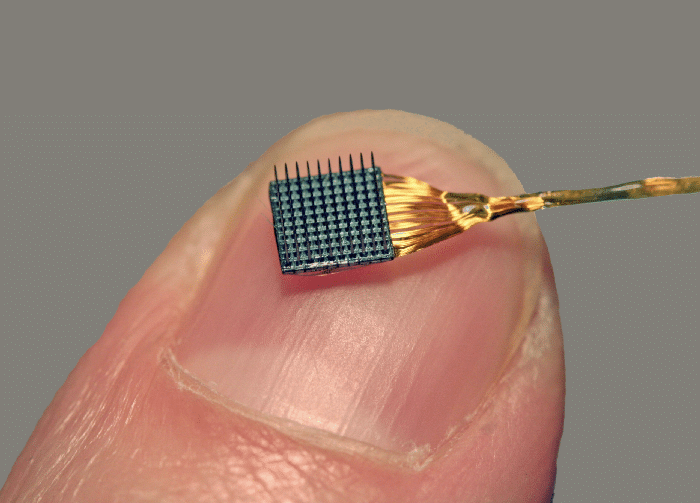

The Utah array. (Stanford University Neural Prosthetics Translational Laboratory)

Another method of gathering electrical signals, a micro-electrode array, also works under the skull, but instead of lying on the surface of the brain, it projects into the organ’s tissue. One of the most successful forms of neural prosthetic yet devised involves a device of this type that is known as a Utah array (so named for its place of origin, the Center for Neural Interfaces at the University of Utah). Less than a square centimeter in size, it looks like a tiny bed of nails. Each of the array’s 96 protrusions is an electrode up to 1.5 millimeters in length that connects to neurons and receives and imparts signals from and to them.

Illustrations from The Royal Society’s iHuman report.

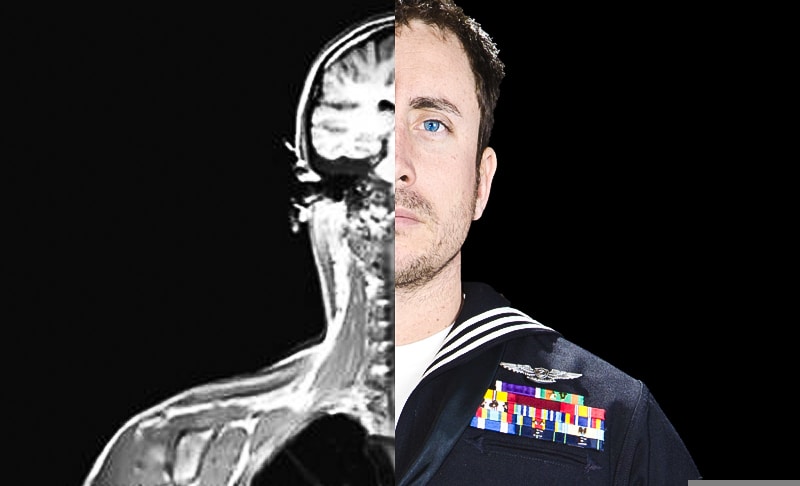

Jan Scheuermann, an experimental subject working with Applied Physics Laboratory partners at the University of Pittsburgh Medical Center, had lost control of her limbs from a rare neurodegenerative disease; she was fitted with a Utah array in 2012. A metal protrusion from her skull connected to wires which (skipping over a few complicated electronic and computer programming steps here) connected to a robotic arm that she learned to instruct to bring a piece of chocolate to her lips.

Nathan Copeland, another experimental subject-participant (it’s a dual role, in this field), was able to regain a sense of touch. In fact, he thought about moving a robotic arm, and the arm fist-bumped with President Obama in 2016. The arm detected the pressure of the president’s fist, digitized it the way an iPhone digitizes an image or a voice, and sent the data to the part of Copeland’s sensory cortex that understood “pressure on knuckles” as such.

One day, instead of connecting Scheuermann’s Utah array to a robotic arm, experimenters hooked it into a flight simulator. By thinking about moving her hand, she was able to get the virtual airplane to pitch and roll—moving over time in three-dimensional space. It suddenly became clear that a person fitted with a brain-computer interface could use it to do a lot more than nibble chocolate. Once the brain signal is in a computer, it can go essentially anywhere, be connected to almost anything. It could be sent to the next room, or go to the moon. It could connect to a flight simulator—or a real airplane, like the unmanned aerial vehicles on the shelves at the Intelligent Systems Center. “Getting information on what neurons are doing, whether you map that to an airplane or an arm or you name it, it doesn’t matter,” said Dave Blodgett, chief scientist for the research and exploratory development department at the Applied Physics Laboratory, who leads a team developing a brain-computer interface.

And what if you could shake the president’s hand using a robot arm controlled by your mind—without the brain surgery part? Then the Pentagon could give it to active soldiers in the field instead of wounded vets returning from it. People who have lost the use of their limbs, like Scheuermann and Copeland, could take it home with them and maybe type again, just using their brains, and move a computer mouse, and so hold a regular office job. Less righteously, maybe people without debilitating diseases or injuries could post status updates to Facebook more rapidly and more conveniently and hence more often, providing more content for the internet behemoth to sell more ads against.

Today, Facebook is in fact one of at least five companies working on a non-invasive, or minimally invasive, brain-computer interface. DARPA, meanwhile, has funded six groups, mostly in academia (including one at the Applied Physics Laboratory), to develop a device capable of sensing and stimulating the brain—reading from it and writing to it—as good as instantly. All have been making slow but, by their accounts, steady progress.

Some envision a day when a device worn in a hat can understand and transmit thoughts. “Think of a universal neural interface you could put on and seamlessly interact with anything in your home environment, and it would just know what you need to do when you need to do it,” said Justin Sanchez, former director of the Biological Technologies Office at DARPA, where he oversaw the Next-Generation Nonsurgical Neurotechnology (N3) program, which provided grants to APL and the other groups to develop a non-invasive interface. Sanchez is now life sciences technical fellow at Battelle Memorial Institute in Columbus, Ohio, one of the N3 participants.

For all its potential benefits, the potential pitfalls of Sanchez’s vision are legion. If there were devices that could measure all the neurons in a brain, they would create privacy issues that make Facebook’s current crop look trivial. Making it easy to fly a military drone via thinking might not be a welcome development in areas of the world that have experienced US military drones flown by hand. Some privileged people could use a non-invasive brain-computer interface to enhance their capabilities, exacerbating existing inequalities. Think of data security: “Whenever something is in a computer, it can be hacked—a BCI is by definition hackable,” said Marcello Ienca, a senior researcher at the Health Ethics & Policy Lab at the Swiss Federal Institute of Technology, in Zurich. “That can reveal very sensitive information from brain signals even if [the device] is unable to read [sophisticated] thoughts.”

Then there are the legal questions: Can the cops make you wear one? What if they have a warrant to connect your brain to a computer? How about a judge? Your commanding officer? How do you keep your Google Nest from sending light bulb ads to your brain every time you think the room is too dark?

A wearable device that can decode the voice in your head is a ways off yet, said Jack Gallant, professor of psychology at the University of California, Berkeley, and a leading expert in cognitive neuroscience. But he also said, “Science marches on. There’s no fundamental physics reason that someday we’re not going to have a non-invasive brain-machine interface. It’s just a matter of time.

“And we have to manage that eventuality.”

In this 1965 experiment, electrodes placed in a young bull's brain allowed Yale University neurophysiologist José Delgado to control its movement via radio transmitter. (The March of Time / Getty Images)

The Pentagon’s interest in fusing humans with computers dates back more than 50 years. J.C.R. Licklider, a psychologist and a pioneer of early computing, wrote a paper proposing “Man-Computer Symbiosis” in 1960; he later became head of the Information Processing Techniques Office at DARPA (then known as ARPA). Even before that, a Yale professor named José Delgado had demonstrated that by implanting electrodes in the brain, he could control some behaviors in mammals. (He most famously demonstrated this with a charging bull.) After some false starts and disappointments in the 1970s, DARPA’s Defense Sciences Office had, by 1999, taken up the idea of augmenting humans with machines. At that time, it boasted more program managers than any other division at the agency. That year, the Brain Machine Interface program was launched with the plan of enabling service members to “communicate by thought alone.” Since then, at least eight DARPA programs have funded research paths aiming to restore memory, treat psychiatric disorders, and more.

First coined by UCLA computer scientist Jacques Vidal in the 1970s, “brain-computer interface” is one of those terms, like “artificial intelligence,” that is used to describe increasingly simple technologies and abilities the more the world of commerce becomes involved with it. (The term is used synonymously with “brain-machine interface.”) Electroencephalography has been used for several years to train patients who have suffered a stroke to regain motor control and more recently for less essential activities, such as playing video games.

EEG paints only broad strokes of brain activity, like a series of satellite images over a hurricane that show the storm moving but not the formation and disruption of individual clouds. Finer resolution can be achieved with electrocorticography, which has been used to locate the short-circuits that cause frequent seizures in patients with severe epilepsy. Where EEG can show the activity of millions of neurons, and ECoG gives aggregate data from a more targeted set, the Utah array reads the activity of a few (or even individual) neurons, enabling a better understanding of intent, though from a smaller area. Such an array can be implanted in the brain for as long as five years, maybe longer.

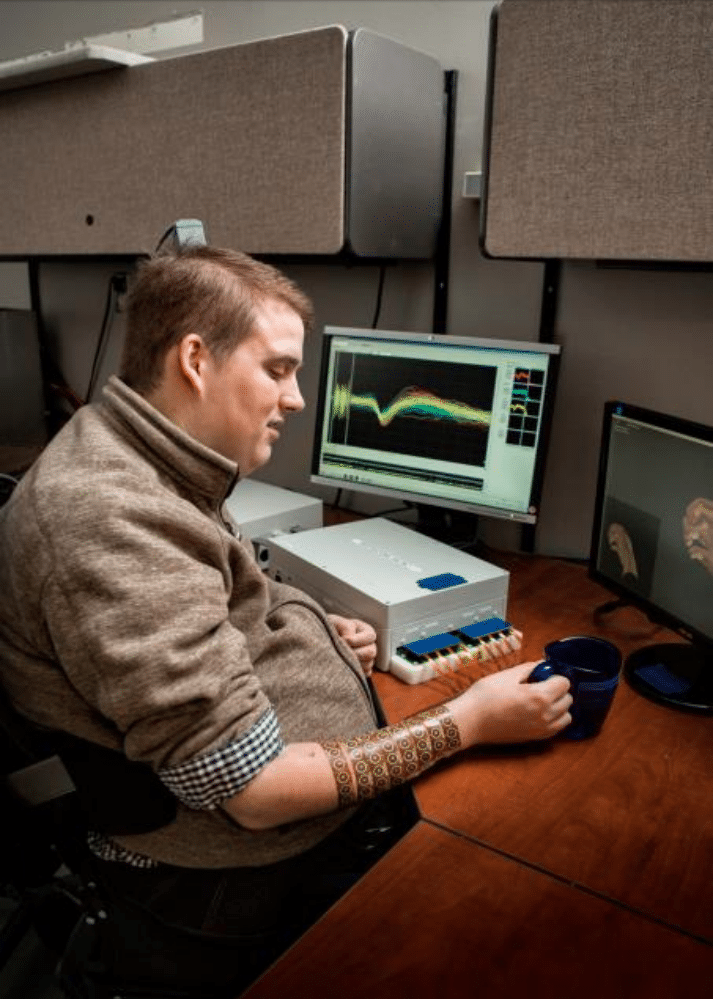

People with severe hypokinetic movement disorders who have had the Utah array—um—installed can move a cursor on a screen or a robotic arm with their thoughts. In August I met Ian Burkhart, one of the first people in the world to regain control of his own muscles through a neural bypass. He was working with scientists on a new kind of brain-computer interface that Battelle, a nonprofit applied science and technology company in Columbus, Ohio, is trying to develop with doctors at Ohio State University’s Wexner Medical Center.

Once you get past the glass-enclosed atrium, the cafeteria, and the conference rooms and enter the domain where the science takes place, Battelle imparts the feeling of a spaceship, as depicted in one of the early Star Wars movies: Long, windowless, beige hallways with people moving purposefully through them and wireless technology required to gain admittance to certain areas. An executive led me to a room where Burkhart was undergoing experiments. For an experiment published in Nature four years ago, after months of training and half an hour or so of setup each session, he could get the Utah array, implanted in his motor cortex, to record his intentions for movement of his arm and hand. A cable connected to a pedestal sticking out of his skull sends the data to a computer, which decodes it using machine-learning algorithms and sends signals to electrodes in a sleeve on Burkhart’s arm. They activate his muscles to perform the intended motion, such as grasping, lifting, and emptying a bottle or swiping a credit card in a peripheral plugged into his laptop. “The first time I was able to open and close my hand,” Burkhart told me, “I remember distinctly looking back to a couple people in the room, like, ‘Okay, I want to take this home with me.’”

Battelle engineer Nicholas Annetta adjusts a cable attached to the Utah microelectrode array inserted in Ian Burkhart's brain. (The Ohio State University Wexner Medical Center)

Signals from an implant in Ian Burkhart's brain are decoded by a computer then sent to a sleeve on his arm, activating muscles he is otherwise unable to control. (Battelle)

DARPA wants a device that is both more powerful and more sensitive than what now exists—and that works from outside the skull. “As AI becomes more pronounced in the military environment, getting humans to interact with more advanced systems, a mouse and keyboard don’t cut it anymore,” said Al Emondi, who as program manager of N3 doled out its $120 million in grants. After 12 months, participants need to show they can sense and stimulate brain activity across 16 independent channels on 16 cubic millimeters of neural tissue, within 50 milliseconds, with 95 percent accuracy, continuously for two hours. Whoever succeeds will next integrate these capabilities in a device no larger than 125 cubic centimeters and test it on animals. Phase 3, starting in 2021, is human testing.
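Emondi's targets amount to a checklist. The Python sketch below encodes the figures quoted above. The `TrialResult` record, its field names, and the reading of "16 channels on 16 cubic millimeters" as an upper bound on the tissue volume the channels cover are assumptions for illustration. DARPA's real evaluation protocol is surely more involved than a handful of comparisons.

```python
from dataclasses import dataclass

# Targets as reported in the article; the names and structure are hypothetical.
MIN_CHANNELS = 16        # independent channels
MAX_TISSUE_MM3 = 16.0    # volume of neural tissue the channels address (assumed upper bound)
MAX_LATENCY_MS = 50.0    # sense/stimulate within 50 milliseconds
MIN_ACCURACY = 0.95      # 95 percent accuracy
MIN_HOURS = 2.0          # continuous operation
MAX_DEVICE_CM3 = 125.0   # size limit for the integrated follow-on device

@dataclass
class TrialResult:
    """Hypothetical summary of one Phase 1 demonstration."""
    channels: int
    tissue_mm3: float
    latency_ms: float
    accuracy: float
    hours: float

def phase1_shortfalls(r: TrialResult) -> list:
    """List each target the trial misses; an empty list means every target was met."""
    misses = []
    if r.channels < MIN_CHANNELS:
        misses.append(f"only {r.channels} channels")
    if r.tissue_mm3 > MAX_TISSUE_MM3:
        misses.append(f"channels spread over {r.tissue_mm3} mm^3")
    if r.latency_ms > MAX_LATENCY_MS:
        misses.append(f"latency {r.latency_ms} ms")
    if r.accuracy < MIN_ACCURACY:
        misses.append(f"accuracy {r.accuracy:.0%}")
    if r.hours < MIN_HOURS:
        misses.append(f"ran only {r.hours} h")
    return misses

def device_fits(volume_cm3: float) -> bool:
    """True if an integrated device is within the 125 cubic centimeter limit."""
    return volume_cm3 <= MAX_DEVICE_CM3

print(phase1_shortfalls(TrialResult(16, 16.0, 42.0, 0.96, 2.0)))  # []
print(phase1_shortfalls(TrialResult(8, 16.0, 80.0, 0.90, 1.5)))   # four shortfalls
```

The point of writing it out is that every condition must hold at once. A system that is fast but inaccurate, or accurate but only for an hour, still fails.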

Emondi’s specifications will be difficult to achieve. Jennifer Collinger, an assistant professor in the department of physical medicine and rehabilitation at the University of Pittsburgh, works with Nathan Copeland (that’s her standing to Copeland’s right when he fist-bumped with Obama). In her office, pinned beside pictures her children drew with their hands, is one Copeland drew by thinking into the robotic arm. She said that when she started on the Revolutionizing Prosthetics program, “everybody was like, ‘Oh, in five or 10 years, this will be everywhere’—and, no.”

A digital image drawn by Nathan Copeland via the electrode array in his brain. (Courtesy Nathan Copeland)

Obstacles to a non-invasive, or minimally invasive, brain-computer interface that can actually do something are many. You need to make your device as small as possible, as flexible as possible, and as biocompatible as possible, which is hard enough. For medical use, to stimulate and sense brain activity—to record intention from the central nervous system and simultaneously deliver it through the peripheral nervous system, like Burkhart’s brain-computer interface does—“is actually very difficult to do, since neural signals are very small,” said Rikky Muller, assistant professor of electrical engineering and computer sciences at UC Berkeley. Few methods can gather a lot of information from the brain quickly enough to operate the thing you connect the brain to—be it a prosthetic arm, a flight simulator, or a drone. The more data you collect, the slower you become, and the faster you get, the less data you can grab. Muller pointed to other problems. To pick just one: “It’s very hard to squeeze a high data rate out of a wireless device, because it costs power you don’t necessarily have when you’re in a power-constrained environment, like inside the human body,” she said.
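Muller's trade-off can be made concrete with simple arithmetic: the raw data rate equals channels times samples per second times bits per sample. The Python sketch below assumes 30,000 samples per second and 16-bit samples. Those values were chosen only for illustration, because the article gives no sampling figures. The 96 channels match the Utah array described earlier, and the 10 Mbit/s wireless budget is likewise hypothetical.

```python
# Back-of-the-envelope data rates for streaming raw samples from an electrode array.
# ASSUMPTIONS: 30,000 samples/s and 16 bits/sample are illustrative, not from the article;
# the 10 Mbit/s wireless link is hypothetical. 96 channels matches the Utah array.

def raw_bitrate(channels, samples_per_s, bits_per_sample):
    """Bits per second produced by streaming every channel's raw samples."""
    return channels * samples_per_s * bits_per_sample

def max_channels(link_bps, samples_per_s, bits_per_sample):
    """How many raw channels a link of the given capacity can carry (whole channels only)."""
    return link_bps // (samples_per_s * bits_per_sample)

utah = raw_bitrate(96, 30_000, 16)
print(f"{utah / 1e6:.2f} Mbit/s")                           # 46.08 Mbit/s
print(f"{utah * 0.050 / 1e6:.3f} Mbit per 50 ms window")    # 2.304 Mbit per 50 ms window
print(max_channels(10_000_000, 30_000, 16), "channels")     # 20 channels
```

Under these assumptions, streaming raw data from a single Utah array would need more than four times the hypothetical link's capacity. That gap illustrates why an implant might have to compress or pre-process signals before transmitting them, at a cost in power and latency.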

In 2017, I attended a session at the Milken Global Conference, a sort of West Coast version of Davos that the Milken Institute, a research institution founded by former financier Michael Milken, holds annually in Beverly Hills. A former executive with Google and Facebook and professor at the MIT Media Lab, Mary Lou Jepsen, spoke about the possibility of an architect using a brain-computer interface to upload blueprints for a building to construction robots directly from his mind, and she later promised kits for developers to mess around with in 2019. She did a TED Talk that’s been viewed more than 800,000 times in which she demonstrates the ability to cast light through a piece of chicken. But the human skull is nothing like a chicken breast. As far as anyone can tell, her technology is still basically nowhere, in terms of current human use. “All the BMIs out there today, nobody wants to use,” said Gallant. “They all suck.”

But they’re getting better. Not very long ago, it was questionable whether it was possible to access 100 neurons and track their activity over time. Matt Angle, who heads a company called Paradromics (which has received DARPA funding) and is trying to develop a minimally invasive brain-computer interface for people with difficulty moving or speaking, said capabilities exist today that have not even been leveraged yet. “You can likely detect when someone is hallucinating by decoding the sensory [data in the brain] and comparing it to an objective microphone or camera,” he said. “You could probably build that today and make meaningful progress on something like schizophrenia.” The last few years have seen an explosion of work in every aspect of neurotechnology—neuroscience, neurosurgery, algorithm-building, micro-electronics. Meanwhile, computers have become fast enough to process that information and translate it into a useful command for a robotic arm or a computer cursor.

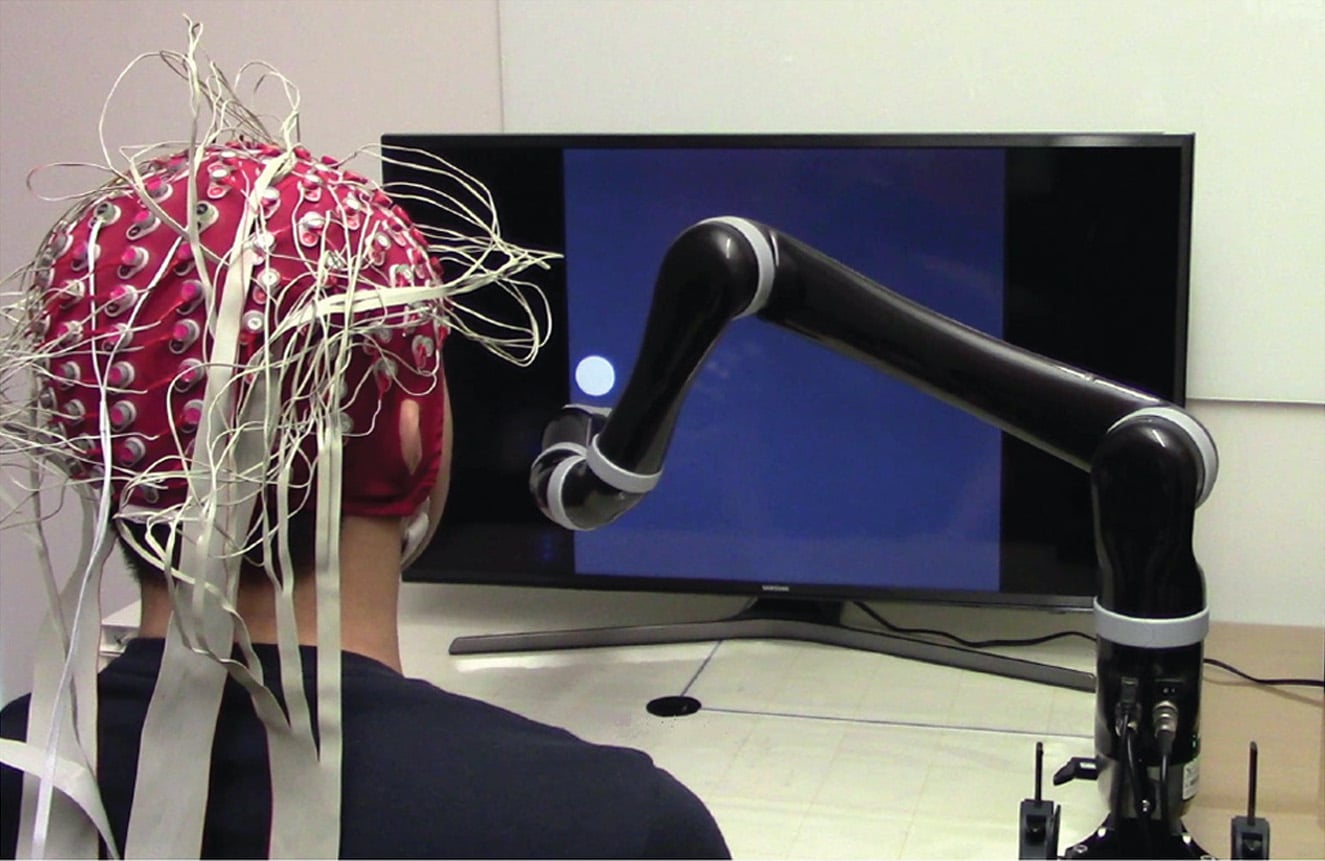

Carnegie Mellon University scientist Bin He developed non-invasive brain interfaces with far greater precision than a decade ago. (Video excerpt from Carnegie Mellon University / YouTube)

Bin He, head of the department of biomedical engineering at Carnegie Mellon University, published a paper last year showing a non-invasive brain-computer interface that could continuously track a computer cursor on a screen without any jangly delays. He told me that when he started working with EEG to decode human intention, “I was a little bit suspicious, and I am an EEG expert.” But his lab can now do things that were “not thinkable” 10 or 20 years ago. “The field has really advanced so much. A lot of things people thought that it’s impossible, things happen and make it possible.”

After Wester and others in the Intelligent Systems Group showed me what their prosthetics could do, I operated (just for fun, with my hands) the flight simulator Scheuermann had worked with her mind. Then I went to another building at the Applied Physics Laboratory where Blodgett showed me the experiment he’s working on for N3.

Blodgett isn’t a neuroscientist; his background is in remote sensing—detecting changes to an area from a distance—and he’s approaching the creation of a wearable brain-computer interface in essentially the same context. The scale is just reduced, from miles to micrometers.

Blodgett proposed to N3 that he would measure changes in the intensity and phase of light returning from neural tissue when a neuron is stimulated. A neuron can be thought of as an electrical cable with a charge running down it; the human brain contains approximately 80 billion of them. When the charge activates, scientists refer to the neuron as “firing.” Neurons are in a binary state; either they’re “on” (firing) or “off” (not firing). A firing neuron creates an electrical field, which can be recorded.
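The on/off picture is what electrode-based systems rely on. In extracellular recordings, a spike typically shows up as a brief, negative-going voltage dip. One common, simple way to find spikes is to flag the samples where the voltage crosses a threshold. The Python sketch below is a generic threshold-crossing detector, not a description of any system in this article. The threshold, the refractory window, and the sample trace are made up.

```python
def detect_spikes(trace, threshold, refractory=3):
    """Return the indices where `trace` first drops to or below -threshold.

    Only downward crossings count, matching the negative-going shape of
    extracellular spikes. After a detection, the next `refractory` samples
    are skipped so that a single spike is not counted twice.
    """
    spikes = []
    skip_until = -1
    for i in range(1, len(trace)):
        if i <= skip_until:
            continue
        # Previous sample above the threshold line, current sample at or below it.
        if trace[i - 1] > -threshold >= trace[i]:
            spikes.append(i)
            skip_until = i + refractory
    return spikes

# Made-up trace (arbitrary units) containing two dips below -4.
trace = [0, -1, -5, -8, -3, 0, 1, 0, -6, -2, 0]
print(detect_spikes(trace, threshold=4))  # [2, 8]
```

Counting crossings this way turns a continuous voltage into the on/off events the paragraph describes. The refractory skip stops the first dip, which stays below the threshold for two samples, from being counted twice.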

When neurons fire, their tissue also swells and contracts. That means their firing can be detected optically, as well as electrically via EEG, ECoG, or a Utah array. This plays to Blodgett’s strengths as a scientist. “I have a system that’s sensitive to tissue motion, and I see tissue motion that corresponds to neural activity,” he told me.

“We know something is going on in neural tissue that you can record optically,” Blodgett said. “People say the electrical signal is the intrinsic neuroactivity signal, but if I can measure the swelling of tissue, which is just as intrinsic, can you build an optical system sensitive to that swelling? I believe what I’m measuring is actually that tissue that’s swelling.

“People hadn’t done that before because the motion of that tissue is so small you can’t detect it using standard optical techniques.”
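Blodgett's emphasis on phase has a simple physical basis. In a reflection-mode interferometer, light travels to a surface and back. Moving the surface by a distance d therefore lengthens the round trip by 2d and shifts the phase by 4πd/λ, where λ is the wavelength. Inverting that relation, as in the Python sketch below, shows that a small fraction of a radian corresponds to nanometer-scale motion. The article does not describe Blodgett's optics, so treat this as a textbook relation under stated assumptions (reflection geometry, refractive index of 1), not a model of his apparatus. The 1,310-nanometer wavelength is an arbitrary example.

```python
import math

def displacement_from_phase(delta_phi_rad, wavelength_m):
    """Surface displacement implied by a phase shift in reflection.

    The round trip is 2*d, so delta_phi = 4*pi*d / wavelength,
    giving d = wavelength * delta_phi / (4*pi).
    ASSUMPTION: refractive index of the medium taken as 1.
    """
    return wavelength_m * delta_phi_rad / (4 * math.pi)

# Hypothetical example: a 1,310 nm source and a 0.01-radian phase change.
d = displacement_from_phase(0.01, 1310e-9)
print(f"{d * 1e9:.3f} nm")
```

With those example numbers, a 0.01-radian phase change corresponds to roughly one nanometer of displacement. That scale suggests why, as Blodgett says, standard optical techniques struggle to see such motion.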

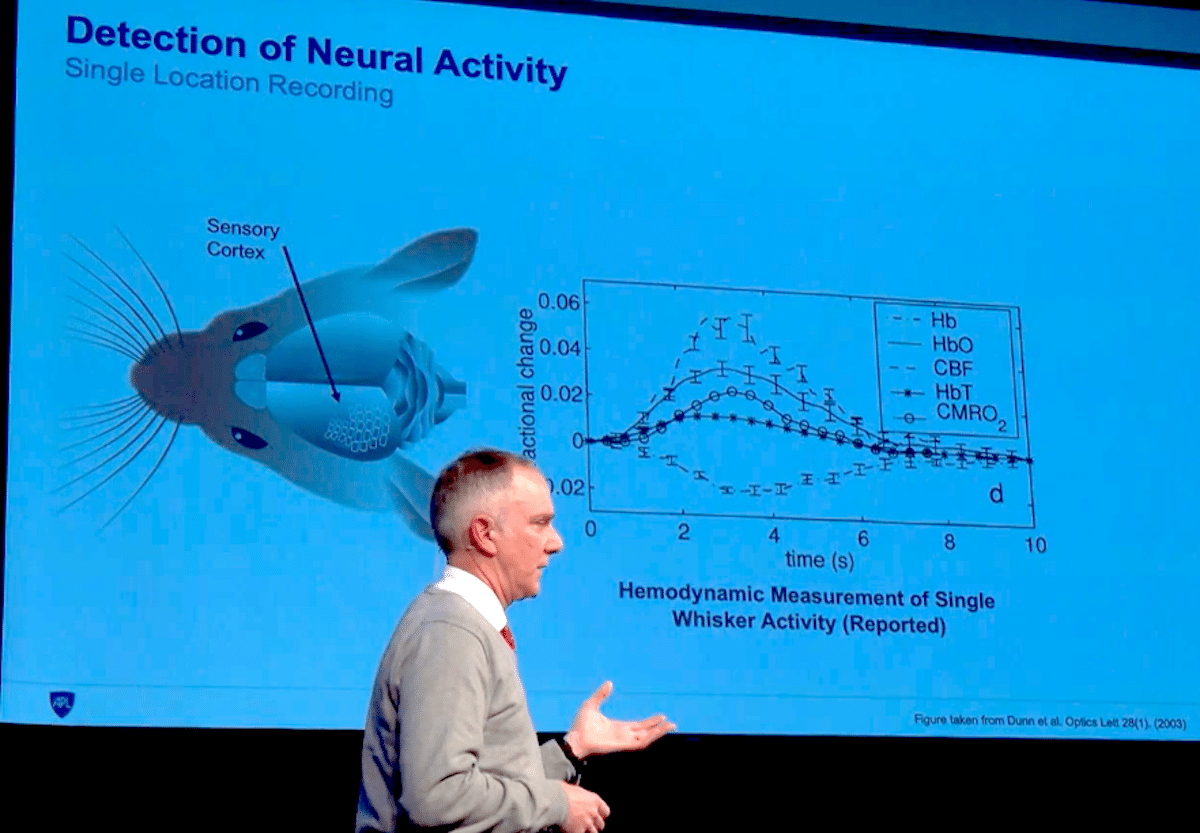

He and his team have already shown, in published research, that his detection system can see the neural activity in the brain of a mouse that corresponds with the flicking of its whisker. Mice have a well-defined region of cortex devoted to their whiskers, with a distinct patch for each whisker. So when one flicks, neurons fire in a distinct location. Blodgett recorded the whisker-flicker neurons firing once for every flick, with only about 100 microseconds of delay in capturing the signal.

Applied Physics Laboratory chief scientist David Blodgett explains his research on optical sensing of neural activity at an MIT event in December 2019. (Video still via MIT Technology Review)

“If you can see neurons fire, that’s amazing right there, and no one’s ever done those kinds of things. So you’re well ahead of what anyone else has done,” Blodgett said.

Battelle researchers are taking a different approach, investigating the possibility of using nanoparticles with magnetic properties to “read” and “write to” a mouse’s brain.

But the human brain is reluctant to give up its secrets: People have these really thick skulls, which scatter light and electrical signals. The scattering properties make it difficult to aim either light or electricity at a specified area of the brain, or to pick up either of those signals from outside the skull. If it weren’t for this anatomical fact, Burkhart and Copeland and Scheuermann would not have needed surgery, and a non-invasive brain-computer interface would already be a reality.

If scientists at APL or Battelle, or the other N3 participants, show they can read from and write to the brain using whatever technique, they will need to use a material with the same optical or electrical properties as a live human skull (a dead skull acts on electricity differently) and see if they can stimulate the brain tissue of experimental animals through it. Maybe one technique to come out of the N3 program will be better than the others at sensing, another best at stimulating, while a third shows it can get through the skull simulator.

Then Emondi could bring the groups together and combine methods for phase three of the process—that is, for human experimentation.

Facebook declined my requests for interviews about its brain-computer interface research. The company could be taking multiple approaches, but one method could come from a startup called CTRL-labs, founded by Thomas Reardon, who developed Microsoft’s first web browser. Facebook bought the company in 2019. Gallant called Reardon’s attempt to amplify the brain’s signal in the muscle rather than detect it in the brain “remarkable.”

Jack Gallant found me wandering cluelessly around a part of the Berkeley campus I had rarely frequented as an undergraduate there, and after we went for coffee, he took me up to his lab, which is unusually bereft of gadgets and equipment for a scientific lab. The guys at the Intelligent Systems Center would be bored out of their minds; it’s all just grad students sitting in front of computers. We found an empty office, and I asked him one question. He talked for an hour virtually without interruption.

“The thing to remember about brain decoding is, it's just information,” he said at one point. There’s a lot of information—he figured 80 billion neurons, a billion of them firing at any given moment, each transmitting five bits of information, that’s 5 billion bits. But all of it is potentially decodable. “And the only thing that prevents you from decoding it is access.” The skull is one inhibitor to access. Understanding the activity is another. Translating it into a computer that can do something with the activity is another. But, Gallant said, these are all obstacles that can be overcome.
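Gallant's rough figure multiplies two of his own round numbers, counting only the neurons he estimates are active at a given moment:

```latex
\underbrace{10^{9}}_{\text{neurons firing}} \times \underbrace{5}_{\text{bits per neuron}}
  = 5 \times 10^{9}\ \text{bits} \approx 625\ \text{megabytes}
```

The 80 billion total does not enter the product; only the billion active at once does.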

Revolutionizing Prosthetics began as a means of restoring the physical abilities of amputees and the paralyzed. Earlier DARPA programs had aimed for “predictable, high-quality brain control.” But after a DARPA-funded surveillance project called Total Information Awareness was made public in the early ’00s, it seemed maybe the Pentagon was getting too creepy, and brain-computer interface efforts were dialed back. Revolutionizing Prosthetics seemed benign—who could argue with such a noble medical use? But the number of people who have been fitted with the product that came out of it is exactly eight, out of more than 1,650 Iraq and Afghanistan vets who lost limbs.

N3’s purpose, Emondi said, is to make warfighters more effective.

DARPA / Jonathan David Chandler

There is a dark side and a light side to the development of many technologies. In the heady early days of the World Wide Web, everyone could become a publisher as potentially influential as established news organizations, amplifying the voices of the marginalized. Little thought was given to the possibility that some of the marginalized would be racists and religious extremists who would use new Web platforms to recruit followers, spread disinformation, harass women, or evangelize terrorism. The Manhattan Project gave us V-J Day and life-saving nuclear medicine; it also gave us Chernobyl and the thousands of existing nuclear weapons, any few hundred of which could end civilization in 30 minutes. The internet was developed to facilitate communications among scientists, and now some use internet-enabled social media like Facebook to coordinate ethnic cleansing.

While there’s certainly nothing wrong with giving disabled people like Burkhart and Copeland greater autonomy, it’s not as clear that providing brain-computer interfaces to soldiers and the wealthy won’t have significant unintended consequences. UC Berkeley’s Muller said “there are so many conditions for which there are not a lot of really effective treatments where neural interface technologies can make an enormous difference in someone’s life, and I think that’s what our goal really needs to be.” Carnegie Mellon’s He, on the other hand, allowed that “everything I do in my lab is trying to develop something that eventually could be used in most people.”

The advancement of brain-computer interfaces doesn’t even need to be intentional. Gallant pointed out that neuroscience research to understand brain function and better treat conditions such as depression and schizophrenia is effectively research toward a better interface between brains and computing. “You can't stop the evolution of brain measurement [for BCIs] unless you want to stop neuroscience and live with the horrible situation we have with mental health disorders,” he said.

As these interfaces progress, a host of ethical, legal, and social questions will arise. Can you delete your thoughts from the computer once the interface has picked them up? “You have these latent desires that you may not have even thought of yet,” Gallant said. “We all have problems with racism or bad attitudes about other humans that we would rather not reveal because we don’t really believe them or we don’t think they should be spoken. But they’re in there.” It’s not hard to imagine hackers gaining access to someone’s thoughts and leveraging them for public humiliation, or blackmail.

What kind of gap will emerge as a few people have access to such powerful devices and many do not? Angle, who heads the Paradromics brain-implant firm, pointed out that wealthy families already pay to cognitively enhance their children. “The higher education system in general is kind of pay-to-play,” he said. “If we really feel strongly about that, let’s solve the problems we have right now and still help people with paralysis.”

The industry and others are looking closely at these issues. EEG expert He was part of the Multi-Council Working Group under the National Institutes of Health’s Brain Initiative, a tech-focused research effort started by the Obama administration. The Royal Society, the independent scientific academy of the UK, put out a 106-page paper last year on brain-computer interfaces, part of which was given over to ethical issues, and scientific journals like Nature have chimed in as well. But given the current partisan divide, and given that at least one US senator demonstrated at a hearing last year that he did not know how Facebook earned its revenue, it seems unlikely Congress will be able to craft effective legislation to regulate interfaces between brains and computing devices. “If we want this going forward, we need the entire society to support it,” He said.

Once the N3 program participants prove the technology, the private sector will no doubt run with it—as happened with ARPANET, which became the internet. “DARPA opened the door, saying ‘Here’s proof of concept,’” said Battelle’s Sanchez, the former DARPA exec. “It’s going to be up to the commercial world to take those advances and make them into whatever the world is going to want. As more people have access, people and industry are going to define what they do with it.”

Justin Sanchez, former director of the Biological Technologies Office at DARPA, in a 2015 video on the future of neurotechnologies. (DARPA)

Not everyone is so thrilled at this prospect. “Any [funding] that has to do with improving human life, making advances in neuroscience, neurotechnology—why should that be distributed through the military?” asked Philipp Kellmeyer, a neurologist and neuroethicist at the Neuromedical AI Lab at the University of Freiburg, in Germany. “That’s just odd.” He noted that another organization with deep pockets, Facebook, has hired more than 100 neuroscientists and engineers for its non-invasive brain-computer interface effort. “Even universities like MIT or Harvard can’t compete with tech companies in keeping these researchers,” Kellmeyer said. “A lot of what is being done now [in the BCI field] is inscrutable, not in the spirit of open science that we’d hope this type of research is conducted. I’m concerned that the space for publicly accountable neurotech research is shrinking.”

Whatever the circumstances of BCIs’ development, there will be demand for them. “Now we just need to get small enough that I can actually use it,” Burkhart said.
