By SYDNEY J. FREEDBERG JR.

Soldiers use their IVAS goggles to view replays and analysis of a training exercise. IVAS is one of a handful of weapons programs GAO says are using private-sector best practices for agile software development.

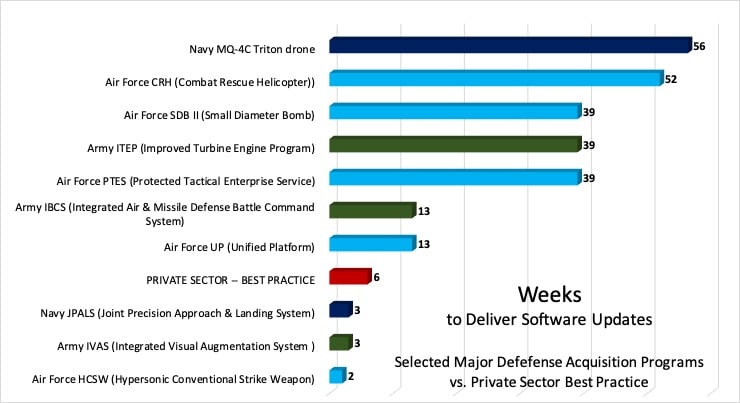

WASHINGTON: As the Pentagon struggles to catch up to Silicon Valley, top officials have loudly embraced the private-sector software development strategy known as “agile.” But in the GAO’s annual survey of 42 major weapons programs, while 22 claimed to be using agile methods, only six actually met the private-sector standard of delivering software updates to users at least once every six weeks.

While GAO didn’t publish this data for all programs, we were able to find it for 10 of them. Of those, just three programs, one of them since cancelled, met the six-week timeline. The other seven had update cycles ranging from three months to more than a year.

Breaking Defense graphic from GAO data

The three standout programs all reported delivering software to end-users – not just internal testers – every two or three weeks:

The Army’s IVAS smart goggles, aka the Integrated Visual Augmentation System, are a militarized version of a commercial product, the Microsoft HoloLens. That makes it much easier to apply private-sector best practices than a traditional weapons program. As we’ve reported, Army soldiers are working side-by-side with Microsoft engineers to field-test the technology and make frequent changes.

By contrast, the Navy’s JPALS, the Joint Precision Approach & Landing System, is a uniquely military product: automated guidance to help pilots land safely amidst dust storms or on the pitching decks of aircraft carriers. It’s also being developed by a traditional defense contractor, Raytheon. But it’s still a package of electronics, making it much more similar to a civilian tech-sector project than developing, say, a combat aircraft or a missile.

Finally, the Air Force’s Hypersonic Conventional Strike Weapon was a missile, one that pushed the bleeding edge of high-speed, high-altitude aeronautics. HCSW (pronounced “hacksaw”) would have been a true test of whether a civilian software-development process really worked in a daunting, unforgiving environment. But it was cancelled in February in favor of a yet more ambitious approach.

The seven programs that tried to be agile but didn’t make the six-week turnaround time were even more diverse. They ranged from the blandly named Unified Platform – basically, a computer hardware and software package for cyber warfare – to the PTES satellite communications terminal, the ITEP engine for Army helicopters and the Navy’s long-range Triton drone.

Two MQ-4C Triton drones on the tarmac.

Why does agile software development matter? Because what modern technology can do – in business or on the battlefield – often depends on software as much as or even more than on hardware. Just as two physically identical iPhones will function very differently depending on which apps the user downloads, two physically identical aircraft may dogfight differently depending on, for example, whether their radar can analyze enemy countermeasures and pick up the true signal amidst the chaff.

Each software update for the F-35, for example, has expanded the capabilities the aircraft boasts. And while it can take years to develop, test, build, and install new radars, missiles, or jet engines, you can update the software in those systems overnight.

But should you? Silicon Valley can push out a bare-bones Minimum Viable Product, get user feedback on what works and what doesn’t, quickly fix reported bugs and then add new features. Military systems are not only more complex than most iPhone apps; they’re also much more likely to get people killed when they go wrong. Terms like “fatal error” and “crash” mean something very different to software developers than to, say, helicopter pilots.

Hear From The Experts

There are areas where the Defense Department can and should adopt the agile methodology, three experts we spoke to all agreed. Where they disagreed was on how well DoD is actually doing and how much farther it should push.

David Berteau

Agile is a double-edged sword, warned David Berteau, a former Pentagon official who now heads the Professional Services Council, an association of IT and services contractors. “Agile in the commercial marketplace is driven by competitive dynamics, including the need to stay ahead of competition, be first to market, go as fast as possible, etc.,” Berteau told me. “The result? Updates that always — always! — need correcting in another week or two.”

“Agile in DoD is driven by a more complex set of dynamics,” Berteau continued. Competition with great-power rivals like Russia or China is very different, and far more dangerous, than even the bitterest fights between, say, Amazon and Microsoft. Budgets are locked in years in advance; laws and regulations make it hard to combine different activities like development, production, and testing; and the high-tech hardware that the software will run on takes years to develop. “Why have the software ready before it can be installed or used?” he asked. “Faster is not always better.”

The GAO report, with its focus on high-profile weapons programs, doesn’t really look at the areas where agile software development is most applicable to the Pentagon, Berteau argued. “The real benefits from agile software updates will come as much if not more from processes, systems, logistics, and support outside MDAPs [Major Defense Acquisition Programs], in ongoing day-to-day operations across DoD,” he said. “In those arenas, the private sector best practice goal may make more sense.”

Bill Greenwalt

By contrast, Bill Greenwalt, a former Hill staffer who wrote many of the acquisition reform laws now in place, was far more enthusiastic about using agile development across the Defense Department.

“The Defense Innovation Board software study was pretty clear that DOD does a poor job of software development, and that there are some real best practices out there to do agile development — none of which DOD as a whole is doing,” he told me.

“There may be some things that cannot be met by agile,” Greenwalt acknowledged. “I don’t think we will be flying planes using the first minimum viable product from an agile software development.” Obviously, you’d run the software in a simulator many, many times before you put test pilots’ lives at stake: “Testing is critical,” he said.

But, Greenwalt argued, the current Pentagon acquisition system isn’t actually great at testing. While commercial software is often pushed out too early, inadequately tested and full of bugs, traditional weapons programs start serious testing too late, when they’ve completed design and development. That results in costly and time-consuming fixes to problems that could have been solved more cheaply and quickly if they’d been discovered earlier on.

“DOD’s traditional way exudes confidence that we already know the end solution,” he said, “[so] testing comes late, and that is when the multitudes of engineering and software changes happen that drive program costs and schedules through the roof.” Far better, he argued, to take the agile approach: humbly admitting you don’t know the best solution when you start, testing early and often, and making changes based on feedback from real users throughout design and development, instead of waiting to give them a completed product at the end.

Andrew Hunter

Can the Pentagon make this work? “Doing agile acquisition, [acquiring] adaptable systems, is hard for DoD,” said Andrew Hunter, head of defense industrial studies at CSIS, a Washington think tank. Given how hard it is for the Pentagon bureaucracy to adopt private-sector practices, he told me, “I actually find the GAO report encouraging.”

“It shows that it can be done, as evidenced by the three cases that are achieving commercial innovation cycles,” Hunter said, pointing to IVAS, JPALS, and HCSW. Nor are the longer timelines for the other seven programs such a discouraging sign, he argued: “Even the more challenging cases in the study are adapting far faster than is typical for DoD, where upgrade cycles are usually measured in years, not weeks.”

“Not every DoD system can or will need to be able to do updates every two to six weeks,” Hunter told me, “but I think we should make sure that programs which must be adaptable are routinely able to do so in two to six months.” That’s a benchmark many more of these programs are actually reaching.
