By SYDNEY J. FREEDBERG JR.

ARMY WAR COLLEGE: Theater commanders around the world want weapons they can see and use right now, the Chairman of the Joint Chiefs told the Army War College. It’s a lot harder, Gen. Joseph Dunford said, to sell experienced senior officers on an untested and intangible capability like Artificial Intelligence.

One week after Dunford’s visit, the Army War College convened two dozen officers and civilian experts to take on that challenge: How do you demonstrate the potential value of a military AI before you actually build it? (The conference was scheduled long before Dunford’s visit, but his words were very much on participants’ minds.) The immediate objective: come up with ways to mimic the effects of an AI so the school’s in-house game designers could turn them into either a computer simulation or a table-top exercise within 10 months, without new money. The hope is that the 2020 game, in turn, will intrigue Army leadership enough that they’ll support a larger, longer-term AI effort.

This squid’s thought process is less alien to you than an artificial intelligence would be.

“We’re trying to influence very senior leaders who don’t have a lot of time,” one participant said. “They just have to see it. Once they see it, the money will follow.” (I followed the Chatham House Rule in covering the event, so no sources are identified by name.) That means, he said, those leaders need a way to visualize how AI might impact future operations. Wargames are a time-honored way for military professionals to see how new technologies might play out before anyone actually builds them, often before it’s even possible to build them.

“We’re trying to influence very senior leaders who don’t have a lot of time,” one participant said. “They just have to see it. Once they see it, the money will follow.” (Sydney followed the Chatham House Rule in covering the event so no sources are identified by name.) That means, he said, those leaders need a way to visualize how AI might impact future operations. Wargames are a time-honored way for military professionals to see how new technologies might play out before you actually build them, often before it’s even possible to build them.

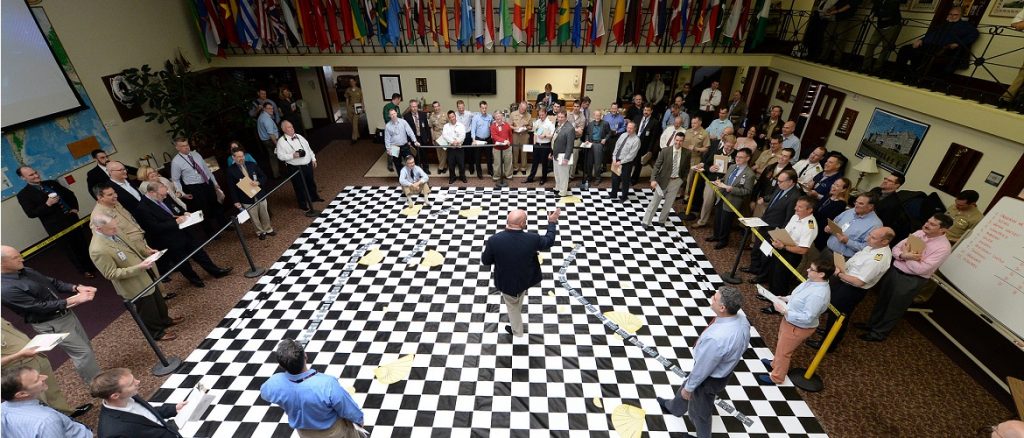

The most famous case is the Naval War College in the 1920s and 1930s, which ran more than 100 games exploring a possible war with Japan, often with officers moving miniature ships around a tiled floor used as a giant gameboard. Admiral Nimitz famously said that these games explored so many different technologies and strategies that “nothing that happened during the [actual] war was a surprise… except the kamikaze tactics.”

But wargaming Artificial Intelligence is a much harder problem. If you’re trying to figure out how a new weapon might be used, the way the Naval War College did with the aircraft carrier in the 1930s, you can add new pieces to your board and new options to your rules: How does the game change if, for example, you let some of your ships launch aircraft that fly x miles to bomb targets with a y percent chance of destroying them?

But AI is not a physical weapon. AI is a machine that thinks. It’s not too hard to change your wargame’s rules to simulate planes that can fly farther, ships that sail faster, tanks that are tougher to kill or satellites that transmit messages faster. But how do you change your game to simulate one side getting smarter?

Harder still: How do human game designers and human players simulate the military decision-making of a non-human artificial intelligence, when AI’s crucial advantage is that it can think of strategies no human ever could?

Reenacting the Battle of Jutland on the famed tiled floor of the Naval War College

Game Changers

The Army War College doesn’t have the time or money to build a superhumanly intelligent AI to play its wargames. Making such a mega-brain is probably years away for anyone. Even high-priority Pentagon AI programs are focusing, for now, on improving technical functions like maintenance, logistics, cybersecurity, electronic warfare, and missile defense — not building robot strategists.

You can’t eliminate this problem, but there are two ways to reduce it. One is to simulate how future super-smart AIs will handle the staggering complexities of the real world by testing how today’s relatively limited AIs handle the limited complexities of a simplified model. The other is to give human players some kind of advantage that helps them out-think their opposition, simulating how warfare changes when one side consistently thinks faster and better.

It’s remarkable how many ways the Army War College conference came up with to simulate AI without using any new technology at all. (I participated as an invited expert, with the Army covering my expenses, so some of these ideas are partially mine.)

US and allied officers play a tabletop wargame at the Command & General Staff College, Fort Leavenworth, KS

Even if your “simulation” is something as simple as two people playing a board game, you can break out a stopwatch or a chess clock to time their moves and give one side more time each turn to simulate how AI can think faster than a human. You could also give one side more players, to simulate how AI can think through more options simultaneously and come up with a wider range of strategies.

What if both sides aren’t seeing the same game board? That’s a common feature both of consumer strategy games, in which each player only sees the parts of the map around their units, and of formal War College exercises, in which each side is in a different room and gets all its information from a neutral umpire. This allows you to simulate AI by giving one side more or better information.

For example, one high-priority application for AI is rapidly collecting, analyzing, and disseminating vast amounts of data — say, video from surveillance drones — that would take human intelligence analysts much longer to plow through. You could simulate that capability by giving one side information faster than the other. Perhaps the umpire tells the humans playing the “AI-enabled” side where the pieces are on the board right now, but it only tells the non-AI team where the pieces were last turn, or two turns ago.
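
For game designers who want to mechanize that intelligence lag in software, here is a minimal Python sketch. It is not anything the Army War College has built: the `LaggedUmpire` class, the side names, and the grid positions are all hypothetical. The idea is simply that the umpire keeps a short history of board snapshots and hands each side the snapshot matching its assigned delay.

```python
from collections import deque
from typing import Dict, Tuple

Position = Tuple[int, int]  # (column, row) on a hypothetical square-grid board


class LaggedUmpire:
    """Hypothetical umpire that reports the board to each side with a per-side delay.

    A lag of 0 means the side sees the current board; a lag of 2 means it sees
    the board as it stood two turns ago.
    """

    def __init__(self, lag_by_side: Dict[str, int]):
        self.lag_by_side = lag_by_side
        max_lag = max(lag_by_side.values())
        # Keep only as many past snapshots as the slowest side needs.
        self.history: deque = deque(maxlen=max_lag + 1)

    def record_turn(self, board: Dict[str, Position]) -> None:
        """Store a copy of the true piece positions at the end of a turn."""
        self.history.append(dict(board))

    def report(self, side: str) -> Dict[str, Position]:
        """Return the most recent snapshot this side is allowed to see."""
        lag = self.lag_by_side[side]
        if lag >= len(self.history):
            return {}  # Not enough turns played yet: this side has no intel.
        return self.history[-1 - lag]
```

With `LaggedUmpire({"ai": 0, "human": 2})`, after three turns the "AI-enabled" side sees where a tank is now, while the other side sees where it was two turns ago.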

You can use a similar kind of lag to simulate how AI might automate a lot of routine staff work. It’s an arduous process to turn a commander’s scheme of maneuver into detailed timetables of what unit takes what route when, what air and artillery support they’ll have on call, when they’ll meet up with supply convoys carrying ammunition, food, or fuel, et cetera ad nauseam. It’s also the kind of work that humans’ hunter-gatherer brains aren’t evolved to do, but which computers are great at. So maybe the side simulating the AI-enabled headquarters can move its pieces on the board right now, but when the non-AI side issues orders, it takes a turn or two before its pieces actually carry them out.
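
The order-delay rule can be sketched just as simply. The Python below is a hypothetical illustration, not a design from the conference: `OrderQueue`, the side names, and the order strings are invented for the example. Each order is filed under the turn on which it takes effect, which is the issuing turn plus that side's delay.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Order = Tuple[str, str]  # (side, order text)


class OrderQueue:
    """Hypothetical rule: an order issued on turn t takes effect on turn t + delay."""

    def __init__(self, delay_by_side: Dict[str, int]):
        self.delay_by_side = delay_by_side
        # Maps execution turn -> orders due that turn, in the order they were issued.
        self.pending: Dict[int, List[Order]] = defaultdict(list)

    def issue(self, side: str, order: str, current_turn: int) -> int:
        """Queue an order and return the turn on which it will take effect."""
        due = current_turn + self.delay_by_side[side]
        self.pending[due].append((side, order))
        return due

    def orders_due(self, turn: int) -> List[Order]:
        """Remove and return every order that takes effect this turn."""
        return self.pending.pop(turn, [])
```

Giving the AI-enabled side a delay of zero and the other side a delay of one or two reproduces the asymmetry described above; pairing it with a lagged intelligence picture gives the combined version.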

Or you could combine both approaches. The side simulating AI gets to see the board and move its pieces then and there, but the non-AI side only knows where its pieces were last turn and can only issue orders for what they’ll do next turn.

The Army also envisions AI being able to transmit intelligence and orders rapidly over secure wide-area networks, letting dispersed command posts work closely together despite the distance. You could simulate this by putting all the players on the AI-enabled team in the same room, while splitting the other team up. The division commander and his staff are in one room, his brigade commanders are each in their own rooms, and any communication has to go through the umpire. Now combine this with lag. All the AI players can communicate freely face-to-face, but if the non-AI commander tells his brigades to do something, they won’t actually receive that order until the next turn.

Lockheed Martin’s third Multi-Domain Command & Control (MDC2) wargame

If you can actually write or modify a computer wargame, instead of doing a pure tabletop exercise, your options get more sophisticated and fiendish. You can put each player at a different screen and manipulate how much information they get, how quickly, and how accurate it is. You can cut off in-game messaging between players to simulate radio jamming, or send false messages to simulate their network getting hacked. You can use crowdsourcing to simulate an AI’s ability to generate a wider range of strategies. The non-AI players can only brainstorm among themselves, but the team simulating the AI can post the game board on the Internet and get hundreds of suggestions for its next move.
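
As one hypothetical illustration of the jamming and hacking options, the Python sketch below routes every in-game message through a channel the game controller can degrade. Nothing here comes from an actual Army or Lockheed Martin system: `MessageChannel`, its probabilities, and the fake message texts are assumptions made for the example.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Message:
    sender: str
    recipient: str
    text: str


class MessageChannel:
    """Hypothetical in-game messaging layer that the game controller can degrade.

    jam_prob: chance each message is silently dropped (simulated radio jamming).
    spoof_prob: chance a delivered message is replaced by a fake (simulated hacking).
    """

    def __init__(self, jam_prob: float = 0.0, spoof_prob: float = 0.0,
                 fake_texts: Optional[List[str]] = None, seed: Optional[int] = None):
        self.jam_prob = jam_prob
        self.spoof_prob = spoof_prob
        self.fake_texts = fake_texts or ["Hold position.", "Withdraw to phase line."]
        # Passing a seed makes the sequence of jams and spoofs replayable.
        self.rng = random.Random(seed)

    def send(self, msg: Message) -> Optional[Message]:
        """Return the message as the recipient receives it, or None if jammed."""
        if self.rng.random() < self.jam_prob:
            return None
        if self.rng.random() < self.spoof_prob:
            # Keep the apparent sender so the fake looks legitimate.
            return Message(msg.sender, msg.recipient, self.rng.choice(self.fake_texts))
        return msg
```

Setting both probabilities to zero for the AI-enabled team and raising them for the other side is one way to tilt the information war without touching the rest of the game.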

Once you put your wargame on a computer, you can also start replacing human players with real AI. Sure, it won’t be as sophisticated as the future AI strategist you’re emulating, but the simulated world it’s playing in isn’t as complicated as the real world, either. And there are a lot of AIs available already you can repurpose to play your particular game. “There’s no need to reinvent game-solving AIs,” said one participant. “That’s already been done — and productized.”

Those AIs are also getting better all the time. If you write your software right — specifically, the Application Programming Interface (API) — then you can plug in new and smarter AIs as they become available. Even in the long run, when you finally develop an AI strategist that could plan an actual battle, you’ll really want to test it in simulation before you stake human lives on its performance.
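
What “writing your software right” might look like is sketched below in Python, under loud assumptions: the `GamePlayer` interface, the `choose_move` method, and the two placeholder bots are hypothetical, not any real product’s API. The point is that the game engine talks to players only through one narrow method, so a smarter AI can be swapped in without changing the game itself.

```python
from typing import Dict, List, Protocol


class GamePlayer(Protocol):
    """Hypothetical interface any AI (or human front end) implements to play."""

    def choose_move(self, observation: Dict[str, object], legal_moves: List[str]) -> str:
        ...


class FirstLegalMoveBot:
    """Trivial placeholder AI: always picks the first legal move."""

    def choose_move(self, observation, legal_moves):
        return legal_moves[0]


class GreedyBot:
    """Slightly smarter placeholder: picks the move the engine scores highest."""

    def choose_move(self, observation, legal_moves):
        scores = observation.get("move_scores", {})
        return max(legal_moves, key=lambda m: scores.get(m, 0.0))


def play_turn(player: GamePlayer, observation: Dict[str, object],
              legal_moves: List[str]) -> str:
    """Ask the plugged-in player for a move and reject anything illegal."""
    move = player.choose_move(observation, legal_moves)
    if move not in legal_moves:
        raise ValueError(f"illegal move from {type(player).__name__}: {move!r}")
    return move
```

Replacing `FirstLegalMoveBot` with `GreedyBot`, or with a far more capable engine later, requires no change to `play_turn`.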