By SYDNEY J. FREEDBERG JR.

ARLINGTON: With the US, Russia, and China all investing in Artificial Intelligence for their armed forces, people often worry the Terminator is going to come to life and kill them. But given the glaring vulnerabilities of AI, maybe the Terminator ought to be afraid of us.

“People are saying, ‘oh my god, autonomy’s coming, Arnold is going to be here, he’s going to be out on the battlefield on the other side,’” said Marine rifleman turned AI expert Mike Kramer. “I don’t believe that. This is an attack surface.”

As Kramer and other experts told the NDIA special operations conference this morning, every time an enemy fields an automated or autonomous system, it will have weak points we can attack – and we can attack them electronically, without ever having to fire a shot.

“If we’re going to have an autonomy fight, have it at their house,” continued Kramer, who now heads the technology & strategy branch of the Pentagon’s Joint Improvised-Threat Defeat Organization (JIDO). “We are attacking the autonomy, not just the platform.”

In other words, if you’re worried about, say, the Russians’ new robotic mini-tank, the much-hyped but underperforming Uran-9, don’t dig in with your bazooka and wait until you can shoot at it. Use hacking, jamming, and deception to confound the algorithms that make it work.

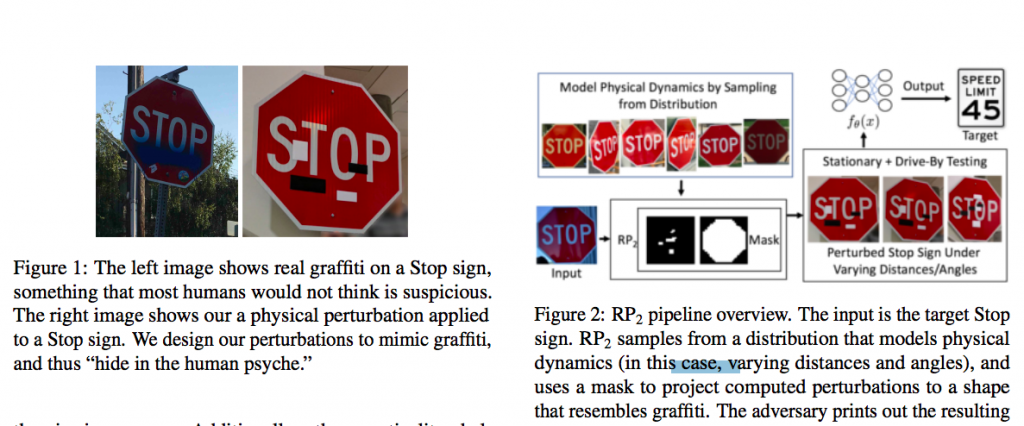

How? Breaking Defense has written extensively about what we call artificial stupidity: the ways algorithms can misinterpret the world in ways no human ever would, because they interpret data in terms of mathematics and logic without instinct, intuition, or common sense. It turns out such artificial stupidity is something you can artificially induce. The most famous example is an experiment in which strategically applied reflective tape caused the AIs used in self-driving cars to misclassify a STOP sign as a speed limit sign.

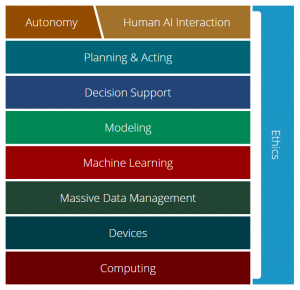

Carnegie Mellon University’s “AI stack” model of the interdependent components of artificial intelligence.

But there are plenty of other avenues of attack, which is what Kramer & co. are talking about when they refer to “attack surface.” At Carnegie Mellon University – home to the Army’s newly created AI Task Force – a former Google VP turned dean of computer science, Andrew Moore, has come up with a simplified model called the AI stack, which shows how getting intelligent output from an AI depends on a whole series of underlying processes and technologies. Planning algorithms need models of how the world works, and those models are built by machine learning, which needs huge amounts of accurate data to hone its algorithms over millions of trials and errors, which in turn depends on having a lot of computing power.

Now, Moore devised the AI stack to help understand how to build a system up. But, his CMU colleague Shane Shaneman told the Special Ops conference this morning, you can also use it to understand how to tear that system down. Like a house of cards or a tower of Jenga blocks, the AI stack collapses if you mess with any single layer.

The more complex and interconnected systems become, Shaneman continued, the more vulnerabilities they offer to attack. A modern Pratt & Whitney jet engine for an F-16 fighter has some 5,000 sensors, he said. “Every one of those can be a potential injection point” for false data or malicious code.
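To make the “injection point” idea concrete, here is a minimal sketch of how one falsified reading can flip a downstream decision. Everything in it is hypothetical: the five sensors, the temperatures, the 900-degree limit, and the `overheat_alarm` function are invented for illustration and do not describe any real engine or monitoring system.

```python
from statistics import mean

# Hypothetical alarm threshold in degrees Celsius (not a real engine spec).
OVERHEAT_LIMIT_C = 900.0


def overheat_alarm(readings_c):
    """Raise the alarm when the average sensor reading exceeds the limit."""
    return mean(readings_c) > OVERHEAT_LIMIT_C


# Five hypothetical sensors on an engine that really is running too hot.
honest = [940.0, 955.0, 948.0, 951.0, 946.0]

# The attacker controls a single sensor and reports a falsely low value.
spoofed = honest.copy()
spoofed[2] = 600.0

print(overheat_alarm(honest))   # True: the average is 948.0
print(overheat_alarm(spoofed))  # False: the average drops to 878.4
```

One corrupted input out of five is enough to silence the alarm here, because the toy logic trusts every sensor equally.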

With strategically placed bits of tape, a team of AI researchers tricked self-driving cars into seeing a STOP sign as a speed limit sign instead.

AI vs. AI

You can use your own artificial intelligence to figure out where the weak points are in the enemy’s AI, Shaneman said: That’s what DARPA’s highly publicized Cyber Grand Challenge in 2016 was all about. The stop sign tampering experiment, likewise, relied on some sophisticated AI analysis to figure out just where to put those simple strips of tape. This is a whole emerging field known as adversarial AI.
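A rough sketch of the principle behind such tampering, under heavy simplifying assumptions: the “classifier” below is a toy linear model with four made-up weights, not a real vision system, and the nudge follows the sign of each weight (the idea behind the fast gradient sign method), which is not necessarily the technique the stop sign researchers used. All names and numbers are illustrative.

```python
def score(weights, bias, pixels):
    """Linear score: positive means the toy model sees a STOP sign."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def classify(weights, bias, pixels):
    return "STOP" if score(weights, bias, pixels) > 0 else "SPEED LIMIT"


def adversarial_nudge(weights, pixels, epsilon):
    """Move each input by at most epsilon in the direction that lowers the score."""
    def sign(w):
        return 1 if w > 0 else -1 if w < 0 else 0
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]


# Hypothetical model parameters and a four-"pixel" image of a stop sign.
weights = [0.9, -0.4, 0.7, -0.6]
bias = -0.2
pixels = [0.6, 0.3, 0.5, 0.4]

tampered = adversarial_nudge(weights, pixels, epsilon=0.15)

print(classify(weights, bias, pixels))    # STOP
print(classify(weights, bias, tampered))  # SPEED LIMIT
```

No input changes by more than 0.15, yet the label flips, because every small change is aimed in exactly the direction the model is most sensitive to.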

Machine learning relies on arcane mathematics, including structures called manifolds, to extract patterns from masses of data. But no nation has a monopoly on math. If an adversary can see enough of the inputs your AI sucks in and the outputs it spits out, they can deduce what your algorithms must be doing in between. It turns into a battle between opposing teams of mathematicians, much like the codebreaking contests of World War II and the Cold War.
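In the simplest possible case, deducing a model from its inputs and outputs takes only a handful of queries. The sketch below assumes the hidden model is purely linear, which real systems rarely are; `secret_model` and its parameters are hypothetical stand-ins, and a real attack on a nonlinear model would need far more queries and yield only an approximation.

```python
def secret_model(x):
    """Black box: the attacker can call it but cannot read its parameters."""
    hidden_weights = [2.0, -1.0, 0.5]
    hidden_bias = 0.25
    return sum(w * v for w, v in zip(hidden_weights, x)) + hidden_bias


def extract_linear_model(black_box, n_inputs):
    """Recover the weights and bias of a linear model from n_inputs + 1 queries."""
    zero = [0.0] * n_inputs
    bias = black_box(zero)  # an all-zero input reveals the bias
    weights = []
    for i in range(n_inputs):
        probe = zero.copy()
        probe[i] = 1.0  # switch on one input at a time
        weights.append(black_box(probe) - bias)
    return weights, bias


stolen_weights, stolen_bias = extract_linear_model(secret_model, 3)
print(stolen_weights, stolen_bias)  # [2.0, -1.0, 0.5] 0.25
```

Four queries are enough to copy this toy model exactly, after which the attacker can study the copy offline.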

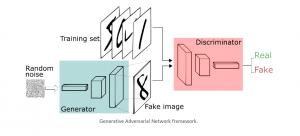

Generative Adversarial Networks (graphic by Thalles Silva)

What’s new, though, is it’s also a battle of AI versus AI. One technique, called generative adversarial networks, basically locks two machine learning systems together in a virtual cage match, each driving the other to evolve more sophisticated algorithms over thousands of bouts. It’s similar to the reinforcement learning system used in DeepMind’s AlphaGo Zero, which played millions of games against itself for 40 days until it could defeat the greatest Go players, human or machine. But generative adversarial networks add another layer. The two opposing AIs aren’t identical, but diametrically opposite – one constantly generates fake data, the other tries to detect the counterfeits. What ensues is a kind of Darwinian contest, a survival of the fittest in which dueling AIs replicate millions of years of evolution on fast-forward.

One lesson from all this research, Shaneman said, is you don’t want your AI to stand still, because then the other side can figure out its weaknesses and optimize against them. What you need, he said, is “algorithmic agility… constantly being [able to] adjust those weights and coefficients.”

Robert Work

The good news is that the required combination of creativity, adaptation, and improvisation is a part of American culture – scientific, entrepreneurial, and even military – that potential adversaries
