Jonathan E. Czarnecki

INTRODUCTION

The concept of command and control is central to modern warfare. Command is a legal and behavioral term referring to a designated individual leader’s responsibility and accountability for everything the leader’s unit of command does and does not do.[1] Control is a regulatory and scientific term denoting the ability to manage that which is commanded.[2] Command and control together is considered a doctrinal operational function of military units.[3] The concept is concrete and essential for all organizations to successfully conduct their business; for the military the primary business is combat operations in contested environments.

This article investigates the use of certain types of command and control in operational environments that overwhelm the ability of commanders to do their primary job: to lead and succeed in operations. It provides perspectives on the ability of military organizations to actually implement command and control and concludes that the nature of current and future operational environments condemns the idea and language of control to obsolescence. Alternative terms that fit and work in those environments may serve better in its place.

Command and control particularly dominates discussion in 21st century warfare because of the importance of information flows within and among military units. Those units with efficient and effective flows, facilitated by good command and control, are more successful in operations than those without.[4] These units are also complex adaptive systems composed of many interrelated parts or individuals and produce synergistic and emergent behaviors not predictable from just aggregating the behaviors of the parts.[5] Good command and control focuses emergent unit behaviors in ways that enhance mission success, thus providing a flexibility combat multiplier to senior headquarters.[6]

The significant challenge with command and control lies with the implications associated with the term control. By itself, control is innocuous; military units as exemplars of organizational systems require regulation of their behaviors to adjust performance to meet standards. Regulation of this sort, common to all systems, can be negative or dampening feedback (as with prohibiting certain actions, like the Laws of Armed Conflict prohibiting the shooting of prisoners of war) or positive and amplifying feedback (like encouraging unit competition to exceed performance standards). A thermostat is a classic example of regulation by negative feedback, in this case of temperature in a specific environment. Without adequate regulation, any system can find itself either inert (too much negative feedback) or chaotic (too much positive feedback) in its operating environment. Just think of what happens when one’s home thermostat goes on the blink to get an idea of non-regulation of temperature. For military units, if a commander does not know what his or her unit is doing or where it is, that commander likely has lost that unit’s capability to contribute to completion of the mission. Unfortunately, the modern battlespace imposes severe complications for control, particularly that form associated with stereotypical military martinets: strict or positive control. To adjust to these complications, researchers and practitioners have identified and developed several different forms of control. Depending on which research one reads, there are also several taxonomies to describe these forms. This article relies on the four-quadrant taxonomy of organizational control described by Charles Perrow in Complex Organizations: A Critical Essay.[7]
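The difference between dampening and amplifying feedback described above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not a model of any real control system: the function name `simulate`, the set point of 20 degrees, the starting temperature of 15 degrees, and the gain values are all hypothetical. A positive gain moves the temperature toward the set point (negative feedback); a negative gain pushes it away (positive feedback).

```python
def simulate(gain, setpoint=20.0, start=15.0, steps=20):
    """Return the temperature history of a toy thermostat loop.

    Each step applies a correction proportional to the current error.
    gain > 0 shrinks the error (negative, dampening feedback);
    gain < 0 grows the error (positive, amplifying feedback).
    All values here are hypothetical and chosen only for illustration.
    """
    temp = start
    history = [temp]
    for _ in range(steps):
        error = setpoint - temp      # how far the system is from the standard
        temp = temp + gain * error   # correction applied by the regulator
        history.append(temp)
    return history


if __name__ == "__main__":
    damped = simulate(gain=0.5)      # error halves at every step
    runaway = simulate(gain=-0.5)    # error grows by half at every step
    print("final error with dampening feedback: ", abs(damped[-1] - 20.0))
    print("final error with amplifying feedback:", abs(runaway[-1] - 20.0))
```

With dampening feedback the error shrinks toward zero, while with amplifying feedback it grows without bound, which is the "chaotic" case the paragraph warns about.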

Perrow’s Taxonomy (Adapted)

The taxonomy describes organizational task interaction in which one task proceeds directly from another as linear, as in manufacturing production lines. Complex interaction involves tasks that may occur simultaneously or indirectly affect others, as in dealing with a significant organizational strategic change. The taxonomy also describes the possible organizational system structures using a coupling perspective developed by Karl Weick, where coupling refers to the “degree to which actions in one part of the system directly and immediately affect other parts.”[8] Loose coupling in organizations enhances a quality called resilience, or the ability of a system to continue operating after incurring a certain degree of damage. Tight coupling in organizations enhances efficiency, saves time, and promotes rapid decision making in highly predictable environments.[9] Control is centralized or decentralized within each quadrant. Perrow believes that military operational environments should fall into the quadrant that has complex interactions and loose coupling. In this case, he suggests decentralization of control is best.[10] The challenge for military organizations is to be prepared to operate in the complex interaction/loose coupling environment while regulating organizational behavior to comport with relevant laws and norms of societal behavior.

While an expert like Perrow might perceive decentralization of authority or control as best for systems typified as loosely coupled with complex interactions, others may perceive the challenge presented by operational environments as requiring centralized control. Contemporary American military thought holds it axiomatic that planning is centralized while execution is decentralized. Since a military operation involves both planning and execution, it is reasonable to ask, “Where does centralization of control stop and decentralization start?” For example, a military master like Frederick the Great of Prussia considered both planning and execution to be centralized, and he trained his army to excel under these circumstances. Alternatively, another military master, Napoleon Bonaparte at his peak, depended on centralized planning and decentralized execution, including independent initiative perhaps unforeseen by his planning.[11] Finally, the case of Ulysses Grant of the American Army during the American Civil War illustrates the fluidity of a master’s thinking about control. When Grant took over command—and control—of the Union Armies, he developed through centralized planning a three-pronged strategy to go on the offensive against the Confederacy. That strategy failed in execution. Rebounding from that failure, he then decentralized his control, leaving planning and execution to his theater commanders while he planned and oversaw execution only in the Eastern theater. This approach, combined with getting the right generals in the right positions, worked, and the Union won the war.[12]

From left to right: “Frederick after the Battle of Kolin” painted by Julius Schrader, “Bonaparte at the Siege of Toulon” painted by Edouard Detaille, and General Grant at the Battle of Cold Harbor in 1864 photographed by Edgar Guy Fawx (Wikimedia)

These three examples serve to illustrate the phenomenon of decentralized versus centralized control. There seems to be an underlying continuum that enables different forms of control to succeed or fail at different times and places. That continuum is the operational environment in which the operation is planned and executed. Briefly stated, the operating environment is the defining variable that should determine the utility of a form of control. Frederick’s environment was relatively small in size, and simple in structure: soldiers wore clearly defined uniforms and did their work relatively separate from the indigenous populace (on battlefields). Frederick’s methods of command and control also were simple, relying on a variety of physical means (signal flags, sounds, etc.). Napoleon’s environment was much larger and varied in terms of natural and political geography; his armies were also larger and more varied, and the places they did their work included some population centers. Napoleon’s methods of command and control generally were the same as Frederick’s. Grant’s environment was the largest and most varied of the three; his armies frequently operated in both isolated areas and population centers. However, he did have rapid physical methods of command and control (railroads), and an emerging electromagnetic method (telegraphy).

American command and control doctrine appears to recognize the changing and changeable nature of the operating environment and its critical impact on control. The Joint Staff’s overarching publication on the matter, Joint Publication 0-2, Unified Action Armed Forces, states:

…technological advances increase the potential for superiors, once focused solely on the strategic and operational decision-making, to assert themselves at the tactical level. While this will be their prerogative, decentralized execution remains a basic C2 tenet of joint operations. The level of control used will depend on the nature of the operation or task, the risk or priority of its success, and the associated comfort level of the commander.[13]

The experience of American military forces in actual recent campaigns, however, seems to sacrifice the decentralized execution aspect for highly centralized command and control.[14]

DISCUSSION

Military operational environments are more than just doctrinal slogans included in updated publications. They are real, and real in their effects on any efforts to control them. Hence, there is an inherent interplay between command and control functions and systems and the operational environment in which they are supposed to work. If a specific command and control approach does not fit its operational environment, it will likely not work well or will fail altogether. In this section, the article examines this interplay from three perspectives. The first, from classic cybernetics behavioral theory, is Ashby’s Law of Requisite Variety; this constitutes a scientific perspective. The second is from the statistical control field, Deming’s axioms on process control; this provides a managerial perspective. Third and finally, the perspective of modern military thinkers, particularly John Boyd and the highly correlated thinking of the Department of Defense’s Command, Control, Communications, Computers, Intelligence, Surveillance and Reconnaissance (C4ISR) Cooperative Research Program (CCRP), adds a specific organizational sector (military) touch to the discussion.

Ashby’s Law

W. Ross Ashby, pioneering cyberneticist and psychologist, attempted to develop underlying general and objective laws of thinking that would facilitate the idea and design for artificial intelligence.[15] His Design for a Brain systematically elucidated a series of axioms, theorems, and laws to provide a mechanistic portrait of how the brain works while still enabling adaptive behavior. His deductive and highly mathematical approach provided clear descriptions of system behavior that previously had only been verbally discussed. Ashby’s most powerful and original deduction was his Law of Requisite Variety. According to some observers, this result is the only one from the discipline of cybernetics to attain the status of a scientific law.[16] Mathematically stated, Ashby’s law is:

V(E) > V(D) - V(R) - K [17]

Where:

V is a function of variety

E is system or process (operational) environment

D is a disturbance to be regulated

R is the regulation

K can be considered friction or entropy

What Ashby’s law means in the abstract is that only variety can regulate or destroy variety; regulation or control variety must equal or exceed the disturbance variety. It directly relates control (regulation) with environment and that which one seeks to control (disturbances). Since the disturbance originates in the environment, the regulation or control must be as robust as the environment or, more precisely, have as much variety or varied behavior as the environment. Ashby’s law clearly describes why control systems in anything from home thermostats to aircraft ailerons work: the variety built into the controls meets or exceeds that of possible environmental disturbances. Ashby’s Law also helps one understand why certain control systems do not work as promised. One classic ecological example illustrates this point. Rachel Carson, in her book, Silent Spring, described the insidious and destructive effects of the application of DDT (dichlorodiphenyltrichloroethane) to the physical environment.[18] DDT was a chemical pesticide used to control mosquito and other flying insect pest populations. The only variety available to DDT users was the amount and the timing of application. The relevant population for the users was the insect population. However, the pesticide’s effects were not limited to the insect population. DDT was and is a persistent and poisonous agent that works its way upward through food chains. Varying amount and timing did not and cannot account for this environmental disturbance. The variety in the physical environment exceeded that of the control agent.
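The counting argument behind requisite variety can be illustrated with a toy regulation game. The sketch below is an illustration under stated assumptions, not Ashby’s own formulation and not a direct implementation of the equation above: the outcome rule `(d + r) % n_disturbances`, the function name `min_outcome_variety`, and the choice of six disturbances are all hypothetical. It searches every possible regulator strategy by brute force and reports the smallest number of distinct outcomes the regulator can hold the system to.

```python
from itertools import product


def min_outcome_variety(n_disturbances: int, n_responses: int) -> int:
    """Smallest number of distinct outcomes any regulator strategy can achieve.

    Assumed toy game (hypothetical, for illustration only):
      - the environment picks a disturbance d in range(n_disturbances);
      - the regulator answers with a response r in range(n_responses);
      - the outcome is (d + r) % n_disturbances.
    A strategy assigns one response to each disturbance. The function
    tries every strategy and keeps the one with the fewest outcomes.
    """
    best = n_disturbances
    for strategy in product(range(n_responses), repeat=n_disturbances):
        outcomes = {(d + r) % n_disturbances for d, r in enumerate(strategy)}
        best = min(best, len(outcomes))
    return best


if __name__ == "__main__":
    # Six possible disturbances; the regulator's repertoire grows from 1 to 6.
    for n_responses in (1, 2, 3, 6):
        print(n_responses, "responses ->",
              min_outcome_variety(6, n_responses), "outcomes at best")
```

In this toy game the regulator can pin the system to a single outcome only when it has as many responses as there are disturbances; with a smaller repertoire some variety always leaks through, which is the DDT situation in miniature.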

For militaries, Ashby’s Law provides a mathematical metaphor for what commanders attempt to do with their units, always in some operational environment (in garrison or deployed). Unfortunately for military command and control practitioners, there is a potential added factor: the inherently interactive and dynamic nature of the opposition and environment of war. The United States Army War College has captured this nature in four words (Volatility, Uncertainty, Complexity, Ambiguity) summarized in the acronym VUCA.[19] As strategic thinkers from time immemorial have noted, war occurs between two opponents, each of whom constantly adjusts and adapts to specific circumstances; Clausewitz aptly described war as a wrestling match in which nothing ever is constant.[20] Not only do control systems have to match the complexity of the starting or initial opposition and operational environment, they must match the changing patterns and behaviors of both over time and space. Using Perrow’s taxonomy as a guide, command faces opposition and environments that demand shifts, unexpectedly and unpredictably, in the appropriate type of command and control. Sometimes decentralization may be appropriate, sometimes not; sometimes a mix of controls may be necessary, sometimes only one. Because of war’s dynamic and uncertain nature, one cannot predict and set controls in advance. The conditions Ashby set out in his equation cannot be met; the disturbance (or opposition) and the environment are too large for any regulation to affect. Putting it analogously, the unit’s thermostat (command) cannot control its performance.

Deming’s Statistical Control Theory

W. Edwards Deming was a founding father of the American quality control movement. An engineer, mathematician, and physicist by education, Deming earned his first note of fame as a consultant to Japanese industry after the end of World War II. He introduced the concept of statistical quality control to the Japanese, emphasizing the importance of quality built in to products and minimizing the need for inspections after production. He demonstrated such a business strategy would reduce overall costs, mainly through eliminating after-the-fact fixes to defective products, and would establish enduring supplier-customer relationships mitigating uncertainties for both production and consumption. The validity of Deming’s ideas can be found in the tremendously profitable Japanese invasion of multiple products into the United States culminating in the 1980s, when Japanese products established themselves as the high quality, high reliability alternative to American products, and in the reverence Japanese industry and government held for Deming, evidenced by the naming of the Japanese quality control award after him.[21]

Professor Deming and Toyota President Fukio Nakagawa at the Deming Prize award ceremony in 1965 (The W. Edwards Deming Institute Blog)

Reception of Deming’s ideas in the United States is mixed. American industry and government attended his lectures and short courses by the tens of thousands, yet implementation of his “System of Profound Knowledge,” considered by advocates of the Deming approach to be essential for excellent and continuous quality improvement, was and is spotty.[22] Interpretations of Deming such as Total Quality Management and Management by Objectives, minus the substantive methodology, often have ended up as merely new management fads; they come and they go.[23] Yet, Deming’s “System of Profound Knowledge” remains as valid as it was and is for the Japanese: the primary element of the system is that one can only manage what one knows. He stated this system in four principles:

Appreciation of a system: understanding the overall processes involving suppliers, producers, and customers (or recipients) of goods and services (explained below);

Knowledge of variation: the range and causes of variation in quality, and use of statistical sampling in measurements;

Theory of knowledge: the concepts explaining knowledge and the limits of what can be known;

Knowledge of psychology: concepts of human nature.[24]

Readers may note this article addresses control in systems, as described by Perrow and Ackoff, variation of control as described by Ashby’s Law, and what the limits are to that which can be known. Psychology, or the variation of human control, is addressed in the following section. Deming expounds on his system by stating fourteen principles that make it work. All bear on the issue of control in system environments:

Create constancy of purpose toward improvement of a product and service with a plan to become competitive and stay in business. Decide to whom top management is responsible.

Adopt the new philosophy. We are in a new economic age. We can no longer live with commonly accepted levels of delays, mistakes, defective materials, and defective workmanship.

Cease dependence on mass inspection. Require, instead, statistical evidence that quality is built in. (prevent defects instead of detect defects.)

End of the practice of awarding business on the basis of price tag. Instead, depend on meaningful measures of quality along with price. Eliminate suppliers that cannot qualify with statistical evidence of quality.

Find Problems. It is a management’s job to work continually on the system (design, incoming materials, composition of material, maintenance, improvement of machine, training, supervision, retraining)

Institute modern methods of training on the job

The responsibility of the foreman must be to change from sheer numbers to quality… [which] will automatically improve productivity. Management must prepare to take immediate action on reports from the foremen concerning barriers such as inherent defects, machines not maintained, poor tools, and fuzzy operational definitions.

Drive out fear, so that everyone may work effectively for the company.

Break down barriers between departments. People in research, design, sales and production must work as a team to foresee problems of production that may be encountered with various materials and specifications.

Eliminate numerical goals, posters, slogans for the workforce, asking for new levels of productivity without providing methods.

Eliminate work standards that prescribe numerical quotas.

Remove barriers that stand between the hourly worker and his right of pride of workmanship.

Institute a vigorous program of education and retraining.

Create a structure in top management that will push every day on the above 13 points.[25]

When these points and principles are taken together, they bring to the forefront a critical control idea: one must know—in a deep epistemological way—what one is measuring for control and why it is important to measure it, always ensuring the measurement is across all elements of the specific process (input, process, output, enablers), not just the process itself.[26]

If one cannot deeply know the system or process, according to Deming, any improvement to the system merely is random. Control is illusory.[27] Can military leaders and forces deeply know their operating environment? All the information technology advances incorporated in the armed forces have that in mind. Yet, the attentive military researcher knows that even with the best of these formidable technologies, the deep knowledge required for control proves elusive. In the dramatic case of Operation ANACONDA in Afghanistan during 2002, the concentration of all available intelligence, surveillance, and reconnaissance capabilities on a small, fixed geographic area underestimated enemy strength by 100 percent, mis-located the enemy’s positions, and failed to identify enemy heavy artillery hiding in plain sight. Only after human intelligence directly observed the operational physical environment were the mistakes identified. Even then, the operation was executed as planned, using the mistaken intelligence as a baseline rather than adjusting for the corrected intelligence. Obviously, there was no valid quality control for the operation.[28]

Strategic Thoughts of John Boyd and CCRP

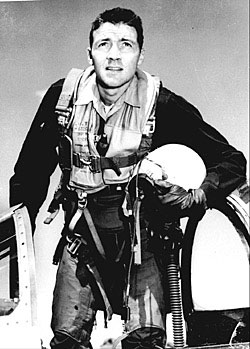

The third and last perspective on control is military and human. Two influential contributors to command and control thinking in this perspective are John Boyd and the collected work of the Department of Defense’s C4ISR Cooperative Research Program (CCRP).[29] John Boyd was the American intellectual father of maneuver theory.[30] The community of CCRP is the birthplace for Network Centric Warfare concepts.[31] The former emphasized the need for focus on the human; the latter continues to promote the possibilities of technological revolution in warfare. Each used and uses different methods to argue their respective cases, Boyd from history and the force of the dialectic, and the C4ISR Cooperative Research Program from the scientific method. However, both converge in one area: the central role of information in the command and control of military forces.

John Boyd during the Korean War (Wikimedia)

Boyd’s second briefing is titled “Organic Design for Command and Control.” In it he explored the reasons for then-recent operational and exercise failures. He differed from then-conventional ideas that the solution to such disasters was to increase the situational awareness of commanders and troops through increased information bandwidth and channels, arguing that an alternative solution lay in the “implicit nature of human beings.”[32] What Boyd had realized was that the dual nature of information, data plus meaning, required different but strongly related solutions to any problems it presented. Data information problems are amenable to technological approaches, like increasing bandwidth and channels; problems of what the data means are impervious to technology and require focus on human behavior. In warfare, these two problem areas converge in the human leadership of units, or command and control. Boyd recognized the issue of information in command and control is intrinsically part of an environmental problem, involving environments both external and internal to the unit.[33] To have effective command and control, which to Boyd meant having appropriately quick decision cycles while imposing slow ones on the enemy, meant 1) achieving implicit harmony among friendly forces with the initiative to take advantage of fleeting opportunities and 2) addressing imminent threats.[34] For Boyd,

Command and control must permit one to direct and shape what is to be done as well as permit one to modify that direction and shaping by assessing what is being done…Control must provide assessment of what is being done also in a clear unambiguous way. In this sense, control must not interact nor interfere with systems but must ascertain (not shape) the character/nature of what is being done.[35]

Boyd realized his conception of control was not conventional and sought a substitute phrase to better describe what he meant. He first suggested monitoring, but then settled on appreciation, because it “includes the recognition of worth or value and the idea of clear perception as well as the ability to monitor. Moreover, next, it is difficult to believe that leadership can even exist without appreciation.”[36] Without quibbling over his choice of phrases to describe his desired form of control, Boyd saw that conventional or positive control was dysfunctional to a fine-tuned decision cycle. In uncertain and dangerous external environments, in which the internal cohesion of an organization is vital to ensure protection of the organization’s boundaries (think of force protection), implicit command and control guarantees members of the organization can take appropriate action quickly without relying on explicit orders or commands. Boyd’s approach would guide an organization along the edge of behavior between chaos and stasis, both of which mean organizational (and, in battle, personal) death.[37]

The C4ISR Cooperative Research Program research most applicable to the issue of command and control is found in Alberts and Hayes, Power to the Edge: Command and Control in the Information Age.[38] The authors note command and control encompasses all four information domains of Network Centric Warfare theory: physical, information, cognitive, and social.[39] This is close to the levels of combat Boyd recognized two decades earlier: cognitive, physical, and moral. Equally important, Alberts and Hayes note command and control is not about who decides or how the task is accomplished, but about the nature of the tasks themselves. From a work study perspective, this means the roles and norms for organizational members are the key to understanding what makes good or bad command and control.[40] Again, Alberts and Hayes align themselves with Boyd in stressing the human dimension of control. Their research finds six prevailing types of successful command and control approaches ranging from centralized to decentralized:

Cyclic

Interventionist

Problem-Solving

Problem-Bounding

Selective Control

Control Free

The factors that determine their effective use are:

Warfighting environment–from static (trench warfare) to mobile (maneuver warfare);

Continuity of communications across echelon (from cyclic to continuous);

Volume and quality of information moving across echelon and function;

Professional competence of the decision-makers (senior officers at all levels of command) and their forces; and

Degree of creativity and initiative the decision-makers in the force, particularly the subordinate commanders, can be expected to exercise.[41]

Generally, static warfighting environments with communications continuity that includes volumes of quality information, or those characterized as more certain or knowable, are conducive to the application of the more centralized command and control approaches where orders are routinely published at specific time intervals with great detail. Fluid warfighting environments with discontinuous available communications including questionable information, coupled with a professional force of competent decision-makers who are trained in a creative and innovative professional culture, are amenable to decentralized command and control. In the latter case, control as an integral part of command becomes, as Boyd would put it, invisible.

Alberts and Hayes note modern external military operating environments, with the internal professional environments of armed forces, tend to the need for decentralized approaches:

The Information Age force will require agility in all warfare domains, none more important than the cognitive and social domains. The Strategic Corporal must be recruited, trained, and empowered.[42]

Alberts and Hayes go on to identify the desired characteristics of command and control systems for these environments (robustness, resiliency, responsiveness, flexibility, innovation, adaptation), to which one easily could add redundancy (as opposed to duplication).[43] They then propose their command and control solution, providing power to the edge, where edge refers to the boundaries of an information-age organization, those farthest from the information center. In industrial age organizations, these would be production-line workers or the field bureaucrats of some large public sector bureaucracy. Information age organizations that have eliminated the tyranny of distance as a barrier to communications and information have members at the edge who may be very high ranking; the edge in this instance refers to the edge of idea implementation and formulation.[44] In such organizations, control becomes collaboration.[45] Old-fashioned control as regulation (as ensuring meeting pre-ordained criteria, as passing files inspections, as meeting phase-line objectives but not surpassing them) dissolves into obsolescence.

SUMMARY

Two major points emerge from this discussion. The first is the vital and dynamic role of the operating environment in developing command and control approaches. The second is the improbability of control working in current and foreseeable operating environments.

Modern operating environments have the habit of reacting to military force in indeterminate and unpredictable ways. Liberate a country and become occupiers. Feed the poor and get sucked into inter-gang feuds. Broker a peace and watch the peacekeeping force get butchered.[46] These and others demonstrate to an extent the effects of Jay Forrester’s Law of Counterintuitive Behavior of Complex Systems, the unintended consequences of war so well described by Hagan and Bickerton, and by extension the Heisenberg Uncertainty Principle wherein the observer influences the event under investigation.[47] Good intentions can lead to bad results. What is more, one cannot ignore the environment; it must be dealt with, but it is too complex to control in the classic sense. Finally, one cannot leave the operating environment without yielding to defeat. In some cases, defeat may be the least costly option.

As the American Nobel Laureate Physicist, John Archibald Wheeler, has put it, “we live in a participatory universe.”[48] One must learn to adjust to the pushback from the complex systems one wishes to adjust.

This last observation directly leads to the second point, that control as traditionally defined and still too often implemented cannot accomplish what it sets out to do. The operating environment is too varied and too varying for Ashby’s Law to enable effective regulation or control. Control in the Industrial Age sense of managing work efficiency through measurement always fails in this military operating environment. If control is to have any meaning or relevance to the complex, adaptive operating environments of armed forces, it must be radically re-conceived in ways that approach the thinking of Deming, Alberts and Hayes, and Boyd—control that is invisible, passive, and harmonizing from the core to the edge.

CONCLUSIONS

This paper has examined command and control from the perspective of the idea of control in operational environments. Three perspectives have shed light on the limits of traditional concepts of control in military organizations and their operational environments. Command and control is a term that has only been in existence since the height of the Industrial Revolution, around the turn of the 19th century; one conclusion of this paper is that the utility of traditional control in the military sense has run its course.

A depiction of the relative frequency with which “command and control” appears in the corpus of English-language texts from 1800-2000. (Google Ngram)

Addressing operational environments, always influential in wartime, has become a central concern if an armed force is to be successful in accomplishing its strategic mission(s). These environments are complex, adaptive, uncertain, and ambiguous. They do not yield to positive control, nor does it seem they ever can; their complexity undercuts human and organizational capability to manage them.

Once the operational environmental concept extends beyond the straightforward exigencies of direct combat—to the effects of human and physical terrain on unit behaviors, to humanitarian or peace operations, to counterinsurgency—the idea of control in almost any guise becomes a poor joke played on those closest to the action, the members of the armed forces directly engaged. For the United States, the joke has been played on its armed forces far too many times in recent decades. There has been too much effort by senior leaders, civilian and military, to make war into their own image rather than accept war for what it is. In their efforts to control the image of war, these leaders have deluded themselves and their subordinates. Senior leaders still appear to believe they are adjusting a kind of military thermostat. This is a delusion. The delusion has proven tragically costly.

Rather than redefine the idea of control, it is time to dispose of the word, with all its baggage, at least from the military arts. Instead, if one wishes to retain the acronym C2, make of it Command and Coordination or Command and Collaboration. Perhaps monitoring or Boyd’s appreciation might work. It is better to face the 21st Century operational environment of war and warfare with a fresh and real face.

Jonathan E. Czarnecki is a Naval War College Professor at the Naval Postgraduate School. The opinions expressed in this essay are the author’s alone and do not reflect the official position of the Department of the Navy, the Department of Defense, or the U.S. Government.

Header Image: Soldiers from the 10th Mountain Division, participating in Operation Anaconda, prepare to dig into fighting positions after a day of reacting to enemy fire. (Spc. David Marck Jr./U.S. Army Photo)

NOTES:

[1] For a good summary of command responsibility, see Major Michael L. Smidt, “Yamashita, Medina and Beyond: Command Responsibility in Contemporary Military Operations,” Military Law Review, Volume 164, pp 155-234, 2000. For this instance, see pp. 164-165.

[2] United States Army Field Manual (FM) 6-0, Mission Command: Command and Control of Army Forces, Washington: Headquarters, Department of the Army, 2003. Chapter 3, page 3-1.

[3] United States Joint Publication (JPUB) 3-0, Joint Operations, Washington: Joint Chiefs of Staff, 17 January 2017, p. III-2.

[4] Joseph Olmstead, Battle Staff Integration, Alexandria, Virginia: Institute for Defense Analyses Paper P-2560, February, 1992. Chapter V.

[5] Margaret J. Wheatley, Leadership and the New Science, San Francisco: Berrett-Koehler Publishers, 1992, Chapter 5.

[6] United States Marine Corps Doctrine Publication 6, Command and Control, Washington: Headquarters, United States Marine Corps, 4 October 1994. See pages 44-47.

[7] Third Edition, New York: McGraw-Hill. 1986. See pages 149-150.

[8] Karl Weick and Kathleen Sutcliffe, Managing the Unexpected, San Francisco: Jossey-Bass, 2007. Page 91.

[9] Perrow, page 148.

[10] Ibid, page 150.

[11] Frederick The Great, The King of Prussia’s Military Instruction to His Generals, found at http://www.au.af.mil/au/awc/awcgate/readings/fred_instructions.htm, accessed 26 September 2018. Concerning Napoleon’s approach, read Steven T. Ross, “Napoleon and Maneuver Warfare,” United States Air Force Academy Harmon Lecture #28, 1985, found at http://www.au.af.mil/au/awc/awcgate/usafa/harmon28.pdf, accessed 26 September 2018.

[12] Russell F. Weigley, The American Way of War, Bloomington, Indiana: Indiana University Press, 1973, Chapter 7.

[13] Joint Publication 0-2, Unified Action Armed Forces; Washington: Joint Chiefs of Staff, 10 July 2001. Page III-13.

[14] The intrusion of senior civilian and military leadership into the actual and detailed planning and execution of military operations long has been observed within the American military experience. Vietnam has been the most recorded case; for example read H.R. McMaster, Dereliction of Duty: Johnson, McNamara, the Joint Chiefs, and the Lies that led to Vietnam; New York: Harper Perennial, 1998. Many readers may argue that Operation IRAQI FREEDOM may place a close second to Vietnam. For example, read Michael Gordon and Bernard Trainor, COBRA II: the Inside Story of the Invasion and Occupation of Iraq; New York: Vintage, 2008; and Thomas Ricks, Fiasco: The American Military Adventure in Iraq; New York: Penguin, 2007. Those are only two illustrations of many cases that stretch back into the 19th Century. For a classic look at the phenomenon, read Russell Weigley, The American Way of War: A History of United States Strategy and Military Policy; Bloomington, Indiana: Indiana University Press, 1977. Finally, for a very recent report on an old war, the Korean Conflict, that illustrates the great danger of misapplying command and control, read the late David Halberstam’s, The Coldest Winter: America and the Korean War; New York: Hyperion Books, 2008.

[15] W. Ross Ashby, Design for a Brain; New York: John Wiley & Sons, 1960. Pages v-vi.

[16] See website of Principia Cybernetica, http://pespmc1.vub.ac.be/REQVAR.html, accessed March 8, 2008.

[17] Ibid. Also, see Ashby, page 229.

[18] Rachel Carson, Silent Spring; Boston: Houghton-Mifflin, 1962.

[19] http://usawc.libanswers.com/faq/84869 accessed 1 October 2018.

[20] Christopher Bassford, “Clausewitz and his Works,” located at https://www.clausewitz.com/readings/Bassford/Cworks/Works.htm accessed 1 Oct 2018, under “On War.”

[21] https://deming.org/deming/deming-the-man accessed 6 October 2018.

[22] https://deming.org/explore/so-pk accessed 6 October 2018.

[23] http://www.teachspace.org/personal/research/management/index.html accessed 6 October 2018.

[24] W. Edwards Deming, Out of the Crisis; Cambridge, Massachusetts: Massachusetts Institute of Technology Center for Advanced Engineering Study, 1986. Chapter 1.

[25] Ibid, Chapter 2. Note that the 14th point has undergone revision since first espoused. Here the current statement is used; in Deming’s book, the statement reads, “Put everyone in the company to work to accomplish the transformation. The transformation is everybody’s job.” Page 24.

[26] Ibid, Chapter 11. In this chapter, Deming provides several methodological ways to know what one is measuring, as well as several misuses of the same methodologies.

[27] For those of us who attended Deming classes, the “red-white bead” experiment is a classic illustration of not knowing a process, yet demanding improvement. A version of this experiment is described in Chapter 11, ibid.

[28] An excellent account of Operation ANACONDA can be found in Sean Naylor, Not A Good Day To Die: The Untold Story of Operation ANACONDA; Berkeley, California: Berkley Books, 2006.

[29] To avoid weighing down the text with a terribly cumbersome acronym, C4ISR stands for Command, Control, Communications, Computers, Intelligence, Surveillance and Reconnaissance.

[30] For such a tremendous influence on modern strategic theory, John Boyd has very little written and published. His magnum opus was a collection of four extended briefings with notes, the conduct of which took upwards of fourteen (14) hours to complete. Those collected briefings, titled A Discourse on Winning and Losing, constitute the vast majority of Boyd’s strategic ideas. The version used here is from August, 1987, reproduced by John Boyd for the author in 1990.

[31] The C4ISR Cooperative Research Program, following its name and mission from the Department of Defense, has published numerous books and articles. The source CCRP document on Network Centric Warfare is David S. Alberts, John J. Garstka, and Frederick P. Stein, Network Centric Warfare: Developing and Leveraging Information Superiority, 2nd Edition (revised); Washington: DoD CCRP, August, 1999. It is to the credit of this organization that most of their publications are available to the public, at no charge, via their website, www.dodccrp.org.

[32] Boyd, “Organic Design for Command and Control,” in Discourse on Winning and Losing, unpublished, May, 1987, page 3.

[33] Ibid., page 20.

[34] Boyd’s Decision Cycle or Loop, the Observation-Orientation-Decision-Act or OODA Loop, has become quite the standard by which one can adjudge military efficiency and effectiveness. It is found originally formulated in his first briefing, “Patterns of Conflict,” within the same Discourse set, page 131. One of the major drawbacks of applying OODA Cycles to organizations is that the Cycle reflects an individual cycle, not an organization’s cycle. Be that as it may, there are other theoretical models, all empirically found valid and reliable, that can be used for organizations. For example, Schein’s Adaptive-Coping Cycle, found in Edgar Schein, Organizational Culture and Leadership; San Francisco: Jossey-Bass, 1991.

[35] “Organic Design….,” page 31.

[36] Ibid., page 32.

[37] The comparison between stasis and chaos, and the edge referred to, was first technically described in Langton, Chris G. 1990, “Computation at the Edge of Chaos: Phase Transitions and Emergent Computation,” Physica D: Nonlinear Phenomena 42, no. 1-3 (June): pages 12-37.

[38] David S. Alberts and Richard E. Hayes, Power to the Edge: Command… Control… In the Information Age; Washington: Department of Defense C4ISR Cooperative Research Program, 2005.

[39] Ibid., page 14.

[40] Ibid, page 17. Also to study how work should be organized, consider Elliott Jaques, Requisite Organization; Green Cove Springs, Florida: Cason Hall & Company, 1989.

[41] Power to the Edge… pages 19-20.

[42] Ibid. page 69.

[43] Ibid., page 126.

[44] Ibid., pages 176-177.

[45] Ibid., this is a summary of Chapter 11 as applied to control.

[46] The three examples of counterintuitive behavior are Iraq, 2003 in the first case; Somalia, 1992-3 in the second; and Rwanda, 1994 in the third case.

[47] Forrester’s Law is found in a classic systems theory article, Jay W. Forrester, "Counterintuitive Behavior of Social Systems", Technology Review, Vol. 73, No. 3, Jan. 1971, pp. 52-68. Kenneth Hagan and Ian Bickerton’s extraordinary exploration into the effects of war is found in their Unintended Consequences: The United States at War; London: Reaktion Books, 2007. Heisenberg’s Uncertainty Principle and extensions can be found at the American Institute of Physics website, http://www.aip.org/history/heisenberg/p08.htm, accessed March 9, 2008; though it applies formally to only the subatomic realm of quantum physics, the analogy is cogent.

[48] John Archibald Wheeler, “Information, Physics, Quantum: The Search for Links,” In Wojciech H. Zurek (editor), Complexity, Entropy, and the Physics of Information; Boulder, Colorado: Westview Press, 1990. Pages 3-28. The phrase, participatory universe, appears on page 5.
