The evolution of search

Over the last few years there’s been an important paradigm shift in ‘search’ and understanding the end user has been crucial to evolving the search experience.

Historically ‘search’ was simply matching keywords from a search box to text indexed in a database. The resulting output was a series of blue links, which might or might not reflect what the user was actually trying to find.

Since then ‘search’ has matured, moving beyond keywords to recognising concepts, providing answers and offering personalised suggestions. By employing a dialogue based experience, search engines attempt to understand the intent of users to return results that are much more relevant.

What’s a dialogue model?

Rather than viewing search queries as individual and unrelated, a dialogue model combines queries (occurring within a period of time) into ‘sessions’.

Adopting a holistic (session based) view, each subsequent interaction combines to build a more informed understanding of the user’s intent (a Bayesian feedback system).
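As a rough illustration of how queries might be combined into sessions, the sketch below groups a query log by inactivity gap. The 30 minute threshold, the `group_into_sessions` name and the sample log are assumptions made for this example, not a description of how any particular search engine does it.

```python
from datetime import datetime, timedelta

# Hypothetical threshold: a pause longer than this starts a new session.
SESSION_GAP = timedelta(minutes=30)


def group_into_sessions(queries, gap=SESSION_GAP):
    """Group (timestamp, query_text) pairs into sessions by inactivity gap."""
    sessions = []
    for timestamp, text in sorted(queries, key=lambda q: q[0]):
        # Start a new session for the first query, or when the pause since
        # the previous query exceeds the allowed gap.
        if not sessions or timestamp - sessions[-1][-1][0] > gap:
            sessions.append([])
        sessions[-1].append((timestamp, text))
    return sessions


# Illustrative log only; the timestamps are made up.
log = [
    (datetime(2017, 1, 1, 9, 0), "iggy pop"),
    (datetime(2017, 1, 1, 9, 1), "how old"),
    (datetime(2017, 1, 1, 9, 2), "how tall"),
    (datetime(2017, 1, 1, 14, 0), "restaurants richmond"),
]
sessions = group_into_sessions(log)
print([[text for _, text in session] for session in sessions])
```

With this log, the first three queries land in one session and the afternoon query starts a second one. A time gap is only one possible boundary signal; a change of topic could be another.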

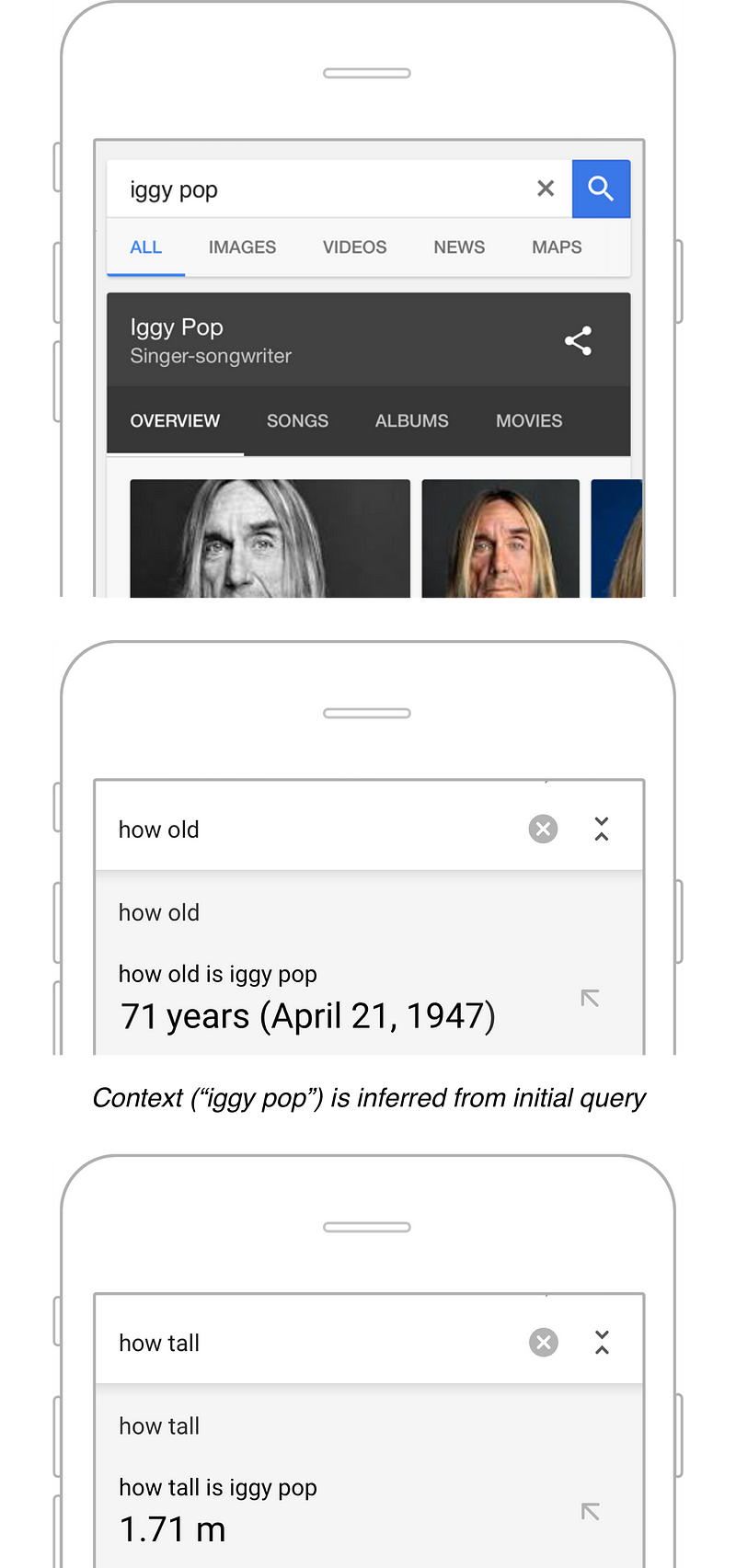

For example, having initially searched for a person (see below), subsequent queries (regarding age and height) are assumed to be contextual to that person. This reduces user overhead, creating a frictionless dialogue between the user and the search engine.

As simple as this seems, it requires the search engine to know that age and height are attributes of a person entity (more on this later).
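A minimal sketch of that idea, assuming a tiny hand-written lookup in place of a real knowledge graph: the session remembers the last entity searched for, and a follow-up query is only treated as contextual if its attribute belongs to that entity's type. All of the names and tables here (`ENTITY_ATTRIBUTES`, `KNOWN_ENTITIES`, `ATTRIBUTE_PHRASES`, `interpret`) are hypothetical.

```python
# Hypothetical lookup tables standing in for a real knowledge graph.
ENTITY_ATTRIBUTES = {"person": {"age", "height"}, "city": {"population"}}
KNOWN_ENTITIES = {"iggy pop": "person", "melbourne": "city"}
ATTRIBUTE_PHRASES = {"how old": "age", "how tall": "height"}


def interpret(query, context):
    """Return (entity, attribute) for a query, using the session context.

    `context` is a plain dict that remembers the last entity searched for.
    """
    q = query.lower().strip()
    if q in KNOWN_ENTITIES:
        context["entity"] = q  # a new entity becomes the topic of the session
        return (q, None)
    attribute = ATTRIBUTE_PHRASES.get(q)
    entity = context.get("entity")
    # Only carry context forward if the attribute makes sense for the entity type.
    if attribute and entity and attribute in ENTITY_ATTRIBUTES[KNOWN_ENTITIES[entity]]:
        return (entity, attribute)
    return (None, None)  # no contextual interpretation available


session = {}
print(interpret("Iggy Pop", session))
print(interpret("how tall", session))
```

Here ‘how tall’ resolves to the height of the person searched for a moment earlier. Had the previous search been for a city, the same follow-up would not be linked, because height is not listed as a city attribute in this toy data.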

Continuing the conversation

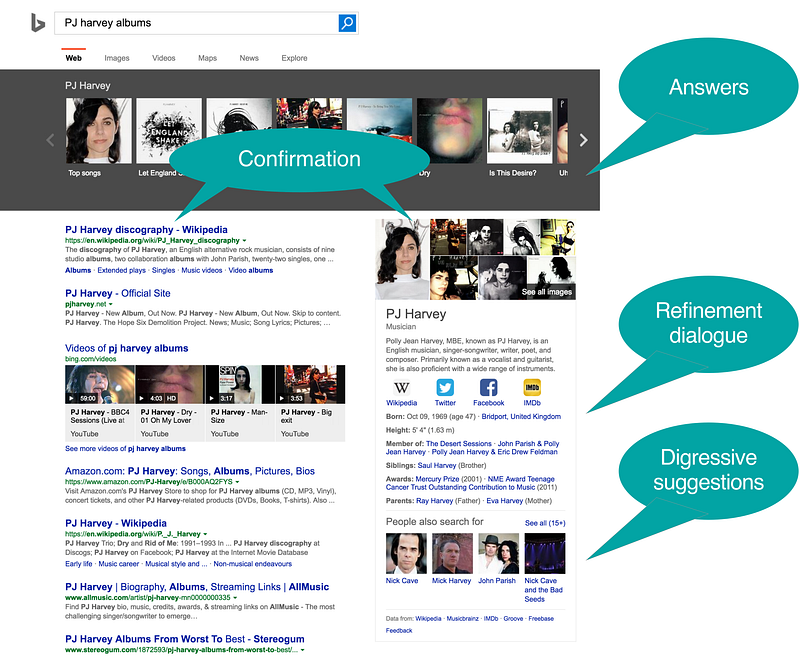

Components (referred to by Bing as ‘Dialogue Acts’) including answers, confirmation (of intent), disambiguation and suggestions (both progressive [refinement] and digressive [lateral]) are employed to facilitate a successful ongoing dialogue.

The example below illustrates how these components work to create a meaningful dialogue:

· Answers

The ‘grey bar’ displays exactly what I’ve asked for (in this case PJ Harvey albums). The format is usually flexible and context specific (it could just as easily display a text based term definition or a currency conversion).

· Confirmation

The results and knowledge graph ‘confirm’ the search engine has correctly interpreted my query. This is especially important when there’s more than one common context (for example, the query ‘apple’ could relate to either the corporation or the fruit).

· Refinement dialogue (progressive suggestion)

Facilitates the conversation/dialogue by serendipitously displaying the most frequent progressive (next) searches.

· Digressive suggestions

Aids discovery and reduces friction by offering common (lateral) progressions of the search ‘dialogue’.

Cutting out the middleperson

As seen above, ‘Knowledge graphs’ provide instant answers to a broad range of queries (instead of suggesting links to pages that might contain the answer).

Knowledge graphs often anticipate the next logical query (e.g. what’s the weather like later today / tomorrow) and provide simple interactions to easily access this information.

Google knowledge graph has more than 70 billion facts about people, places, things. + language, image, voice translation — Sundar Pichai (Google CEO)

Mobile, Mobile, Mobile

More recently, the major influence on search has been the increasing dominance of mobile devices. The inherent overhead of text input has created a shift from ‘typed’ to ‘tapped’ interaction models and led to an increasing number of voice searches, evolving the ‘dialogue experience’ to its logical conclusion: a (literal) conversation.

The biggest three challenges for us still will be mobile, mobile, mobile — Amit Singhal (Ex-Senior vice president of search at Google) [2015]

Less typing, more tapping

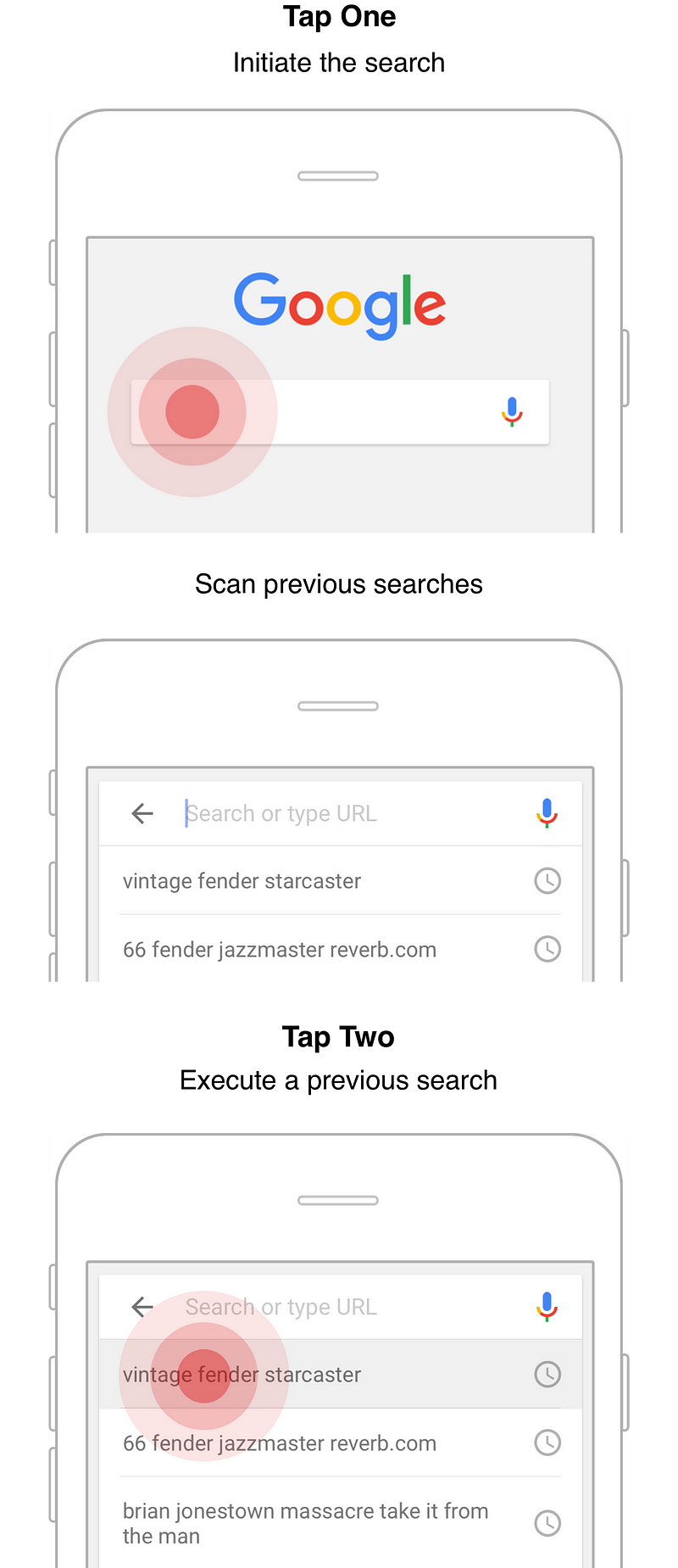

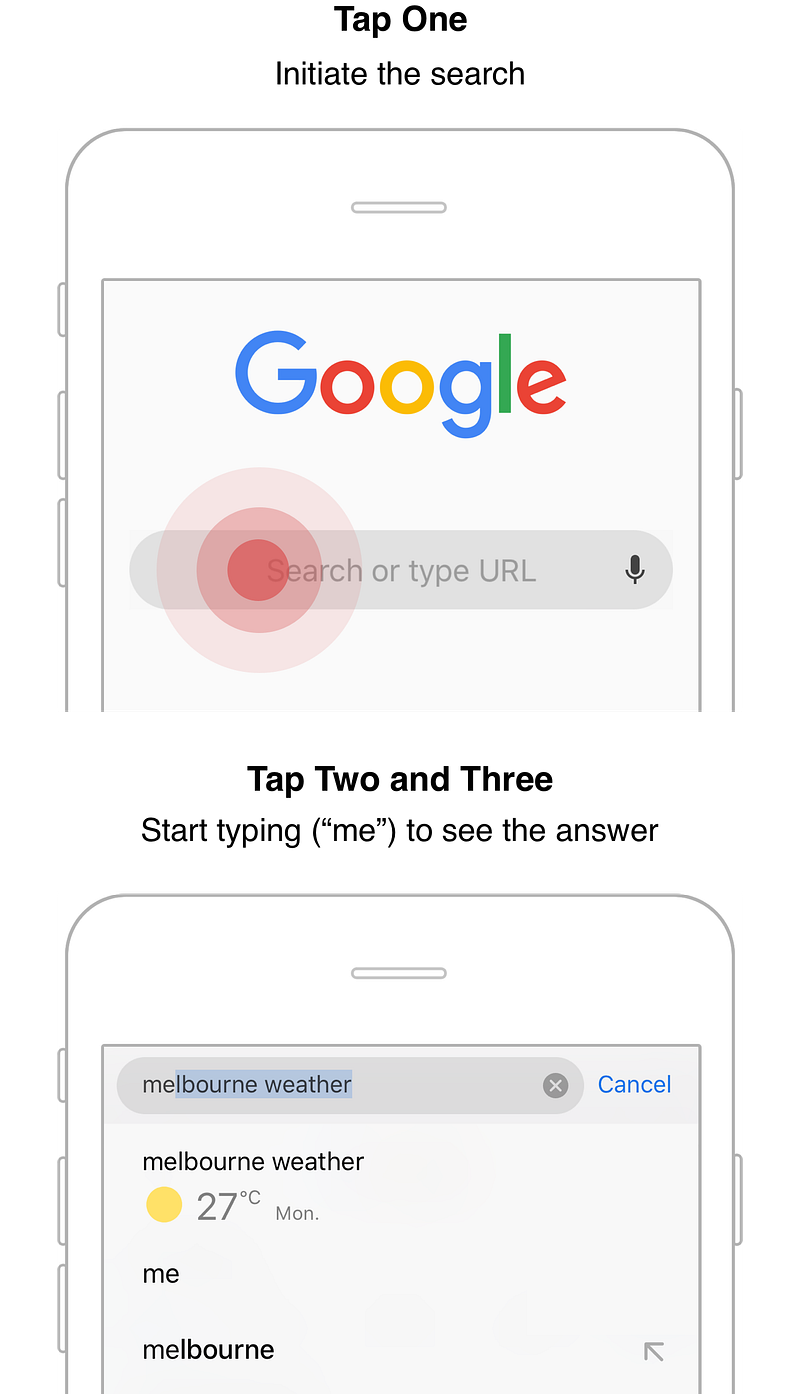

Serendipity is key to reducing the burden of user input, and previous search behaviour can help predict current search requirements.

Tap interaction examples:

Presenting previous searches as default suggestions creates the possibility of an efficient ‘two tap’ interaction.

By taking into account factors such as the user’s location and general search patterns, relevant answers can be displayed with very little input (just a couple of letters).
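The ‘two tap’ idea can be sketched as a suggestion function that falls back to recent searches when nothing has been typed yet. This is an illustrative simplification: the `suggest` function and the sample history are hypothetical, and it only considers the user's own history, not location or general search patterns.

```python
def suggest(prefix, history, limit=3):
    """Return up to `limit` past searches matching the typed prefix, most recent first."""
    prefix = prefix.lower().strip()
    seen = set()
    matches = []
    for past in reversed(history):  # history is stored oldest-first
        key = past.lower()
        # An empty prefix matches everything, so recent searches become the defaults.
        if key.startswith(prefix) and key not in seen:
            seen.add(key)
            matches.append(past)
    return matches[:limit]


# Made-up search history for illustration.
history = [
    "weather melbourne",
    "pj harvey albums",
    "restaurants richmond",
    "weather melbourne",
]
print(suggest("", history))    # defaults shown when the search box is tapped
print(suggest("re", history))  # narrowed after a couple of letters
```

Tapping the search box and then tapping a default suggestion is the two tap interaction. Typing ‘re’ narrows the list to the one matching past search.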

Less typing, more talking

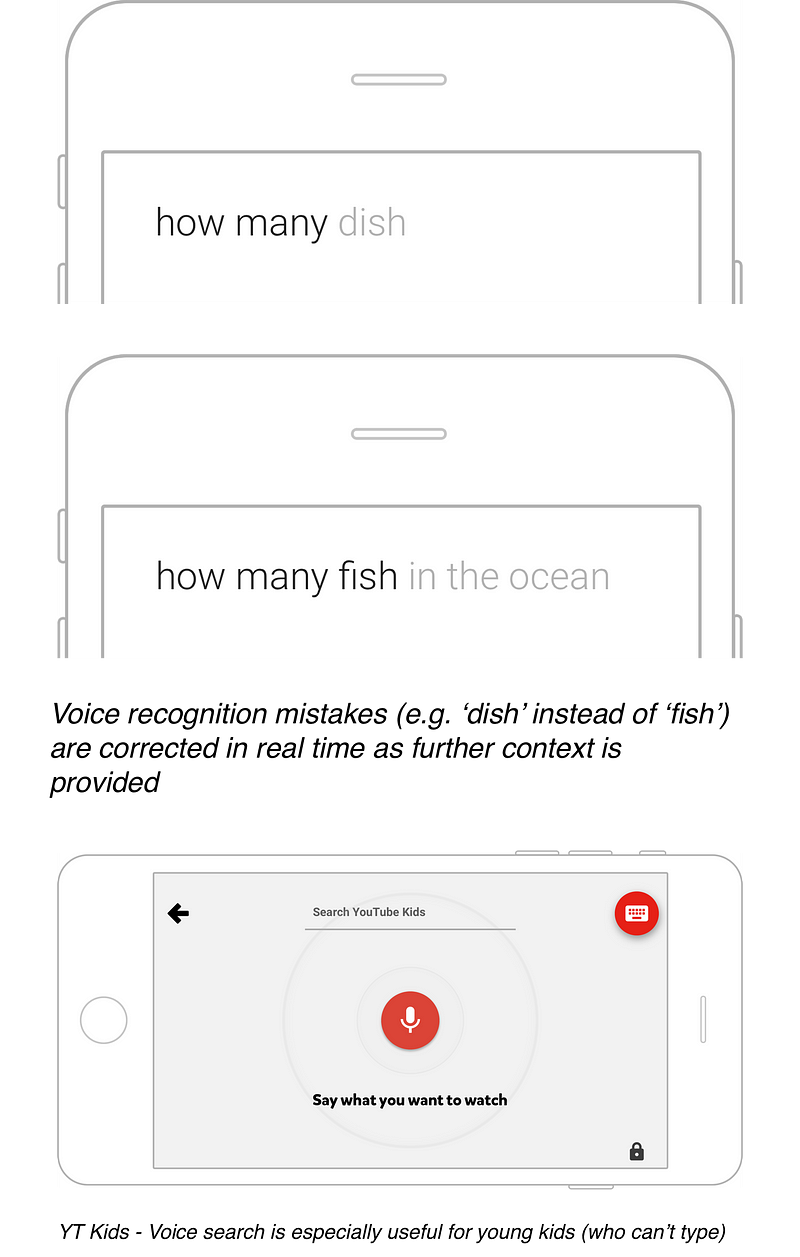

Voice search is a step further (in reducing the cost of user input) and has consequently become increasingly popular, especially in younger demographics, with more than 20% of Google and Bing searches conducted this way.

In response to this trend, Google invested heavily in ASR (Automatic Speech Recognition) technology, reducing error rates from 80% to 20% and, in the past couple of years, to around 8%!

The future of search

Is the future of search ‘Less searching’?

As recommendation engines and virtual assistants continue to rise in popularity, the traditional ‘active’ search box model is evolving into a ‘passive’ discovery (conversational) model.

Driving this shift are a few key trends:

· Contextual search

Mobile phones are especially good at providing contextual information (for example, your location). Despite there being approximately 60 Richmonds around the world, a search for ‘restaurants richmond’ returns results for the city I’m in, Melbourne. Conversational context (e.g. the ‘Iggy Pop… how old… how tall’ example above) and search history can also help establish context.

· Voice search

As discussed earlier, rapid advances in voice recognition technology are turning voice input from an error-prone novelty into a viable and efficient input method.

· Conversational search

Advancements in AI have enabled us to converse with search engines / virtual assistants in a more intuitive and human manner.

Why now?

The shift towards mobile devices has paradoxically increased the availability of, and appetite for, information but decreased the ease of accessing it (small screens are inherently difficult to interact with, especially when multitasking).

We’ve also reached a critical milestone where Google have successfully indexed (and organised) the world’s knowledge, Facebook know more about you than you probably realise and Amazon have amassed a wealth of data on consumer behaviour.

Vast amounts of both generalised and personal data (shared easily through APIs), combined with advancements in voice recognition have evolved virtual assistants from an interesting concept to a viable proposition. Just as importantly, virtual assistants are a natural fit for mobile as they consolidate a broad range of functionality into one convenient, mobile friendly, interface (that’s always available).

What’s next?

It’s almost impossible to say with absolute certainty, but one emerging theory is that the platforms through which most digital services (including search) will be delivered will converge into a handful of widely adopted, UI-light applications (provided by tech giants such as Facebook, Google, Amazon and Apple).

Why? Efficiency. For users, learning how to use countless applications is inefficient and often confusing. For businesses, leveraging existing platforms is cheaper, and maintaining engagement with bespoke apps is becoming increasingly difficult: recent research from Forrester found people spend 84% of their smartphone time using just 5 apps. Is your app indispensable enough to be one of the 5?

What does this mean for User Experience professionals?

To design great search experiences we need to consider the following:

Defining (and measuring) success

Unlike an e-commerce experience (where a sale is a clear, measurable success metric), search experiences rarely have clearly definable outcomes or actions that consistently equate to success.

This is further complicated by the shift to providing instant answers (how do we differentiate between good and bad session abandonment?) and the increasing dominance of mobile devices (we can no longer use cursor movements as a proxy for user focus).

A shift towards more user-centric measurements

The inherent ambiguity in behavioural metrics led to the adoption of judgement-based measurements such as Discounted Cumulative Gain (DCG). Thousands of people (typically crowdsourced) rate the relevance of individual search results against defined criteria. DCG then combines those ratings into a single score for a results list, giving more weight to results near the top, and scores are aggregated across many queries and raters to reach statistical significance.
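For readers who want to see the arithmetic, here is a small sketch of one common formulation of DCG, in which each result's relevance rating is divided by the base-2 logarithm of its rank plus one. The function names and the 0 to 3 rating scale in the example are assumptions for illustration; other variants of the formula exist.

```python
import math


def dcg(relevances):
    """Discounted Cumulative Gain for a ranked list of relevance ratings.

    `relevances[0]` is the rating of the top result. Each rating is divided
    by log2(rank + 1), so results lower down the page contribute less.
    """
    return sum(
        rel / math.log2(rank + 1)
        for rank, rel in enumerate(relevances, start=1)
    )


def ndcg(relevances):
    """DCG normalised by the best possible ordering of the same ratings."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


# Hypothetical ratings (0 = irrelevant, 3 = highly relevant) for six results.
ratings = [3, 2, 3, 0, 1, 2]
print(round(dcg(ratings), 3))
print(round(ndcg(ratings), 3))
```

The normalised version is useful when comparing queries with different numbers of relevant results, because a perfectly ordered list always scores 1.0.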

Whilst measuring the relevance of individual result sets is still important, a dialogue model is an interactive experience, occurring over time (and several interactions). Therefore measuring the holistic experience is arguably a closer reflection of actual success.

Example search metrics include:

· Session Success rate (SSR)

· Time to Success (TTS)

· Context aware search abandonment prediction

· Net Promoter Score (NPS)
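To make the first two metrics concrete, the sketch below computes them from a handful of sessions. The definitions used here are assumptions for the example: success rate is the share of sessions flagged as successful, and time to success is the mean number of seconds from the first query to the successful interaction. How ‘success’ is detected in the first place is the hard part and is not addressed here. The `Session` fields and sample values are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Session:
    duration_seconds: float
    # Seconds from the first query to the successful interaction,
    # or None if the session never reached a successful outcome.
    success_after_seconds: Optional[float]


def session_success_rate(sessions):
    """Share of sessions that ended in success (0.0 when there are no sessions)."""
    if not sessions:
        return 0.0
    successes = sum(s.success_after_seconds is not None for s in sessions)
    return successes / len(sessions)


def mean_time_to_success(sessions):
    """Average seconds to success across successful sessions, or None if there are none."""
    times = [
        s.success_after_seconds
        for s in sessions
        if s.success_after_seconds is not None
    ]
    return mean(times) if times else None


# Made-up sample data.
sample = [
    Session(duration_seconds=45, success_after_seconds=20),
    Session(duration_seconds=120, success_after_seconds=None),
    Session(duration_seconds=60, success_after_seconds=40),
]
ssr = session_success_rate(sample)
tts = mean_time_to_success(sample)
print(f"SSR: {ssr:.0%}, TTS: {tts} seconds")
```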

For a more detailed look at measuring ‘search success’, check out Wilson Wong’s “Can’t we just talk”

How to reliably test / validate search concepts

Testing search models with dummy results typically yields poor insights (and prototyping with genuine results is often not possible). Dummy results tend to suffer from:

· Reduced user engagement (after all, results/answers are the primary reason for searching).

It becomes difficult to assess whether the type and quantity of information displayed (on individual search results) is optimal.

Awareness of, and engagement with, elements other than search results increases significantly, reducing the accuracy of findings. It’s very easy to underestimate just how tunnel-visioned people become when looking at results they’re genuinely interested in.

· Introducing unnecessary confusion

If search results don’t respond to re-querying or refinements as expected, doubt gets cast over whether the user’s action was acknowledged by the search engine. This has the potential to skew or invalidate metrics (for example, a System Usability Scale (SUS) score) and cause participants to lose confidence and/or disengage.

When testing with real results isn’t an option, possible alternatives include:

· Check for other sites / apps that employ a model (or interactions) similar enough to use as a proxy.

· Conduct additional research as soon as you have functional (pre-production) code. The initial round (with dummy results) will at least catch low-hanging usability issues.

· Hack it! If you’re using prototyping software that supports dynamic content / variables (such as Axure) you can:

If the expected query terms are predictable enough, injecting the query text (as a variable) into the results creates a crude approximation of real results. Axure’s ‘Repeater widgets’ are helpful for prototyping interactive search experiences and reduce the effort required to make changes as you iterate the design.

Failing this, simply transitioning between different (dummy) result sets in response to user actions gives the user feedback that their action has been registered. You can also explain the limitations of the prototype to participants.
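The query injection trick is not tied to any one prototyping tool. As a tool-agnostic sketch (this is not Axure's own syntax), the hypothetical function below builds placeholder results that echo the participant's query back to them, so the prototype visibly responds to what was typed.

```python
def dummy_results(query, count=3):
    """Build placeholder results that echo the query text back to the participant."""
    query = query.strip()
    return [
        {
            # The query text is injected into otherwise static dummy content.
            "title": f"{query.title()} - sample result {i}",
            "snippet": f"Placeholder text mentioning '{query}'.",
        }
        for i in range(1, count + 1)
    ]


for result in dummy_results("pj harvey albums"):
    print(result["title"])
```

Because the titles change with every new query, participants get some reassurance that their action was acknowledged, even though the content itself is fake.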

Challenges in meeting (and exceeding) user expectations

Rapid advancements in search technology (and experience) have been mirrored by user expectations. As search engines became better at understanding intent, users responded with shorter (more ambiguous) search terms, creating an evolutionary ‘hump’ that’s difficult to overcome.

Also, while we’ve seen advancements in ‘gross personalisation’ (for example, factoring in a person’s location), ‘fine grain personalisation’ hasn’t developed at the same pace despite significant investment from leading tech companies. For example, if you purchase a gift (for someone else), you’re likely to receive recommendations (for you) based on this purchase. The balance between failing to respond to a new ‘trend’ quickly enough (and missing a commercial opportunity) and reacting too soon (and annoying the user) has been more difficult to strike than expected.

Moral dilemmas (Filter Bubbles and Privacy)

Tracking and profiling users enabled search engines to improve relevance whilst simultaneously requiring less user input. However, this personalisation creates ‘Filter Bubbles’, where users see a limited subset of results (that reflect and reinforce their personal views and political leanings) rather than the whole picture.

Interestingly, the search engine DuckDuckGo differentiates itself by consciously avoiding filter bubbles. Rather than relying on assumptions derived from users’ previous search activity, it explicitly asks the user to clarify any ambiguity of intent and shows ‘all’ relevant results.

Eli Pariser’s TED talk ‘Beware online “filter bubbles”’ provides a good overview of potential concerns.

Cultural differences / expectations

Sometimes information expectations are culturally influenced. For example, in China it’s quite common to be interested in celebrities’ blood type!

Conclusion

Whilst search experiences have improved significantly, advances in Artificial Intelligence are propelling search into new and exciting trajectories. Traditional ‘active’ models are evolving towards serendipitous, ‘passive’, experiences that seamlessly anticipate and meet our needs (requiring increasingly less explicit user input).

As we transition from the ‘information’ to the ‘intelligence’ age we’re becoming increasingly reliant on products and services to anticipate our needs and provide the information, advice and recommendations we’d previously ‘actively’ searched for.
